As we begin 2010, it’s time for me to go on the record with some predictions. A review of my 2009 predictions shows a few hits, a couple of half-credits and a few more misses. Then again, many of my predictions were pretty bold.

This year is no different.

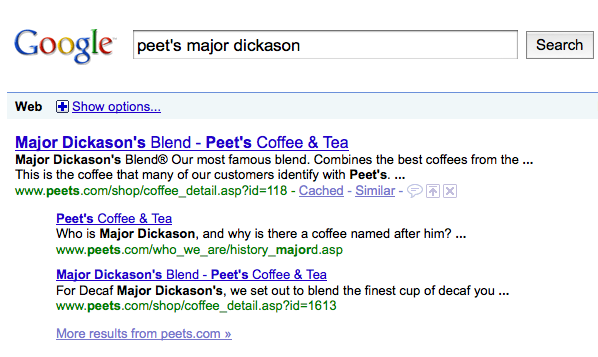

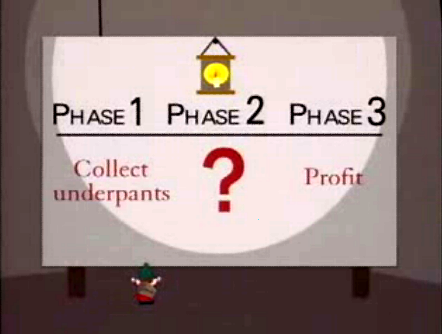

The Link Bubble Pops

At some point in 2010, the link bubble will pop. Google will be forced to address rising link abuse and neutralize billions of links. This will be the largest change in the Google algorithm in many years, disrupting individual SEO strategies as well as larger link-based models such as Demand Media.

Twitter Finds a Revenue Model

As 2010 wears on, Twitter will find and announce a revenue model. I don’t know what it will be and I’m unsure it will work, but I can’t see Twitter waving their hands for yet another year. Time to walk the walk, Twitter.

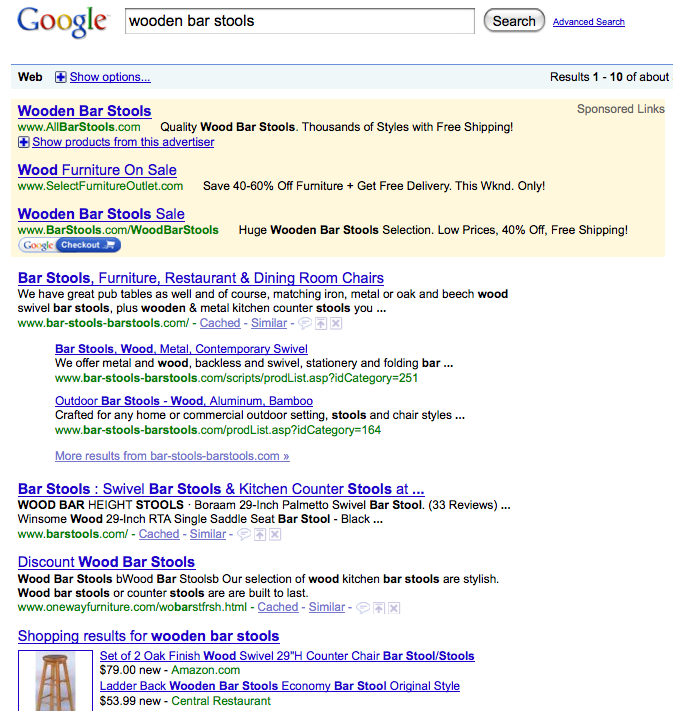

Google Search Interface Changes

We’ve already seen the search mode test that should help users navigate and refine search results. However, I suspect this is just the beginning and not the end. The rapid rate of iteration by the Google team makes me believe we could see something as radical as LazyFeed’s new UI or the New York Times Skimmer.

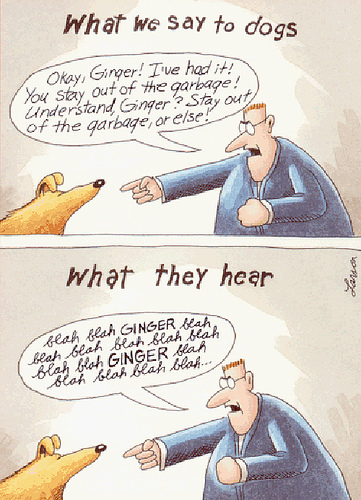

Behavioral Targeting Accelerates

Government and privacy groups continue to rage against behavioral targeting (BT), seeing it as some Orwellian advertising machine hell-bent on destroying the world. Yet behavioral targeting works, and savvy marketers will win against these largely ineffectual groups and general consumer apathy. Ask people if they want targeted ads and they say no; show them targeted ads and they click.

Google Launches gBooks

The settlement between Google, the Authors Guild and the Association of American Publishers will (finally) be granted final approval and then the fireworks will really start. That’s right, the settlement brouhaha was the warm-up act. Look for Google to launch an iTunes-like store (aka gBooks) that will be the latest in the least talked about war on the Internet: Google vs. Amazon.

RSS Reader Usage Surges

What, isn’t RSS dead? Well, Marshall Kirkpatrick doesn’t seem to think so and Louis Gray doesn’t either. I’ll side with Marshall and Louis on this one. While I still believe marketing is the biggest problem surrounding RSS readers, advancements like LazyFeed and Fever make me think the product could also advance. I’m still waiting for Google to provide their reader as a white label solution for eTailers fed up with email overhead.

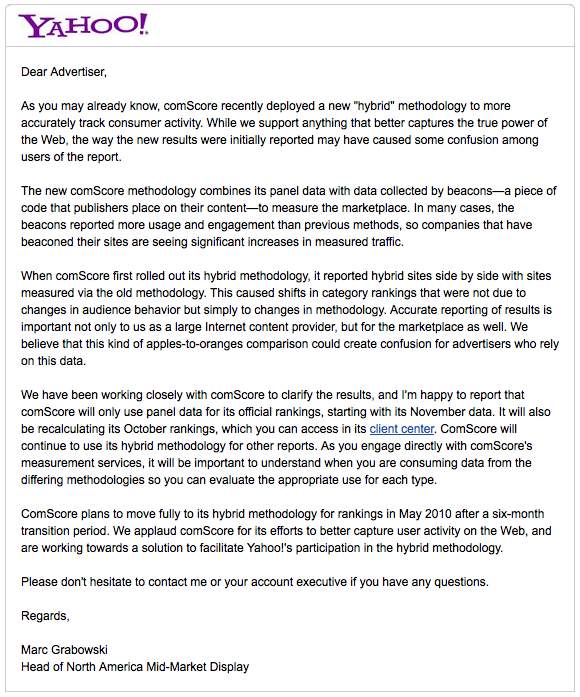

Transparent Traffic Measurement Arrives

Publishers and advertisers are tired of ballpark figures or trends that are merely directionally accurate. Between Google Analytics and Quantcast, people now expect a certain level of specificity. Even comScore is transitioning to beacon-based measurement. Panel-based traffic measurement will recede, replaced by transparent beacon-based measurement … and there was much rejoicing.

Video Turns a Profit

Online video adoption rates have soared and more and more premium content is readily available. Early adopters bemoan the influx of advertising units, trying to convince themselves and others that people won’t put up with it. But they will. Like it or not, the vast majority of people are used to this form of advertising and this is the year it pays off.

Chrome Grabs 15% of Browser Market

Depending on who you believe, Chrome has already surpassed Safari. And this was before Chrome was available for Mac. That alone isn’t going to get Chrome to 15%. But you recall the Google ‘What’s a Browser?’ video, right? Google will disrupt browser inertia through a combination of user disorientation and brand equity. Look for increased advertising and bundling of Chrome in 2010.

Real Time Search Jumps the Shark

2009 was, in many ways, the year of real time search. It was the brand new shiny toy for the Internati. Nearly everyone I meet thinks real time search is transformational. But is it really?

A Jonathan Mendez post titled Misguided Notions: A Study of Value Creation in Real-Time Search challenges this assumption. A recent QuadsZilla post also exposes a real time search vulnerability. The limited query set and influx of spam will reduce real time search to an interesting, though still valuable, add-on. The Internati? They’ll find something else shiny.