This blog is in a sad state. It was hacked and the recovery process wasn’t perfect.

I should fix it and I should blog more! But my consulting business is booming. And almost all new business comes via referrals. There’s only so much time – that precious finite resource! So I shrug my digital shoulders and think, it’s good enough.

Good enough might cut it for some random blog. But not for a search engine. Yet, that’s what I see happening in slow-motion over the past few years. Google search has become good enough or as I’ve come to think of it – goog enough.

Photo Credit: John Margolies

So how did we get here? Well, it’s long and complicated. So grab the beverage of your choice and get comfortable.

(Based on reader feedback you can now navigate to the section of your choice with the following jump links. I don’t love having this here because it sort of disrupts the flow of the piece. But I acknowledge that it’s quite long and that some may want to return to the piece mid-read or cite a specific section.)

Brand Algorithm

Implicit User Feedback

Experts On Everything

Unintended Consequences

Weaponized SEO

The Dark Forest

Mix Shift

Information Asymmetry

Incentive Misalignment

Enshittification

Ad Creep

Context Switching and Cognitive Strain

Clutter

Google Org Structure

ChatGPT

Groundhog Day

Editorial Responsibility

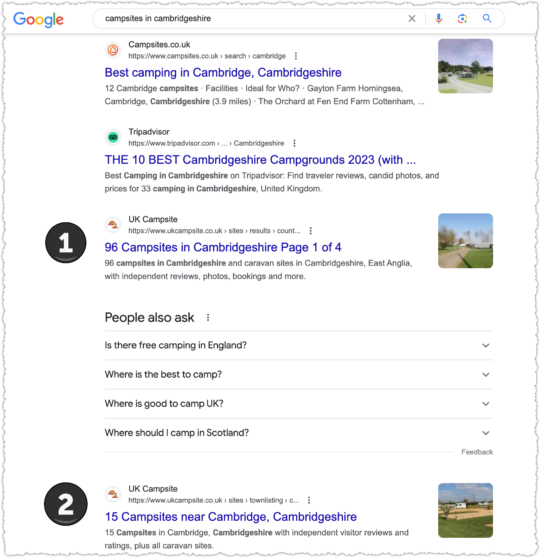

Food Court Search Results

From Goog Enough to Great

Brand Algorithm

One of the common complaints is that Google is biased toward brands. And they are, but only because people are biased toward brands.

My background, long ago, is in advertising. So I’m familiar with the concept and power of aided awareness, which essentially measures how well you recognize a brand when you’re prompted (i.e. – aided) with that brand.

Every Google search is essentially an aided awareness test of sorts. When you perform a Google search you are prompted with multiple brands through those search results. The ones that are most familiar often get an outsized portion of the clicks. And as a result those sites will rank better over time.

If you’re not in the SEO industry you may not realize that I just touched a third rail of SEO debate. Does Google use click data in their algorithms? The short answer is yes. The long answer is complex, nuanced and nerdy.

Implicit User Feedback

(Feel free to skip to the Experts On Everything section if technical details make you sleepy.)

I actually wrote about this topic over 8 years ago in a piece titled Is Click Through Rate a Ranking Signal?

I botched the title since it’s about more than just click-through rate. It’s about click data overall. But I’m proud of that piece and think it still holds up well today.

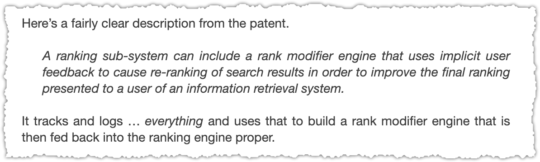

One of the more critical parts of that piece was looking at some of Google’s foundational patents. They are littered with references to ‘implicit user feedback’. This is a fancy way of saying, user click data from search engine results. The summation of that section is worth repeating.

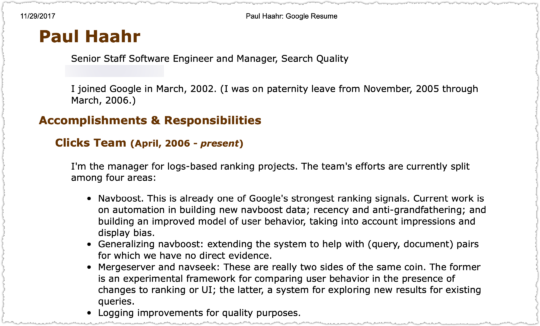

Since I wrote that other things have come to light. The first comes from Google documents leaked by Project Veritas in August of 2019. For the record, I have zero respect for Project Veritas and their goals. But one of the documents leaked was the resume of Paul Haahr.

The fact that there was a Clicks team seems rather straightforward and you’d need some pretzel logic to rationalize that it wasn’t about using click data from search results. But it might be easier to make the case using Navboost by looking at how it’s used elsewhere.

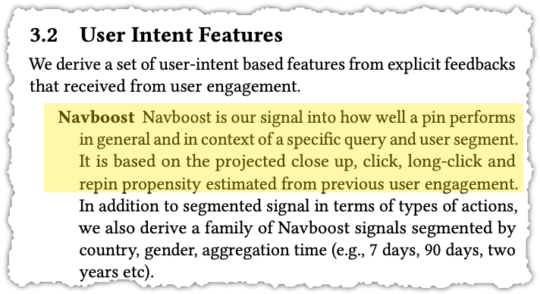

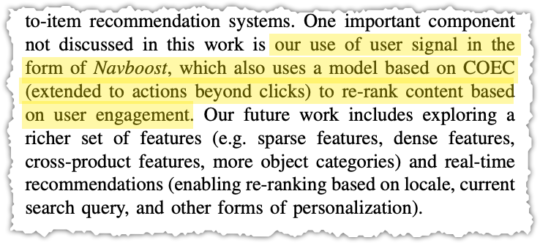

In this instance, it’s Pinterest, with the papers Demystifying Core Ranking in Pinterest Image Search (pdf) and Human Curation and Convnets: Powering Item-to-Item Recommendations on Pinterest (pdf).

Here are the relevant sections of both pertaining to Navboost.

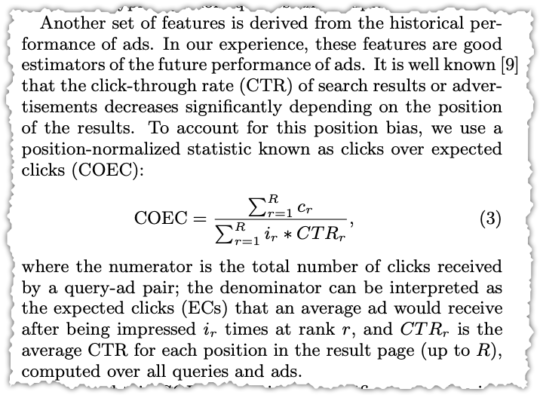

The COEC model is well-documented with this calculation excerpt from a Yahoo! paper (pdf).

The calculation looks daunting but the general idea behind Navboost is to provide a boost to documents that generate higher clicks over expected clicks (COEC). So if you were ranked fourth for a term and the expected click rate was x and you were getting x + 15% you might wind up getting a ranking boost.
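For the nerds, the arithmetic can be sketched in a few lines of Python. This is a minimal illustration of the clicks over expected clicks idea, not Google’s Navboost implementation. The expected click rates per position, the `coec` function name and the sample numbers are all assumptions made up for the example, and I’m reading "x + 15%" as 15% more clicks than expected.

```python
# Hypothetical expected click-through rate by ranking position.
# These values are invented for illustration, not taken from any paper.
EXPECTED_CTR = {1: 0.30, 2: 0.15, 3: 0.10, 4: 0.07, 5: 0.05}


def coec(observations):
    """Return clicks over expected clicks for one document.

    `observations` is a list of (position, impressions, clicks) tuples.
    A value above 1.0 means the document earned more clicks than its
    positions would predict; below 1.0 means fewer.
    """
    actual_clicks = sum(clicks for _, _, clicks in observations)
    expected_clicks = sum(
        impressions * EXPECTED_CTR[position]
        for position, impressions, _ in observations
    )
    if expected_clicks == 0:
        raise ValueError("no expected clicks; cannot compute COEC")
    return actual_clicks / expected_clicks


# A document ranked fourth that gets 15% more clicks than expected.
impressions = 10_000
expected = impressions * EXPECTED_CTR[4]  # about 700 expected clicks
observed = round(expected * 1.15)         # about 805 observed clicks
score = coec([(4, impressions, observed)])
print(f"COEC = {score:.2f}")
```

A score above 1.0 is the kind of signal that might earn a boost. How large a boost, and at what threshold, is not something this sketch tries to guess.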

The final pieces, which put an end to the debate, come from antitrust trial exhibits that include a number of internal Google presentations.

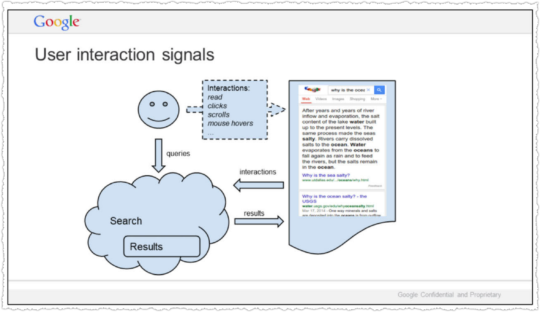

The first is Google presentation: Life of a Click (user-interaction) (May 15, 2017) (pdf) by former Googler Eric Lehman.

What’s crazy is that we don’t actually understand documents. Beyond some basic stuff, we hardly look at documents. We look at people. If a document gets a positive reaction, we figure it is good. If the reaction is negative, it is probably bad. Grossly simplified, this is the source of Google’s magic.

Yes, Google tracks all user interactions to better understand human value judgements on documents.

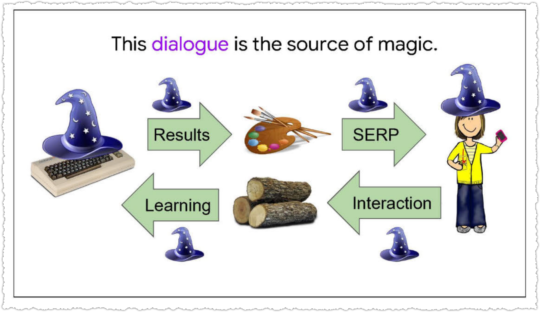

Another exhibit, Google presentation: Google is magical. (October 30, 2017) (pdf) is more concise.

The source of Google’s magic is this two-way dialogue with users.

Google presentation: Q4 Search All Hands (Dec. 8, 2016) goes into more depth.

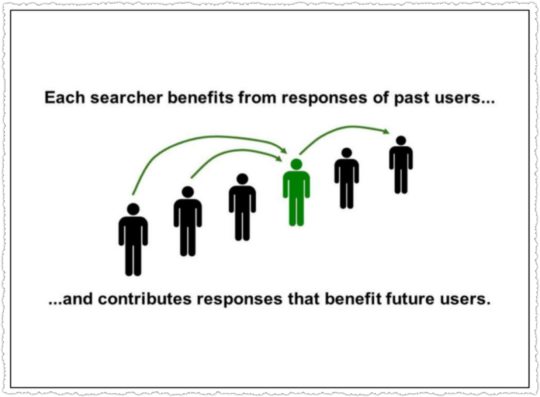

SO… if you search right now, you’ll benefit from the billions of past user interactions we’ve recorded. And your responses will benefit people who come after you. Search keeps working by induction.

This has an important implication. In designing user experiences, SERVING the user is NOT ENOUGH. We have to design interactions that also allow us to LEARN from users.

Because that is how we serve the next person, keep the induction rolling, and sustain the illusion that we understand.

The aggregate evaluations of prior user interactions help current search users who pass along their aggregate user interactions to future search users.

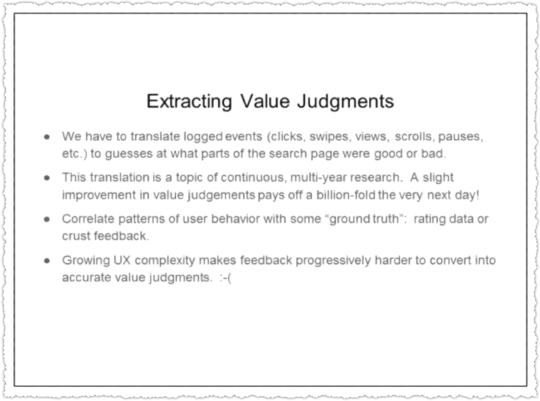

Perhaps the most important exhibit in this context is Google presentation: Logging & Ranking (May 8, 2020) (pdf)

Within that deck we get the following passages.

The logs do not contain explicit value judgments– this was a good search results, this was a bad one. So we have to some how translate the user behaviors that are logged into value judgments.

And the translation is really tricky, a problem that people have worked on pretty steadily for more than 15 years. People work on it because value judgements are the foundation of Google search.

If we can squeeze a fraction of a bit more meaning out of a session, then we get like a billion times that the very next day.

The basic game is that you start with a small amount of ‘ground truth’ data that says this thing on the search page is good, this is bad, this is better than that. Then you look at all the associated user behaviors, and say, “Ah, this is what a user does with a good thing! This is what a user does with a bad thing! This is how a user shows preference!’

Of course, people are different and erratic. So all we get is statistical correlations, nothing really reliable.

I find this section compelling because making value judgments on user behavior is hard. I am reminded of Good Abandonment in Mobile and PC Internet Search, one of my favorite Google papers by Scott Huffman, which determined that some types of search abandonment were good and not bad.

Finally, we find that these user logs are still the fuel for many modern ranking signals.

As I mentioned, not one system, but a great many within ranking are built on logs.

This isn’t just traditional systems, like the one I showed you earlier, but also the most cutting-edge machine learning systems, many of which we’ve announced externally– RankBrain, RankEmbed, and DeepRank.

RankBrain in particular is a powerful signal, often cited as the third most impactful signal in search rankings.

Boosting results based on user feedback and preference seems natural to me. But this assumes that all sides of the ecosystem – platform, sites and users – are aligned.

Increasingly, they are not.

Experts On Everything

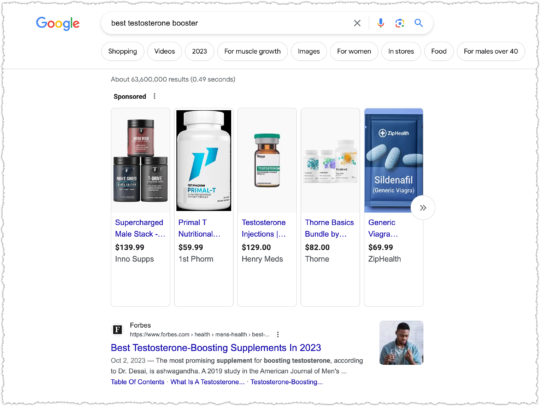

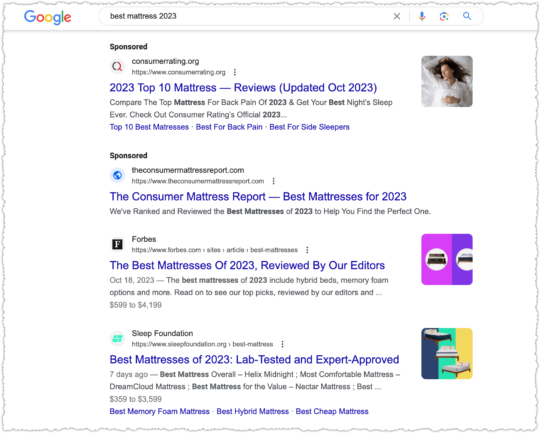

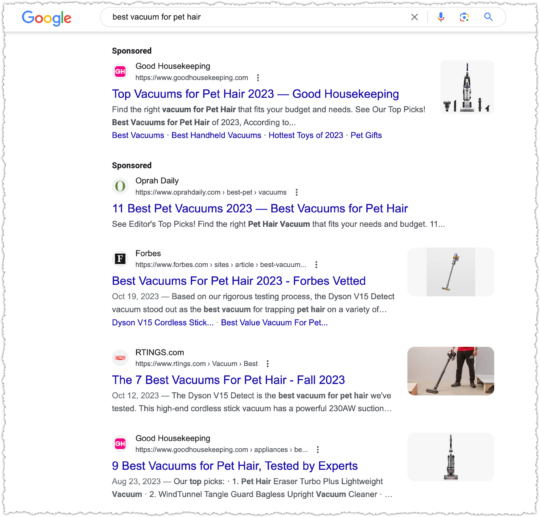

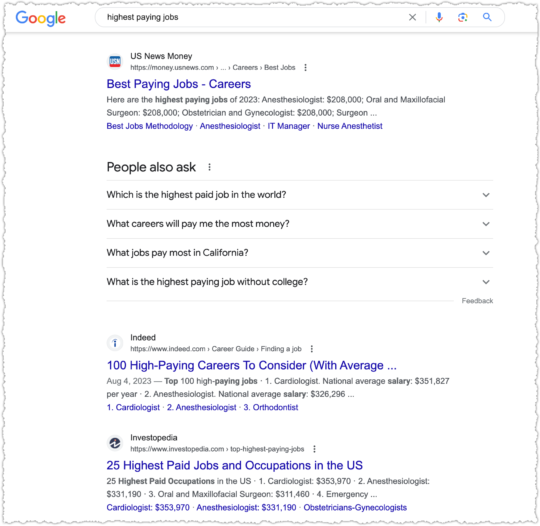

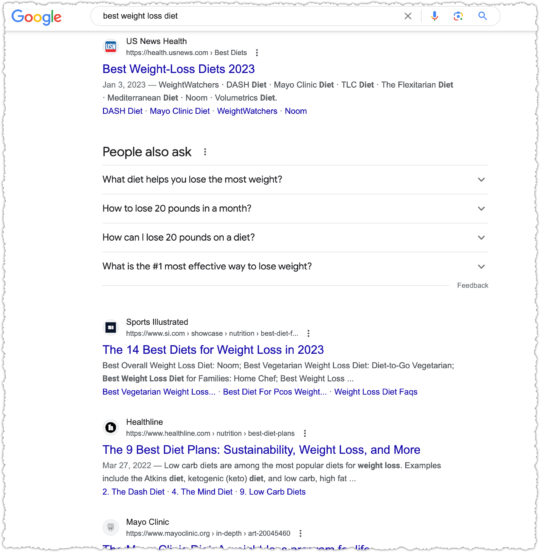

Whether or not you believe my explanation for why brands are ranking so well, it’s happening. In fact, a growing number of brands realize they can rank for nearly anything, even if it is outside of their traditional subject expertise.

I don’t exactly blame these brands for taking this approach. They’re optimizing based on the current search ecosystem. It’s not what I recommend to clients but I can understand why they’re doing it. In other words, don’t hate the player, hate the game.

With that out of the way, I’ll pick on Forbes.

Published eight times a year, Forbes features articles on finance, industry, investing, and marketing topics. It also reports on related subjects such as technology, communications, science, politics, and law.

Funny, I don’t see health in that description. But that doesn’t stop Forbes from cranking out supplement content.

Need some pep for your sex drive? Forbes is goog enough!

Need a good rest? Forbes is goog enough!

Got a pet that sheds? Forbes is goog enough.

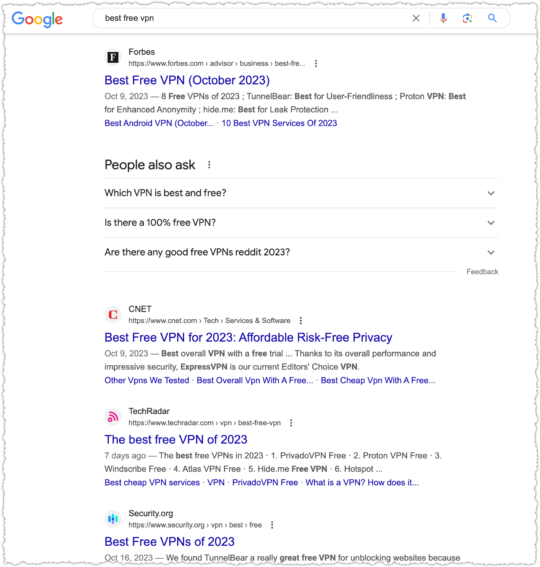

Or maybe you’re looking for a free VPN? Forbes? Goog enough!

Now this is, at least, technology adjacent, which is in their related topics description in Wikipedia. But does anyone really think Forbes has the best advice on free VPNs?

Forbes still works under a contributor model, which means you’re never quite sure as to the expertise of the writer and why that content is being produced other than ego and getting ad clicks. (I mean, you have to be a click ninja to dodge the ads and read anything on the site anyway.)

It’s not just me that thinks this model produces marginal content. An incomplete history of Forbes.com as a platform for scams, grift, and bad journalism by Joshua Benton says it far far better than I could.

And unlike a bunch of those folks on Forbes, Joshua has the writing chops.

Perhaps the most notable gaffe was having Heather R. Morgan (aka – Razzlekhan) write about cybersecurity when she was guilty of attempting to launder $4.5 billion of stolen bitcoin.

But regular people still have a positive brand association with Forbes. They don’t know about the contributor model or the number of times it’s been abused.

So they click on it. A lot. Google notices these clicks and boosts it in rankings and then more people click on it. A lot.

The result is, according to ahrefs, a little over 70MM in organic traffic each month.

Forbes isn’t the only one doing this, though they might be the most successful.

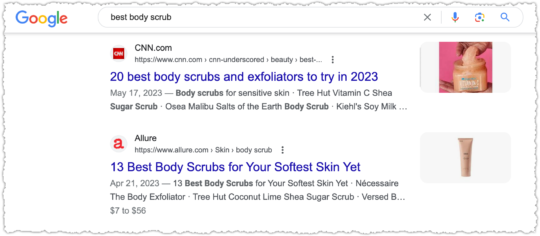

When you’re looking for the best body scrub you naturally think of … CNN?

Goog enough!

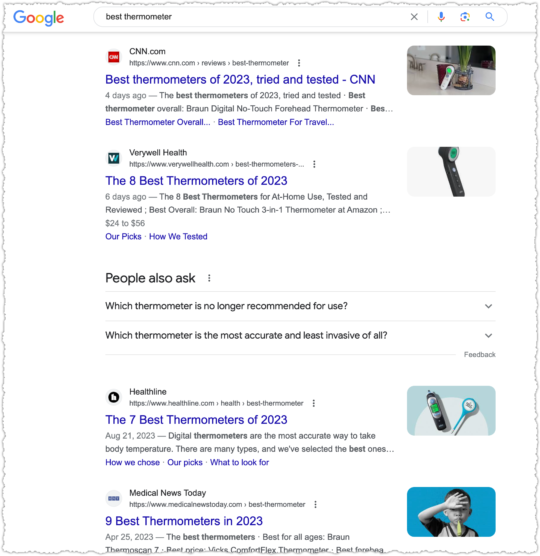

Or maybe you’ve caught this round of COVID and you need a thermometer.

Silly health sites. CNN is goog enough!

All of this runs through the /cnn-underscored/ folder on CNN, which is essentially a microsite designed to rank for and monetize search traffic via affiliate links. It seems modeled after The New York Times and its Wirecutter content.

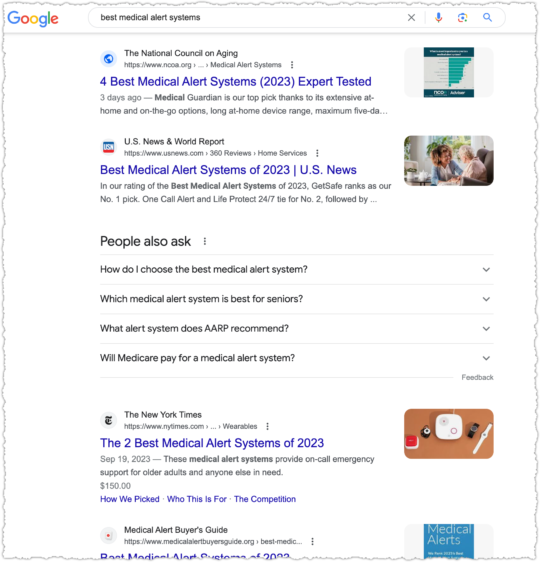

There are plenty of others I can pick on including Time and MarketWatch. But I’ll only pick on one more: U.S. News & World Report.

Looking for the best HVAC system?

U.S. News is goog enough!

When it comes to cooking though, some sort of recipe or food site has to be ranking, right?

Naw, U.S. News is goog enough!

U.S. News takes it a step further when they get serious about a vertical. They launch subdomains with, by and large, content from third-parties. Or, as they are prone to do, they take third-party data and weight it to create rankings.

You might have heard about some of the issues surrounding their College Rankings. Suffice to say, I find it hard to believe U.S. News is the very best result across multiple verticals, which include cars, money, health, real estate and travel.

At this point you know the refrain. In most cases, it’s goog enough!

At least in this last one The National Council on Aging is ahead of U.S. News. But they aren’t always and I fail to see why it should be this close.

Unintended Consequences

The overreliance on brand also creates sub-optimal results outside of those hustling for ad and affiliate clicks.

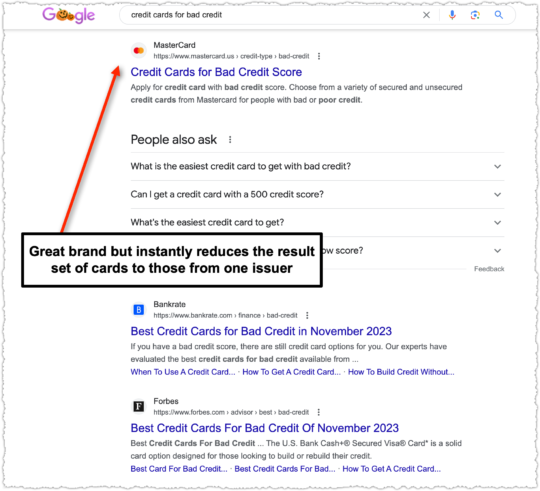

Here MasterCard is ranking for a non-branded credit card query. MasterCard is clearly a reputable and trusted brand within this space. But this is automatically a sub-optimal result for users because it excludes cards from other issuers like Visa and Discover.

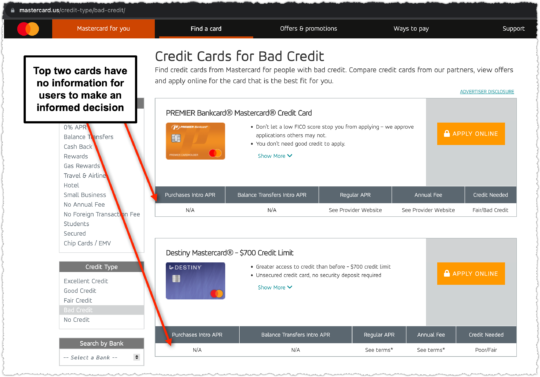

It gets worse if you know a bit about this vertical. When you click through to that MasterCard page you’re not provided any information to make an informed decision.

Why isn’t MasterCard showing the rates and fees for these cards?

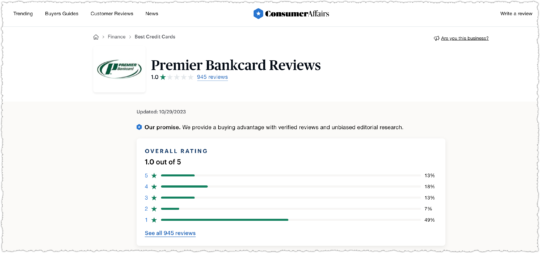

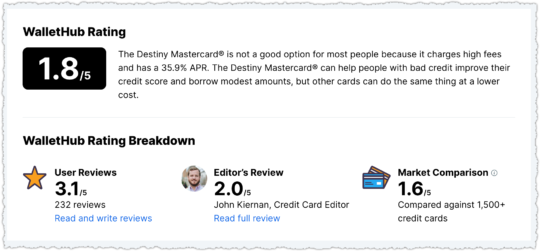

In short, those cards are pretty dreadful for consumers, particularly consumers who are in a vulnerable financial position. And the rate and fee information is available as you can see in the WalletHub example. There are better options available but users may blindly trust the MasterCard result due to brand affinity.

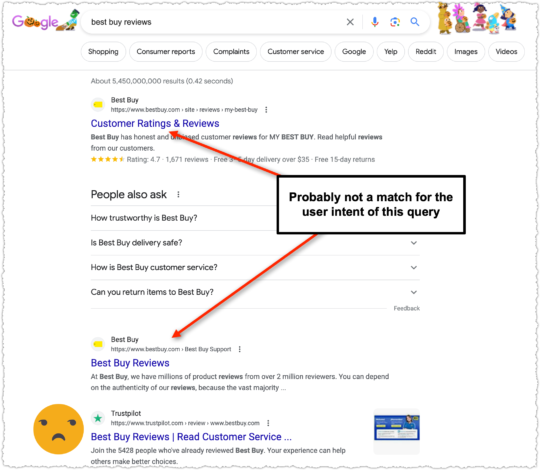

A lot of the terms I’ve chosen revolve around the ‘best’ modifier, which is in many ways a proxy for review intent. And reviews are a bit of a bugaboo for Google over the last few years. They even created a reviews system to address the problem. Yet, we still get results for review queries where the site or product itself is ranking.

If I’m looking for reviews about a site or product I’m not inclined to believe the reviews from that site. It’s like getting an alibi from your spouse.

The thing is, I get why Best Buy is trying so hard to rank well for this term. Because right below them is TrustPilot.

Weaponized SEO

What’s the problem with TrustPilot? Well, let me tell you. The way their business model works is by ranking for a ‘[company/site] reviews’ term with a bunch of bad reviews and a low rating.

Once TrustPilot ranks well (usually first) for that term, it does some outreach to the company. The pitch? They can help turn that bad rating into a good one for a monthly fee.

If you pay, good reviews roll in and that low rating turns around in a jiffy. Now when users search for reviews of your company they’ll know you’re just aces!

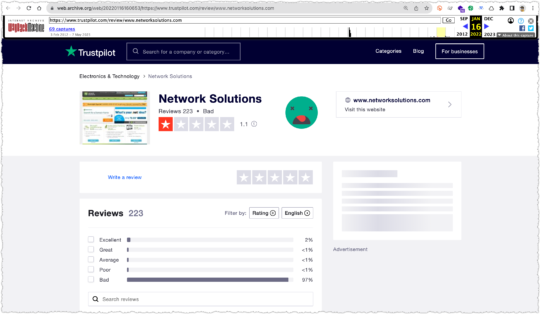

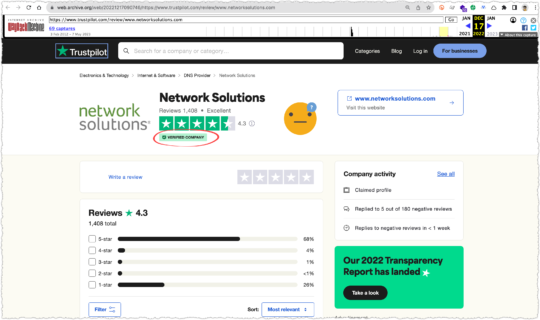

One of the better examples of this is Network Solutions, a company that a friend of mine has written about in great detail. Using the Internet Archive you can see that Network Solutions had terrible ratings on TrustPilot as of January 2022 when they were not a customer.

By December 2022 Network Solutions had become a customer (i.e. – verified company) and secured a rating of 4.3.

Some of you might be keen enough to look at the distribution of ratings and wonder how Network Solutions can have a 4.3 rating with 26% of the total being 1.0.

A simple weighting of the ratings would return a 3.87.

(5*.68)+(4*.04)+(3*.01)+(2*.01)+(1*.26) = 3.87

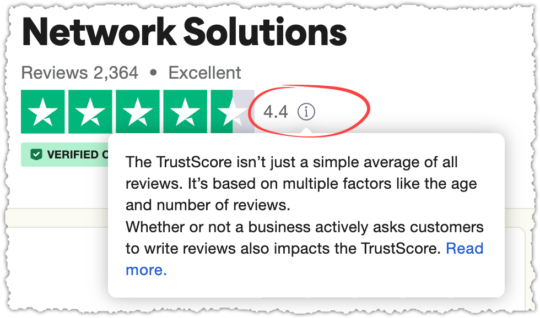

But if you hover over that little (i) next to the rating you find out they don’t use a simple average.

Following that link you can read how TrustScore is calculated. I have to say, I’m grudgingly impressed in an evil genius type of way.

- Time span. A TrustScore gives more weight to newer reviews, and less to older ones. The most recent review holds the most weight, since newer reviews give more insight into current customer satisfaction.

- Frequency. Businesses should continuously collect reviews to maintain their TrustScore. Because the most recent review holds the most weight, a TrustScore will be more stable if reviews are coming in regularly.

So all the bad reviews and ratings they collected to rank and strong arm businesses can be quickly swept away based on review recency. And all you have to do to keep your ratings high is to keep using their product. Slow clap.
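To see how much recency weighting can move a score, here is a minimal Python sketch. The simple average reproduces the 3.87 from the rating distribution above. The recency-weighted version is purely an assumption: the passages quoted here don’t give an exact formula, so the exponential decay, the `half_life_days` parameter and the sample review history are all hypothetical.

```python
def simple_average(distribution):
    """Weighted mean of star ratings given {stars: share_of_reviews}."""
    return sum(stars * share for stars, share in distribution.items())


def recency_weighted_average(reviews, half_life_days=90):
    """Average star rating where newer reviews count for more.

    `reviews` is a list of (stars, age_in_days) tuples. Each review's
    weight halves every `half_life_days` (an assumed decay schedule,
    not Trustpilot's published method).
    """
    weights = [0.5 ** (age / half_life_days) for _, age in reviews]
    weighted_sum = sum(w * stars for w, (stars, _) in zip(weights, reviews))
    return weighted_sum / sum(weights)


# The Network Solutions distribution cited above.
distribution = {5: 0.68, 4: 0.04, 3: 0.01, 2: 0.01, 1: 0.26}
print(f"Simple average: {simple_average(distribution):.2f}")

# Hypothetical history: 26 old 1-star reviews, then 68 recent 5-star ones.
reviews = [(1, 400)] * 26 + [(5, 10)] * 68
unweighted = sum(stars for stars, _ in reviews) / len(reviews)
weighted = recency_weighted_average(reviews)
print(f"Unweighted: {unweighted:.2f}")
print(f"Recency weighted: {weighted:.2f}")
```

With these made-up inputs the old one-star reviews barely register once fresh five-star reviews arrive, which is the dynamic described above.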

I would bet customers have no idea this is how ratings are calculated. Nor do they understand, or likely care, about how TrustPilot uses search to create a problem their product solves. But TrustPilot looks … trustworthy. Heck, trust is in their name!

Now a company is likely neither as bad before nor as good after they become a TrustPilot customer. There is ballot box stuffing on both sides of the equation. But it’s unsettling that reddit is awash in complaints about TrustPilot.

Ugly truth behind TrustPilot Reviews contains the following comment:

I tried to leave a bad review on Trustpilot once, but the business was given an opportunity to protest before my review was published. TP demanded proof of my complaint. I provided an email chain but the business kept arguing nonsense and TP defaults to taking their side. The review was never posted. I’ve assumed since then that the site is completely useless because businesses seem to be able to complain until reviews get scrubbed.

Finding out that Trustpilot is absolutely NOT trustworthy! contains the following comment:

I complained about an insurance company who failed to look for the other party in an accident, failed to sort out the courtesy car, and didn’t call us or write to us when they said they would in a review. The company complained and TrustPilot took it down. I complained and TrustPilot asked me to provide evidence of these things that didn’t happen. I asked them what evidence of nonexistent events would satisfy them and they said it was up to me to work that out.

Fake Trustpilot review damaging my business contains the following comment:

I would just add, Trustpilot is a tax on businesses. It ruined my business because, usually, only unhappy people leave a review unsolicited. However, if you pay Trustpilot, they’ll send review requests to every customer and even sort you out with their special CMS.

So why do I have such a bee in my bonnet about TrustPilot and what does it have to do with search? The obvious issue is that TrustPilot uses a negative search result to create a need for their product.

It’s a mafia style protection racket. “Too bad about those broken windows” says the thug as they smash the glass. “But I think I can help you fix that.”

Let me be clear, I’d have little to no problem with TrustPilot if they were simply selling a product that helped companies deliver reviews to other platforms like Yelp or the Play Store.

The second reason is that a large number of people trust these ratings without knowing the details. I’m concerned that any ranking signal that is using click preference will be similarly trained.

The last reason is that Google has been rightly cracking down on the raft of MFA (Made for Adsense/Amazon) review sites that offered very little in the way of value to users. It was easy to ferret out these small spammy sites all with the same lists of products that would deliver the highest affiliate revenue.

Google got rid of all the corner dealers but they left the crime bosses alone.

I could paint a much darker portrait though. Google is simply a mirror.

Users were more prone to finger those scraggly corner dealers but are duped by the well dressed con man.

The Dark Forest

By now you’re probably sick and tired of search result screenshots and trying to determine the validity, size and scope of these problems.

I know I am. It’s exhausting and a bit depressing. What if this fatigue is happening when people are performing real Google searches?

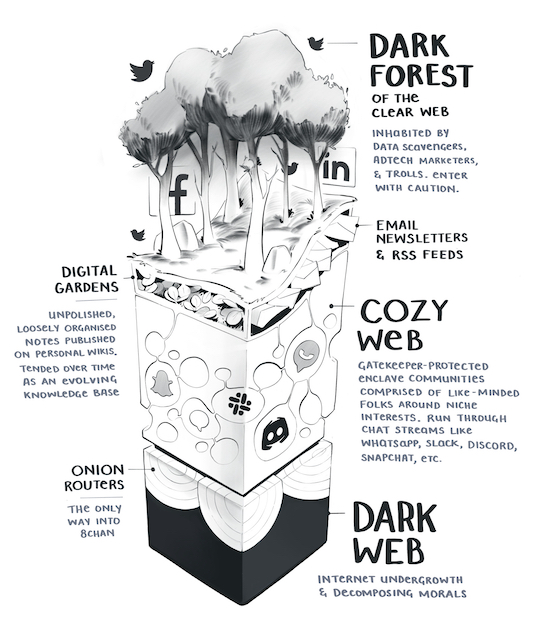

Enter the Dark Forest Theory.

Illustration Credit: Maggie Appleton

In May of 2019 Yancey Strickler wrote The Dark Forest Theory of the Internet. I was probably late to reading it but ever since I did it’s been rattling around in my head.

In response to the ads, the tracking, the trolling, the hype, and other predatory behaviors, we’re retreating to our dark forests of the internet, and away from the mainstream.

Dark forests like newsletters and podcasts are growing areas of activity. As are other dark forests, like Slack channels, private Instagrams, invite-only message boards, text groups, Snapchat, WeChat, and on and on.

This is the atmosphere of the mainstream web today: a relentless competition for power. As this competition has grown in size and ferocity, an increasing number of the population has scurried into their dark forests to avoid the fray.

These are some of the passages that explained the idea of a retreat away from mainstream platforms. And while search isn’t mentioned specifically, I couldn’t help but think that a similar departure might be taking place.

Then Maggie Appleton made that connection with The Expanding Dark Forest and Generative AI. It’s a compelling piece with a number of insightful passages.

You thought the first page of Google was bunk before? You haven’t seen Google where SEO optimizer bros pump out billions of perfectly coherent but predictably dull informational articles for every longtail keyword combination under the sun.

We’re about to drown in a sea of pedestrian takes. An explosion of noise that will drown out any signal. Goodbye to finding original human insights or authentic connections under that pile of cruft.

Many people will say we already live in this reality. We’ve already become skilled at sifting through unhelpful piles of “optimised content” designed to gather clicks and advertising impressions.

Are people really scurrying away from the dark forest of search?

In February, Substack reported 20 million monthly active subscribers and 2 million paid subscriptions. (And boy howdy do I like the tone of that entire post!)

Before Slack was scarfed up by Salesforce it had at least 10 million DAU and was posting a 39% increase in paid customers. As it happens, I recently used a Slack channel to better research rank tracking options because search results were goog enough but ultimately unhelpful.

Discord has 150 million MAU and 4 billion daily server conversation minutes. While it began as a community supporting gamers, it’s moved well beyond that niche.

I also have a running Signal conversation with a few friends where we share the TV shows we’re watching and help each other through business and personal issues.

It’s hard to quantify the impact of these platforms. It reminds me a lot of the Dark Social piece by Alexis Madrigal. Perhaps we’ve entered an era of dark search?

But a more well documented reaction has been the practice of appending the word ‘reddit’ to search queries. All of those pieces were from 2022. Today Google is surfacing reddit far more often in search results.

I’m not mad about this, unlike many other SEOs, because I think there is a lot of authentic and valuable content on reddit. It deserves to get more oxygen. (Disclaimer: reddit is one of my clients.)

Yet, I can’t help but think that Google addressed a symptom and not the cause.

Google took the qualitative (screeds by people that wound up on Hacker News) and quantitative (prevalence of reddit as a query modifier) and came to the conclusion that people simply wanted more reddit in their results.

Really, the cause of that reddit modifier was dissatisfaction with the search results. It’s an expression of the dark forest. They were simply detailing their workaround. (It may also be a dissatisfaction with reddit’s internal search with people using Google by proxy to search reddit instead.)

Either way, at the end of the day, the main culprit is search quality. And as I have shown above and as Maggie has pointedly stated, the results aren’t great.

They’re just goog enough.

Mix Shift

If people are running away from the dark forest, who is left to provide click data to these powerful signals? The last part of Yancey’s piece says it well.

The meaning and tone of these platforms changes with who uses them. What kind of bowling alley it is depends on who goes there.

Should a significant percentage of the population abandon these spaces, that will leave nearly as many eyeballs for those who are left to influence, and limit the influence of those who departed on the larger world they still live in.

If the dark forest isn’t dangerous already, these departures might ensure it will be.

Again, Yancey is talking more about social platforms but could a shift in the type of people using search change the click data in meaningful ways? A mix shift can produce very different results.

This even happens when looking at aggregate Google Search Console data. A client will ask how search impressions can go up but average rank go down. The answer is usually a page newly ranking at the bottom of the first page for a very high volume query.

It’s not magic. It’s just math.
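Here is that math as a minimal Python sketch. It assumes average position is weighted by impressions, and every number in it is invented for illustration.

```python
def summarize(pages):
    """Return (total impressions, impression-weighted average position).

    `pages` is a list of (impressions, position) tuples, one per
    page and query pair.
    """
    total_impressions = sum(impressions for impressions, _ in pages)
    weighted_position = sum(
        impressions * position for impressions, position in pages
    )
    return total_impressions, weighted_position / total_impressions


# Before: two pages ranking well for modest-volume queries.
before = [(1_000, 2.0), (500, 3.0)]

# After: the same pages, unchanged, plus a new page at position 9
# for a very high volume query.
after = before + [(20_000, 9.0)]

for label, pages in (("before", before), ("after", after)):
    impressions, avg_position = summarize(pages)
    print(f"{label}: {impressions} impressions, average position {avg_position:.2f}")
```

Nothing got worse for the original two pages. The aggregate simply reflects a different mix.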

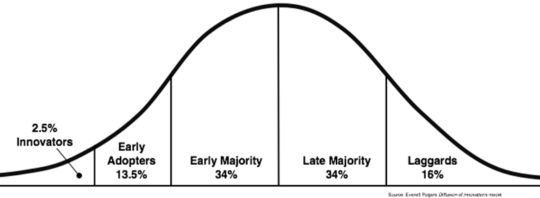

The maturity of search and these defections can be seen on the Diffusion of Innovation Curve.

Google search is well into the laggards at this point. Your grandma is searching! Google has achieved full market saturation.

In the past, when people complained about Google search quality, I felt they were outliers. They might be SEOs or technologists, both highly biased groups that often have an ax to grind. They were likely in the Innovators category.

But 20 million Substack subscribers, Discord usage, the sustained growth of DuckDuckGo and Google’s own worries over Amazon, Instagram and TikTok make it feel different this time. The defections from the dark forest aren’t isolated and likely come from both Early Adopters and Early Majority.

Google is learning from user interactions and those interactions are now generated by a different mix of individuals. The people who used Google in 2008 are different from those who use Google today.

If Google is simply a mirror, whose face are we seeing?

Information Asymmetry

Many of the examples I’m using above deal with the exploitation of information asymmetry.

Information asymmetry refers to a situation where one party in a transaction or interaction possesses more or better information compared to the other party. This disparity in knowledge can create an environment in which the party with more information can exploit their advantage to deceive or mislead the other, potentially leading to fraudulent activities.

Most users are unaware of the issues with the Forbes contributor model or how TrustPilot collects reviews and calculates ratings. It’s not that content explaining these things doesn’t exist. It does. But it is not prominently featured and is often wrapped in a healthy dose of marketing.

So users have to share some of the blame. Should Your Best Customers be Stupid? puts it rather bluntly.

Whether someone’s selling a data plan for a device, a retirement plan to a couple, or a surgical procedure to an ailing child’s parents, it’s unlikely that “smart” customers will prove equally profitable as “stupid” ones. Quite the contrary, customer and client segmentation based on “information asymmetries” and “smarts” strikes me as central to the future of most business models.

Is the current mix of search users less savvy about assessing content? Or in the context of the above, are the remaining search users stupid?

Sadly, the SEO industry is a classic example of information asymmetry. Most business owners and sites have very little idea of how search works or the difference between good and bad recommendations.

The reputation of SEOs as content goblins and spammers is due to the large number of people charging a mint for generic advice and white-label SEO tool reports with little added value.

Information asymmetry is baked into search. You search to find out information from other sources. So information asymmetry widens any time the information from those sources is manipulated or misrepresented.

Incentive Misalignment

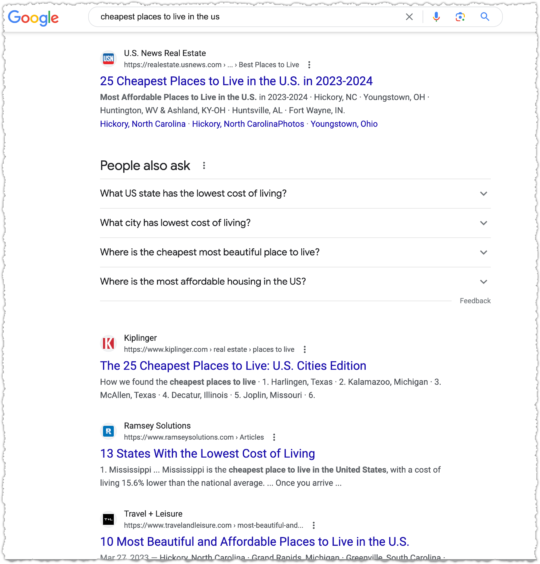

Let’s return to those divisive U.S. News College Rankings. They parallel an ecosystem in which the incentives of the parties aren’t aligned. In this instance, U.S. News wants to sell ads and colleges want to increase admissions, while prospective students are simply looking for the best information.

The problem here is that both U.S. News and colleges have economic incentives increasingly misaligned with student informational needs. While economic incentives can be aligned with informational needs, they can be compromised when the information asymmetry between them widens.

In this instance, U.S. News simply became the source of truth for college rankings and colleges worked to game those rankings. Students became reliant on one source that was increasingly gamed by colleges.

The information asymmetry grows because of the high degree of trust (perhaps misplaced) students have in both U.S. News and colleges. Unaware of the changes to information asymmetry, students continued to behave as if the incentives were still aligned.

Now go back and replace U.S. News with Google, colleges with sites (like Forbes or CNN) and students with search users.

Enshittification

Cory Doctorow has turned enshittification into a bit of an Internet meme. The premise of enshittification is that platforms are doomed to ruin their product as they continue to extract value from it.

Here is how platforms die: first, they are good to their users; then they abuse their users to make things better for their business customers; finally, they abuse those business customers to claw back all the value for themselves. Then, they die.

I think one of his better references is from the 1998 paper The Anatomy of a Search Engine by two gents you might recognize: Sergey Brin and Larry Page.

… we expect that advertising funded search engines will be inherently biased towards the advertisers and away from the needs of the consumers.

The entire section under Appendix A: Advertising and Mixed Motives essentially makes the case for the dangers of incentive misalignment. So if you don’t believe me, maybe you’ll believe the guys who created Google.

But we don’t really have to believe anything. We have data to back this up.

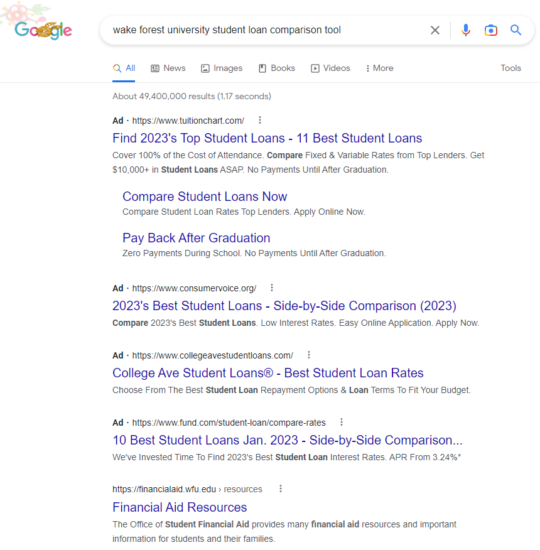

Ad Creep

There is no shortage of posts about the increasing prevalence of ads on search results.

Photo Credit: u/subject_cockroach636 via reddit

Anecdotes are easy to brush off as outliers and whiners. But, as they say, we have receipts.

In 2020, Dr. Pete Myers found that the #1 organic result started 616px down the page versus 375px in 2013. That’s a 64% increase.

But what about since 2020?

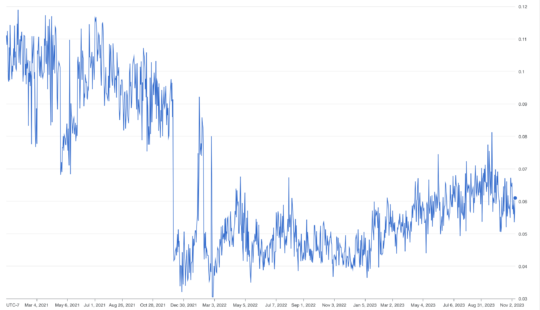

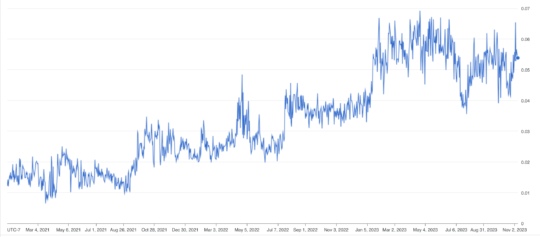

Nozzle.io shows that the percentage of space above the fold with a traditional ‘blue link’ decreased steeply at the end of 2021.

Nozzle takes the standard top of fold metric and looks at how much pixel space is dedicated to a true organic result.

It’s not always an ad as you can see above. But the fact remains that standard organic results are seeing less and less real estate, going from ~11% to ~6% today.

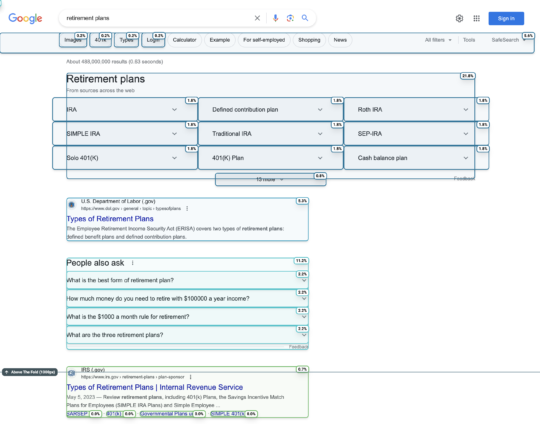

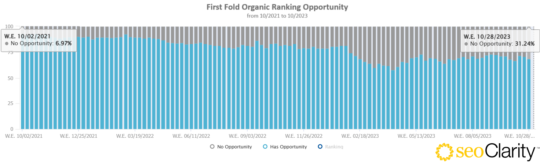

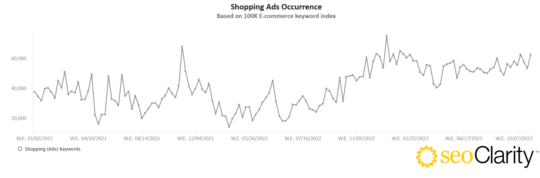

SEOClarity has a different methodology but shows an increasing lack of opportunity to rank organically above the fold.

That’s from ~7% in October of 2021 to ~31% in October of 2023.

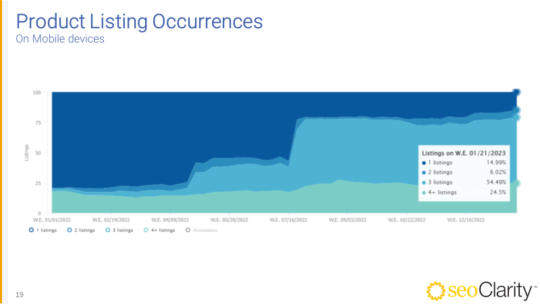

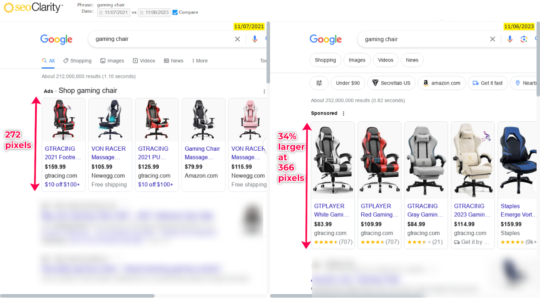

SEOClarity also shows the creep of product packs on mobile devices.

Here we see the expansion of product packs over the course of 2022, with an explosion of growth that ended with 79% of SERPs having 3+ product packs.

You encounter these as you continue to scroll through a search result. Many wind up being unpaid listings but a simple label could turn any of these into a sponsored feature. And there are so many of them on search results today.

Those product units? They’re also getting bigger. The product unit grew by 34% from 2021 to present.

Nozzle confirms the compounding nature of this issue by doing a pixel analysis of the entire SERP. It shows that products have seen a roughly sixfold increase in SERP real estate, from ~1% to ~6% today.

And in a 100K sample tracked by SEOClarity we can see that the prevalence of Shopping Ads specifically has increased.

Of course you can see the spikes for the end of the year shopping spree but this year it’s like they just kept it on full blast.

And now they’re even testing putting ads in the middle of organic search results!

This seems like a pretty classic case of enshittification and what Google’s own founders cautioned against.

Context Switching and Cognitive Strain

The straw that might have broken the camel’s back and why this post exists is the decision by Google to remove indented results from search results.

I know, crazy right?

Now, for those not in the know, when a site had two or more results for a query Google would group them together, indenting the additional results from the same site. This was a great UX convention, allowing users to sort similar information and select the best from that group before moving on to other results.

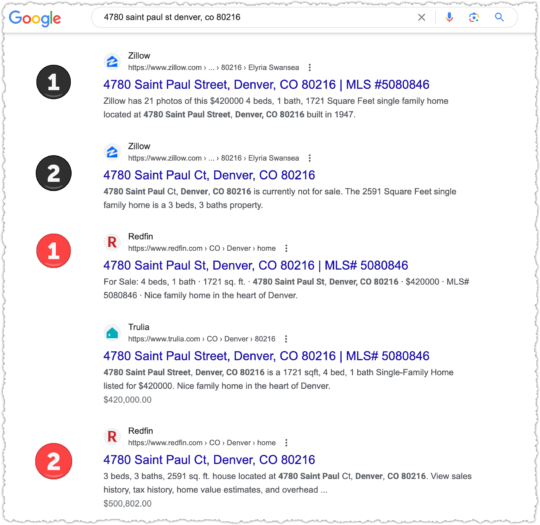

Now that indentation is gone. And it gets worse! Google isn’t even keeping results from the same site together anymore. You wind up with search results where you could encounter the same site twice or more, but not consecutively.

I guess it’s goog enough for users.

But is it really? Let’s use an offline analogy and pretend I’m making fruit salad.

I go to the store and visit the produce section. There I find each type of fruit in a separate bin. Apples in one, oranges in another so on and so forth. I can quickly and easily find the best of each fruit to include in my salad. That is the equivalent of what search results are like with indented results.

Photo Credit: Shutterstock

Without indented results? I’d go to the store and visit the produce section where I’d find one gigantic bin with all the fruit mixed together. I have to look at an apple, find it’s not what I’m looking for, then find an orange I like, then look at a pear, then find another orange that I don’t need because I already found one, then another pear, then another orange I don’t need, then a pineapple.

I’m sorry, but in no world is this a good user experience. It’s such a foreign concept I gave up getting DALL-E to render a mixed up produce aisle.

Clutter

Do search results spark joy? There is no question that search results have become cluttered. Even for something as simple as a t-shirt query.

There are 51 individual images pictured above. I understand the desire to make results more visual. But this strikes me as a kitchen sink approach that may run afoul of The Paradox of Choice. And this example is just the tip of the iceberg.

Even Google agrees and has started to sunset SERP features.

To provide a cleaner and more consistent search experience, we’re changing how some rich results types are shown in Google’s search results. In particular, we’re reducing the visibility of FAQ rich results, and limiting How-To rich results to desktop devices. This change should finish rolling out globally within the next week.

All of these UX changes have a material impact on the evaluation of interaction data. Google says it best.

Growing UX complexity makes feedback progressively harder to convert into accurate value judgments

:-(

You know what hasn’t changed? People’s affinity for brands. So if other user interaction data is becoming harder to parse given the UX complexity, does the weight of less complex user interaction data (such as Navboost) grow as a result?

Google Org Structure

Why does it all feel different now? Some of it may be structural.

Amit Singhal was the head of Google search from 2001 until 2016, when he departed under controversy. These were the formative years of Google, and this is the search engine I think of and identify with when I think of Google.

Following Singhal was John Giannandrea, who employed an AI-first approach. His tenure seemed rocky and ended quickly when Giannandrea was hired away by Apple in 2018.

Stepping in for Giannandrea was Ben Gomes, a long-time Google engineer who was more closely aligned with the approach Singhal took to search quality. I’ve seen Ben speak a few times and met him once. I found him incredibly smart yet humble and inquisitive at the same time.

But Ben’s time at the top was short. In June of 2020 Ben moved to Google Education, replaced by Prabhakar Raghavan, who had been heading up Google Ads.

Here’s where it gets interesting. When Raghavan took the role he became head of Google Search and Google Ads. It is the first time in Google’s history that one person would oversee both departments. Historically, it was a bit like the division between church and state.

That set off alarm bells in my head as well as others as detailed on Coywolf News.

Photo Credit: u/boo9817 via reddit

Even if your intentions are pure I felt it would be difficult not to give in to temptation. It’s like Girl Scout cookies. You can’t have a box of Samoas lying around the house tempting you with their toasted coconut chocolatey-caramel goodness.

Your only defense is to not have them in the house at all. (Make a donation to the Girl Scouts instead.)

But what if you were the Girl Scout leader for that troop? You’ve been working pretty hard for a long time to ensure you can sell a lot of cookies. They’re stacked in your living room. Could that make it even tougher not to indulge?

Email from Benedict Gomes (Google) to Nick Fox (Google), Re: Getting ridiculous.. (Feb. 6, 2019) (pdf) shows the concern Gomes had with search quality getting too close to the money.

I think it is good for us to aspire to query growth and to aspire to more users. But I think we are getting too involved with ads for the good of the product and company

A month later there’s B. Gomes Email to N. Fox, S. Thakur re Ads cy (Mar. 23, 2019) (pdf), which contains an unsent reply to Raghavan that generally explains how Gomes believes search and short-term ad revenue are misaligned. It’s a compelling read.

Yet, a year later Raghavan had the top job. Now this could be an ice cream and shark attacks phenomenon. But either way you slice it, we’ve gotten more ads and a more cluttered SERP under the Raghavan era.

ChatGPT

You thought you could escape a piece on the state of search without talking about generative content? C’mon!

I’m not a fan. Services like ChatGPT are autocomplete on steroids, ultimately functioning more like mansplaining as a service.

They don’t really know what they’re talking about, but they’ll confidently lecture you based on the most generic view of the topic.

What Is ChatGPT Doing … and Why Does It Work? is a long but important read, which makes it clear that you’ll always get the most probable content based on its training corpus.

The loose translation is that you’ll always get the most popular or generic version of that topic.

Make no mistake, publishers are exploring the use of generative AI to create or assist in the writing of articles. CNET was a pioneer and landed in the crosshairs for its use of generative content soon after ChatGPT launched. Fast forward to today and it’s a Gannett site found to be using AI content.

Publishers have clear economic incentives to use generative AI to scale the production of generically bland content while spending less. (Those pesky writers are expensive and frequently talk back!)

I see the flood of this content hitting the Internet as bad for Google search in two ways. First, unsophisticated users don’t understand they’re getting mediocre content. They are unaware the content ecosystem has changed, making the information asymmetry a chasm.

More dangerous, sophisticated users may be aware the content ecosystem has changed and will simply go to ChatGPT and similar interfaces for this content. If Google is full of generic results, why not get the same result without the visual clutter and advertising avalanche?

This doesn’t seem far-fetched. ChatGPT was the fastest-growing consumer application in history, reaching 100 million users two months after launch.

Groundhog Day

We’ve been here before. I wrote about this topic over 12 years ago in a piece titled Google Search Decline or Elitism?

Google could optimize for better instead of good enough. They could pick fine dining over fast food.

But is that what the ‘user’ wants?

Back then the complaints were leveled at content farms like Mahalo, Squidoo and eHow among others.

Less than a month after I wrote that piece Google did choose to optimize for better instead of goog enough by launching the Panda update. As a result, Mahalo and Squidoo no longer exist and eHow is a shadow of what it once was.

Is ChatGPT content the new Demand Media content farm?

Editorial Responsibility

A recent and rather slanted piece on the Verge did have one insight from Matt Cutts, a former Google engineer who I sorely miss, that struck a chord.

“There were so many true believers at Google in the early days,” Cutts told me. “As companies get big, it gets harder to get things done. Inevitably, people start to think about profit or quarterly numbers.” He claimed that, at least while he was there, search quality always came before financial goals, but he believes that the public underestimates how Google is shaping what they see, saying, “I deeply, deeply, deeply believe search engines are newspaper-like entities, making editorial decisions.”

The last sentence really hits home. Because even if I have the reasons for Forbes, CNN, U.S. News and others ranking wrong, they are ranking. One way or the other, that is the editorial decision Google is making today.

If my theory that Google is relying too much on brands through user preference is right, then they’re essentially abdicating that editorial decision.

They’re letting the inmates run the asylum.

Food Court Search Results

Photo Credit: Shutterstock

Any user interaction data from a system this broken will become increasingly unreliable. So it’s no surprise we’re seeing a simulacrum of content, a landscape full of mediocre content that might seem tasty but isn’t nutritional.

Search results are becoming the equivalent of a shopping mall food court. Dark forest migrants avoid the shopping mall altogether while those that remain must choose between the same fast food chains: Taco Bell, Sbarro, Panda Express, Subway and Starbucks.

You won’t get the best meal or coffee when visiting these places but it’s consistently … okay.

It’s goog enough!

From Goog Enough To Great

So how could Google address the yawning information asymmetry and incentive misalignment responsible for goog enough results? There’s no doubt they can. They have incredibly talented individuals who can tackle these issues in ways far more sophisticated than I’m about to suggest.

Refactor Interaction Signals

Google had a difficult task to combat misinformation after the last election cycle and COVID pandemic. It seems like they relied more heavily on user interaction signals and our affinity for brands to weed out bad actors.

This reminds me of Google’s Heisenberg Problem, a piece I wrote more than 13 years ago (I swear, I don’t feel that old). The TL;DR version is that the very act of measuring a system changes it.

User interaction signals are important but the value judgments made on them probably need to be refactored in light of sites exploiting brand bias.

Rollback Ad Creep

Google’s own founders thought advertising incentives would not serve the needs of the consumer.

Ben Gomes wrote that “… the best defense against query weakness is compelling user experiences that makes users want to come back.” but “Short term revenue has always taken precedence.”

Someone may not make their OKRs and ‘the street’ (which I imagine to be some zombie version of Gordon Gekko) won’t like it. But Google could fall into the Blockbuster Video trap and protect a small portion of profits at the expense of the business.

Reduce UX Clutter

Some Google features are useful. Some not so much. But sometimes the problem is the sheer volume of them on one page.

This isn’t about going back to 10 blue links, this is about developing a less overwhelming and busy page that benefits consumers and allows Google to better learn from user interactions.

Deploy Generative Content Signals

Google is demoting unhelpful content through their Helpful Content System. It’s a great start. But I don’t think Google is truly prepared for the avalanche of generative content from both low-rent SEOs and large-scale publishers.

A signal for generative content should be used in combination with other signals. Two documents with similar scores? The one with the lower generative content score would win. And you can create a threshold where a site-wide demotion is triggered if too much of the corpus has a high generative content score.
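
Here is a rough sketch of how those two ideas might fit together. To be clear, this is my own illustration and not anything Google has described. The `Doc` fields, the tie margin and both thresholds are invented numbers, and the bucketing used to decide what counts as a 'similar score' is deliberately crude.

```python
from dataclasses import dataclass


@dataclass
class Doc:
    url: str
    relevance: float         # stand-in for every other ranking signal combined
    generative_score: float  # 0.0 reads human, 1.0 reads machine-generated


def rank(docs, tie_margin=0.05):
    """Order by relevance, letting the generative score settle near-ties."""
    # Rounding relevance into buckets of width tie_margin makes similar scores
    # compare equal, so the lower generative score wins inside a bucket.
    return sorted(
        docs,
        key=lambda d: (-round(d.relevance / tie_margin), d.generative_score),
    )


def site_demoted(generative_scores, doc_threshold=0.8, site_share=0.5):
    """True if too much of a site's corpus has a high generative score."""
    if not generative_scores:
        return False
    flagged = sum(1 for s in generative_scores if s >= doc_threshold)
    return flagged / len(generative_scores) > site_share


docs = [
    Doc("b.example/generated", relevance=0.81, generative_score=0.9),
    Doc("a.example/human", relevance=0.80, generative_score=0.1),
    Doc("c.example/other", relevance=0.60, generative_score=0.2),
]
ranked = rank(docs)
print([d.url for d in ranked])
print(site_demoted([0.9, 0.85, 0.2]))
```

The generated page has the slightly higher relevance score, but because the two scores are similar the human page wins the tie. The site-wide check only trips when more than half of a site's documents cross the per-document threshold.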

Create Non-Standard Syntax Signals

Instead of looking for generative content, could Google create signals designed to identify human content? Maggie has a great section on this in her piece.

No language model will be able to keep up with the pace of weird internet lingo and memes. I expect we’ll lean into this. Using neologisms, jargon, euphemistic emoji, unusual phrases, ingroup dialects, and memes-of-the-moment will help signal your humanity.

This goes beyond looking for first person syntax and instead would look for idiosyncrasies and text flourishes that act as a sort of human fingerprint.

Improve Document Signals

It’s clear that Google is better at understanding documents today through innovations like BERT, PaLM 2 and Passage Ranking to name a few. But these are all still relatively new signals that should and need to get better over time.

The October 2023 Google Core Algorithm Update (gosh the naming conventions have gotten boring) seemed to contain a change to one of these document signals which elevated content that had multiple repetitions of the same or similar syntax.

I could suggest a few more but I think this is probably … goog enough.

Disclaimer and Notes: I consult with reddit, Pinterest, WalletHub and Everand, all sites mentioned or linked to in this piece. Auditory accompaniment while writing was limited to two studio LPs by the Chemical Brothers: No Geography and For That Beautiful Feeling. A big thank you to Mitul Gandhi and Derek Perkins who both shared data with me on very short notice.


Comments About It’s Goog Enough!

// 69 comments so far.

James Svoboda // November 08th 2023

Well done AJ! Really had overlooked how bad and bloated SERPs have gotten lately.

👏 👏 👏

AJ Kohn // November 08th 2023

Thanks so much James. Honestly, it was worse than I thought as I continued to do the due diligence.

Ammon Johns // November 08th 2023

The biggest issue with “Using neologisms, jargon, euphemistic emoji, unusual phrases, ingroup dialects, and memes-of-the-moment will help signal your humanity” is, of course, it only really fits in where query parameters already suit QDF, and it would still unfairly bias against more academic and professional language. Great for surfacing more forums and Reddit and Quora, and more of the ‘crowdsourced’ answers, but one also has to remember that the ‘crowd’ voted Trump into office, voted for Brexit, has made McDonalds the pinnacle of profitable restaurants, etc, etc.

AJ Kohn // November 08th 2023

Thanks for the comment Ammon. And you’re 100% correct.

It would need to be an editorial decision by Google to deliver the equivalent of fine dining over fast food. The question is what part should search engines play in reducing the information asymmetry?

If users find mediocre content satisfying should Google just dole out more of it? I think it’s a slippery slope, particularly since it makes ChatGPT look comparable in quality but without the avalanche of advertising.

At the end of the day they’re optimizing for the equivalent of Fast & The Furious 19: Wheelchair Drift.

Gabriella // November 08th 2023

Hey Ammon, I knew I’d find you here… Thank you for sharing your thoughts on the use of neologisms, jargon, and memes in online communication. It’s true that these elements can sometimes be more effective in specific contexts, such as online forums and social media platforms, where informal and colloquial language is the norm.

With that said, I have to admit you’ve raised an important point about the potential bias against more academic and professional language. It’s crucial to strike a balance and consider the audience and purpose of communication when employing such linguistic tools. While these techniques may indeed be great for surfacing diverse perspectives and engaging with a broader online community, Imho…it’s essential to remember that language has played a role in various significant events, as you’ve mentioned.

In conclusion, your comment underscores the complexity and nuances of language in our digital age, and it’s a reminder that effective communication should be adapted to context while respecting the diversity of voices and perspectives in our online communities. Per usual, you always seem to add depth to any discussion, thank you!

AJ – what can I say, this is brilliant and well worth the anticipation and wait!

AJ Kohn // November 08th 2023

Thank you for the kind words and comment Gabriella.

And it would all need to be balanced but I’m intrigued with syntactic and linguistic techniques that could signal human content versus generative content.

At present, I sense that Google is testing signals that boost documents that have first person syntax. That type of syntax can also be spoofed but it feels like a proxy for ‘real’ perspectives.

Victor Pan // November 08th 2023

I think it’ll be hilarious if Google released Google Classic (2000) on April Fool’s day.

But on a more serious note, could different versions of Google Search be the answer to the audience mix problem? Grandma is Googling but so is John and Jane – and I guess Johnny and Janie. My daughter (pre-teen) queries like a freak of nature but I’ve come to terms with it. She prefers images, reddit, and get this – Quora.

Every platform eventually dies out when it no longer captures the attention of the next generation.

Another angle to greatness is to double-down on Discover & query-less searches. Explore non-search Google Search user signals based on Google device/platform usage behavior (as proposed by Cindy Krum).

Check weather? Birthday gift ideas? Flowers for X. Too creepy? Let users opt-in to these query-less automations.

I’ll gladly get reminders and tips for other people’s birthdays.

AJ Kohn // November 08th 2023

Those are some great questions and ideas Victor.

I do think there are options for different versions of search. I think of it as something like ‘list view versus map view’ when looking at homes.

Or even as a type of filter on results that would be more focused on a certain type of result. Something akin to reddit’s hot, new and top filters.

It would be a transformative change to search but … maybe that’s what it takes?

And the whole Discover and query-less search thing is super interesting but … also a place where I think Google continues to struggle.

Anon // November 08th 2023

Your example with the National Council On Aging is you only saying they are good because the profile of the site might fit the category but actually they have a third party business running and monetizing that subfolder on their website.

I think the example with Forbes is that they spend a lot more on their content in those specific examples you’ve highlighted but you’re casting some issues based on a few other areas on their site where they’ve seen bad press… for a website that large.

AJ Kohn // November 08th 2023

You are correct! The specific result here is a monetized result from the charity. I wish it wasn’t. And in some ways the NCOA is drafting on their brand just like U.S. News is in that example.

But that just underscores the enormity of the problem.

Forbes? Can’t help you there.

Tom // November 08th 2023

An excellent insight into the current state of Google search – thank you for sharing.

One major change in the last few years which I find increasingly frustrating as a searcher in the UK is that Google has seemingly abandoned localised results.

I’m a keen gardener which is a mature, highly specialised industry here in the UK. We have a very specific climate – there are plants you can and cannot grow here and specialist guidelines for growing in our environment.

In the last few years, if I Google ideas for plants to grow, growing tips, product recommendations – I’m shown an overwhelming majority of sites tailored towards US readers (maybe a result of Google leaning more heavily into brand signals?).

Let’s say I’m ‘pruning tomatoes’ for example – 9/10 of the sites on page one in Google UK are written for readers in the US. But tomatoes are sub-tropical plants and I’m caring for them in the UK’s temperate climate – the guidelines differ greatly.

If I Google the ‘types of tomatoes’ I can grow using Google UK, a staggering 10/10(!) sites on page one are written for a US / Canadian audience. But the types I can successfully grow in the UK differ greatly from the types available in the varied climate of the US. I could go on and on with more examples but you see where I’m going with this…

My point is that the advice from these US sites is likely ‘goog enough’ – but it isn’t tailored to a UK audience and it certainly doesn’t make for a good user experience. It also represents a significant downgrade on the search results we had become accustomed to a few years ago.

In the same way users have begun appending ‘reddit’ to their search terms, I regularly find myself appending ‘uk’ to mine. Or I use Bing.

AJ Kohn // November 08th 2023

Thanks so much for the kind words and a very compelling example of how Google search results are goog enough.

Lately, Google seems vastly more interested in language over location when it comes to content. This started when they adopted a mobile first philosophy and understood that users no longer had a click bias toward domains with, say, a .co.uk TLD. Because you don’t see the domain on mobile results.

I still see Google understanding that certain terms deserve a response from UK content (e.g. – Piccadilly Circus). But perhaps Google isn’t taking into account location for country neutral terms such as tomato growing tips.

And without that context the sites with the best user interaction signals for that language win, forcing you to make those query modifications or seek other platforms. Really interesting! Thanks again for sharing.

Micah Fisher-Kirshner // November 08th 2023

Oh how I missed these long articles!

Clutter:

Yeah, the clutter is really getting to me these days with Google, frustrated both as a user and as an SEO (wish there was a better metric tracked on how cluttered a result “feels” going beyond just pixel depth).

———

Refactoring Signals:

I do wonder if there’s another way by balancing out the impetus to rely on brands (or people’s preference for brands) as it feels like the brand bias change was too blunt/simplistic.

Obviously that can be a problem in the other direction, for example the people voted for Clinton to be President (using the accurate outcome of what happened in our election, as the people voted for Clinton not Trump, sorry Ammon), but the electoral structure in place chose Trump instead. That as long as the right balance between majoritarian and minoritarian (demos and laws) are set, then the system could be improved so the wrong sites aren’t pulled upward and the incentives correctly aligned.

With search, rather than give Forbes carte blanche ranking power by being popular from other areas, relevance would still play a part to prevent a site from ranking where it shouldn’t. That way, the results can be less a food court of the same bland goog enough and more a downtown of great, interesting and varied choices.

—–

Ad Creep:

SGE technically would be a great way to break with that, I’m just skeptical they could escape that trap given the revenue/share holder impact. Then again, I’m expecting us to eventually hit the Star Trek computer level where search and analysis is a commodity (dumb pipe).

AJ Kohn // November 08th 2023

Well I’m glad it’s provoking such good dialogue on the issues.

The clutter is a real problem. One of those presentations from the anti-trust trial mentions that SERP features have to be designed so Google can learn from them. With the complexity of the current SERPs, I find it hard to believe their value judgments are reliable. That’s what the clutter does: it makes the original magic they relied on less viable.

I completely agree on the brand signals. I still think it’s important to have that as part of the algorithm. But we need to find a way to balance it due to the current nature of the ecosystem and the mix of users searching.

And that brings me to the SGE bit. I actually think that’s 100% the wrong way to go for Google. It doesn’t make any sense from a revenue standpoint. But beyond that, if Google is going to dish out the same fast food content that I could get on ChatGPT, then what’s the point of searching? There’s no real differentiation!

I think they need to lean back into finding the very best results.

Noah Learner // November 08th 2023

Excellent article sir. I’ve been talking/ thinking about the how few and how many pixels from the top organic results have become. Anything shopping related has turned into one organic result per page on my 27” Mac.

As I was reading your thoughts about information asymmetry I couldn’t help but think about how much that plays into us trying to actually do the work.

We know big picture what is necessary to compete in the SERPs, but the more I know/ look deeply into search data, the more I see how that data is obfuscated by Google. Take CTR. Have fun trying to figure that out due to site links. I routinely see pages with Avg position (yes I’m holding my nose as I type that) of 1 with CTR of 30% of old.

And the asymmetry comes in when we think about how few of us know that. Gone is the industry’s outrage about this.

And then based on the clutter and low CTRs businesses wrongly (or rightly) decide that they need to grow their adspend to get the traffic to keep their business goals attainable.

All the while enshittification keeps getting worse and worse.

Ugh.

Thanks again for the great piece. Love it.

AJ Kohn // November 08th 2023

I very much appreciate the comment and commiseration Noah.

And you’re spot on about our own information asymmetry when it comes to understanding Google search and its data. And sure enough Google does obfuscate, not just in how things work but what data they provide. Sometimes that is for good cause (i.e. – privacy) and other times not so much (i.e. – ranking for featured snippets).

Granted, Google has to be opaque to some degree. And I assume the complexity of SERPs is also irksome for many at Google trying to make value judgments.

I’m still rooting for the Google I knew.

Dan Thies // November 08th 2023

Holy cow I’m glad you wrote this, it’s not very often that something this long is this good. Is it longer than the alligator party story? Can I get you on my podcast?

AJ Kohn // November 08th 2023

Thanks Dan. I don’t know if the content goblin piece is longer or not. I will say that I felt better about the length of mine based on that piece.

I’ll be catching up on client work (sorry folks) and then am on vacation. But I’d be open to doing something in 2024.

Riaz Ahmad // November 08th 2023

Excellent piece! I have been observing a few of those changes you highlighted, especially the SERPs and the quality of them. Also, as one might say the brand sites or authority sites do get good results in search and I believe that is because of the user trust. At the same time, it can be dodgy as there are many grey areas in terms of understanding the Google logic behind.

Aaron Wall // November 08th 2023

Great post! I saved a lot of the same images for a post I was writing, but your torn paper look makes them feel more forceful & on point as discrete ground truth items rather than casual references.

The only bits I would add to the above in terms of the problem being fractals with layers would be…

– a lot of the publishers engaging in the AI stuff and bulk fake review spam stuff are owned by private equity players or roll up chains & are individual properties owned by a main entity. I think Detailed / ViperChill does some reports on these sorts of concentrations from time to time.

– publishers that are in Google News get some instant distribution & any sub-scale publisher which is more in-depth but slower has that as another strike against them

– blogs had a huge opportunity to disrupt publishing on a sustainable basis, but by Google consolidating the feed reading on their reader then choosing to shut down Google Reader & iGoogle (which must have cost ~ 0 to maintain) they ultimately tilted the table away from the indy publisher toward the goog enough chains. this also harmed Google itself as they could have used those as beachheads to promote Google+ and similar

– a lot of sites likely self-penalize by trying to manipulate the rank too aggressively on a new website which lacks brand awareness

AJ Kohn // November 08th 2023

Great to see your POV here Aaron. The torn paper thing is my goto setting in Snagit!

You are 100% correct about PE players implementing shelf-space strategies and Glen Allsopp is always a great read.

But I’m most interested in your point about blogs, Google Reader and iGoogle. I see this as a turning point as well.

I had another analogy in the piece that I wound up cutting that used the idea of Walmart moving into a small town. They essentially put all the independents out of business. Google Reader was like the AMEX Shop Small campaign, trying to give them a lifeline. (I see the irony of using a massive credit card issuer as the good guy, which is perhaps why I cut it.)

But it’s a frogs in a pot problem. People realized too late that they wanted something more than what was offered at Walmart. So today I think it is more difficult to crack search unless you truly understand the landscape. Very few business owners have that knowledge and good luck having them understand which SEO is going to give them the right roadmap.

Chris UK // November 08th 2023

Post of the year! Period.

Dan Gehant // November 08th 2023

Like a box of thin mints fresh out of the freezer, this was delicious & nutritious! And thanks for toiling through the process to get this out into the world. My fav line remains ~ GPT is functioning more like MaaS.

Tip of the hat to Aaron regarding review sites & PE. There are certain verticals where PE has invested heavily and owns much of the shelf space at this point (Edu, US News, et al). It’s the tip of the iceberg in a sense. And Glen’s annual report does a great job of exposing the larger holding companies which use their brand portfolios to quietly own the shelf w/o users noticing their choices are artificially limited. Note: I don’t distrust all PE. Some PE people are good people, like Micah or my neighbor Scott 😉

Many of your candid assessments bring me back to the edu space – a bit of an obsession these days. And I struggle to see how Google is not knowingly collecting coin over damage done to misinformed consumers. Puts a new spin on passing the buck…

Said another way:

US News + PE + Goog Enough = Fatberg level Enshittification (this analogy is a mess but it fits)

AJ Kohn // November 09th 2023

Thanks for the great comment Dan.

I don’t distrust PE really or blame anyone for pursuing a shelf space strategy. They’re optimizing within the current bounds of the ecosystem.

Sometimes they may even maintain a high level of content. But it does result in a type of false choice to the consumer. The information asymmetry widens. And that opens the door to fraud.

I actually wrote a piece about Acquisition SEO and Business Crowding back in 2016. Still holds up well today I think.

Lawrence Ozeh // November 09th 2023

This is one of the best things that I have read today. It reminded me of when journalists were still my heroes when it comes to literary construction and expository writing.

AJ Kohn // November 09th 2023

Thank you Lawrence. This means a lot because I really do believe good writing is important.

Jochem Vroom // November 09th 2023

Wow, a long time ago since I read every word of such a long post, this article will probably have great ‘user feedback’! =)

AJ Kohn // November 09th 2023

Thanks Jochem. That’s a bit of a heroic effort given it’s over 7000 words.

Gary // November 09th 2023

AJ your blogs should be printed into a book one day and I would buy a copy.

I have learnt so many interesting things from your various blogs over the years and this one did it again.

The part where you said: “the general idea behind Navboost is to provide a boost to documents that generate higher clicks over expected clicks (COEC).” makes me wonder – how would the Google search team manage this signal with expected CTR surely dropping by position from historical standards with so many SERP features being injected on mobile viewports?

AJ Kohn // November 09th 2023

It’s strange Gary, I had an idea a number of years ago to automate turning blogs into ebooks. It’s probably been done at this point. Either way, thank you!

And yes, the added complexity of SERPs must make any COEC type of calculation vastly more difficult. But at scale I imagine it’s a bit like multivariate testing where each combination of features delivers a specific CTR curve.
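To make the COEC idea concrete, here is a minimal sketch of how clicks over expected clicks might be computed when the expected CTR depends on both position and SERP layout. Everything in it is an assumption for illustration: the layout names, the baseline CTR numbers, and the `coec` function are hypothetical and not anything Google has published.

```python
from collections import defaultdict

# Hypothetical baseline CTR for each (layout, position) pair.
# All numbers are invented for illustration.
EXPECTED_CTR = {
    ("plain", 1): 0.30,
    ("plain", 2): 0.15,
    ("ads_plus_map", 1): 0.18,
    ("ads_plus_map", 2): 0.09,
}

def coec(impressions):
    """Return clicks over expected clicks for each document.

    impressions: iterable of (doc, layout, position, clicked) tuples.
    """
    clicks = defaultdict(int)
    expected = defaultdict(float)
    for doc, layout, position, clicked in impressions:
        clicks[doc] += int(clicked)
        # Each impression contributes the baseline CTR for its own context,
        # so a cluttered layout lowers the bar a document has to clear.
        expected[doc] += EXPECTED_CTR[(layout, position)]
    return {doc: clicks[doc] / expected[doc] for doc in clicks}

# Toy log: 10 impressions per document.
log = (
    [("a.example", "plain", 1, True)] * 3
    + [("a.example", "plain", 1, False)] * 7
    + [("b.example", "ads_plus_map", 2, True)] * 2
    + [("b.example", "ads_plus_map", 2, False)] * 8
)
scores = coec(log)
```

In this toy log the second document gets fewer raw clicks but scores higher, because its expected CTR in a crowded layout at position two is much lower. That is the sense in which each combination of features would need its own CTR curve.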

Of course they may have minimized user interaction signals with the advancements made in document understanding (e.g. BERT, PaLM) but that seems less likely given the brand bloat we have today.

Zac // November 09th 2023

Quality read. I’m 24, relatively fresh out of education and making my way in digital marketing, SEO and life. A lot of interesting information, both historical and present, which is mostly new…

One thing I’ve found is it’s just hard to come across this kind of article – Something that actually educates me. Perhaps that confirms the current state of affairs of Google & the internet.

AJ Kohn // November 09th 2023

I really appreciate this feedback Zac. I try not to be too nostalgic about the ‘old Internet’ but it does seem like Tesco has forced out small businesses.

The glimmer of hope for me is things like Substack. But if small sites or bloggers knew they had a better opportunity to get traffic again I think we might see a resurgence of content.

Joe Youngblood // November 09th 2023

Great article AJ, far beyond “Goog enough”. I agree that many SERPs are a disaster these days. However, I’ll be the contrarian in the room and say that I am 100% ok with more diversified results and fewer clustered or indented results. It’s a tiny glimmer of hope for new websites to get some kind of visibility.

AJ Kohn // November 09th 2023

Thanks Joe. I 100% agree with fewer clustered results. Host crowding has been a bugaboo for a long time.

There’s even a thread on Bird Site that spun off of this piece that deals with host crowding for ‘does sensodyne have fluoride’.

When I look at it I get four results from Sensodyne itself spread across the SERP. Fewer are needed and they need to take advice from Bowfinger and KIT.

Hasan Fransson (SEO-guru) // November 09th 2023

Nice article. Very interesting views about how Google works.

A bit too long for me though so skimmed some of the middle parts.

Will Scott // November 09th 2023

In 2008 Eric Schmidt, then CEO of Google, said to a group of magazine executives, “Brands are how you sort out the cesspool”.

Ain’t nothin’ changed.

Still pandering to magazine publishers, and still a cesspool.

AJ. Damn. Thanks!

AJ Kohn // November 09th 2023

Thanks Will. I appreciate the comment and still can’t believe I didn’t connect this up to the Schmidt quote.

Roy // November 09th 2023

A lot of effort in a great post. This should be on every SEO rotation for the next couple of weeks. In the end we, as users, have the power to accept the Google results and in the current state, it’s better than nothing for most of us.

They do live up to what Eric Schmidt already said in 2008: “Brands are the solution, not the problem. Brands are how you sort out the cesspool.”

AJ Kohn // November 09th 2023

Thanks Roy. While I didn’t touch on it in the piece, the anti-trust angle does come into play here. For most, Google is just … the default. So we wind up like frogs in a pot. We put up with a lot and don’t realize just how bad it’s gotten.

Mor Mester // November 09th 2023

+1 post of the year!

Mor Mester // November 09th 2023

FYI I couldn’t subscribe to your newsletter, it gave a 404 error.

AJ Kohn // November 09th 2023

Ah … I bet that’s still connected to the dearly departed Feedburner. You should be able to snag the RSS feed and digest it in your favorite feed reader. I prefer Feedly.

Thanks for letting me know.

Scott Hendison // November 09th 2023

Thanks AJ. I skimmed this last night when you first tweeted it, and knew it would be a hit. It wasn’t until I woke up this morning and saw all the buzz about it on Tw… X that I dug in and read the whole thing. Now I just got done going through it for the third time, and I really hope the right folks at Google read this and take some sort of action to reverse the trend or at least slow it down… (and I hope Trustpilot faces racketeering charges) Keep up the good work!

AJ Kohn // November 09th 2023

Thanks for the kind words and reply Scott.

I’m rooting for Google to make the same decision they made back in 2011 when they launched Panda to get rid of content farms. The question is whether the Google of today is the same as the one of yesteryear.

As for TrustPilot, I’m not looking for charges no matter how distasteful their strategy. I’d prefer that Google simply not allow those types of sites to consistently rank well. Problem solved.

Ann // November 09th 2023

Where is your newsletter?

I would like to subscribe.

AJ Kohn // November 09th 2023

Sorry Ann. It looks like I never found a new newsletter provider with the demise of Feedburner. You should be able to get my RSS Feed and use a reader like Feedly to get future updates.

JP // November 09th 2023

Thank you for creating a reference article we can go back to that encompasses so many aspects of the Google search experience.

As the power of brands gets bigger and bigger, the actual content matters less and less. ChatGPT is able to generate an article on any topic that would be acceptable to Google. The way things are going, it seems that down the line, content isn’t bound to matter more but less and less. What matters is who hosts that content. Content – as Google ranks it – is a commodity that can be generated at will. The latest update tried to weed out all the small to medium affiliation/advertising “review” sites and funnel all that traffic into Quora and Reddit (and they get those big sites to moderate the content for them, for free), while boosting the big brands like Forbes.

What Google would need is to be able to tell if content is actually good or not. At present it can only say whether it’s goog enough. It doesn’t look like a search engine can do that yet.

AJ Kohn // November 09th 2023

I very much like this statement JP. “What matters is who hosts that content.”

Fundamentally I think that’s one of the major problems. And while it sounds like nostalgia and a bit of de-centralized bias, I think the Internet needs a robust creator economy, not the equivalent of Walmart greeters.

Michael Cottam // November 09th 2023

Brilliant work, AJ! I especially liked the section on TrustPilot’s sales model–I was totally unaware of that.

AJ Kohn // November 09th 2023

Thanks Michael. I only know about the sales model of TrustPilot and other similar sites because of the dialogue I’ve had with clients. Sometimes I learn as much from them as they learn from me.

Nat Miletic // November 09th 2023

This is one of the best articles I have read in a while. Excellent work AJ!

Yeah, it seems that the first page real estate is getting more and more scarce. Between ads, maps, and other rich snippets, first-page results are getting pushed lower and lower.

Thankfully we have infinite scroll now, so everyone is on the first page. That’s one way to solve this.

Kidding aside though…

How big of a threat do you think ChatGPT is for Google and other search engines? I see more and more people using ChatGPT and similar tools for short and quick answers (recipes come to mind because no one wants to read a recipe blog article).

AJ Kohn // November 10th 2023

Thanks for the kind words and comment Nat.

If Google continues to deliver goog enough results, I think more people might use ChatGPT instead. And that’s because the difference in content isn’t material enough and you can get that answer without the overwhelming UX.

What is Google’s unique selling proposition then? It’s why I think they must level up in terms of content quality and UX.

So for me, I think they need to reject the generative content craze and refocus their efforts and PR toward the idea that Google is where you find better answers, not the average answer.

Adam Audette // November 09th 2023

Just commenting to hang out with all you guys.

AJ Kohn // November 10th 2023

Great to see you here Adam!

Rich Hargrave // November 10th 2023

Wow, this is one hell of an article! Great work, and very enlightening.

TJ Robertson // November 13th 2023

Loved the article. It was a fun read and hard to disagree with most of it. However, your solutions at the end feel obvious and in line with what Google has already been doing (aside from the ad creep).

“No language model will be able to keep up with the pace of weird internet lingo and memes” – Wait, what?! I’m failing to understand how anyone could believe this. What ability do you imagine humans possess in this regard that LLMs are incapable of reproducing?

Google is already working to promote content written by humans with real experience. Further demoting content because it was generated by an AI seems unnecessary. If the two are indistinguishable, why would we care?

Don’t get me wrong; I agree that LLM content is generic and unhelpful, but we’re still in year one of ChatGPT. Plenty of companies are working on methods to get the best of humans and the best of AI into their content. You aren’t suggesting that LLMs will never be able to improve the quality of content, are you?

AJ Kohn // November 13th 2023

Thanks TJ.

The issue here is the way LLMs work. They have a corpus of content they’re trained on and will choose the most probable next word again and again based on that corpus. You’ll always get the most probable (aka generic) version of something. Sure you can fiddle with prompts but it’s still not going to be able to get my reference to a rogue Buffalo Wild Wings app.
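A toy version of "choose the most probable next word again and again" can be sketched with simple word-pair counts. This is a deliberate simplification under stated assumptions: real LLMs use neural networks over tokens and usually sample with some randomness rather than always taking the top choice, and the corpus, `train_bigrams` and `greedy_generate` here are made up for illustration.

```python
from collections import Counter, defaultdict

def train_bigrams(corpus):
    """Count which word follows which across a list of sentences."""
    follows = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current, nxt in zip(words, words[1:]):
            follows[current][nxt] += 1
    return follows

def greedy_generate(follows, start, max_words=6):
    """Always append the single most frequent next word (greedy decoding)."""
    output = [start]
    # Stop at the length limit or when the last word has no known follower.
    while len(output) < max_words and follows[output[-1]]:
        output.append(follows[output[-1]].most_common(1)[0][0])
    return " ".join(output)

# Hypothetical corpus: the common phrasing outnumbers the odd one.
corpus = [
    "the best meditation apps",
    "the best meditation apps",
    "the best running shoes",
    "the rogue wings app",
]
model = train_bigrams(corpus)
text = greedy_generate(model, "the")
print(text)  # the best meditation apps
```

The rare phrasing is in the corpus, but greedy selection never surfaces it because the common continuation always wins the count.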

You say Google is already working to promote content written by humans with real experience. They do talk about that. But how do you think they do that algorithmically? It’s likely syntax driven. I just think they need to go further.

The stance Google took on being neutral about how content was generated was … a mistake. Generative content can be useful for areas that are underserved or that don’t scale. There might be interest in local school board meetings but newspapers can’t cover that in the current economic environment. So creating generative stories based on school board meeting minutes is essentially new and valuable content.

But using generative content because you’re allergic to work and want to crank out another generic post about the best meditation apps isn’t new or valuable. Google simply miscalculated how eager SEOs and digital publishers would be to use it for this purpose.

And the corpus any LLM is going to use in the future is not going to be all that and a bag of chips given how many sites are blocking usage of their content.

The future for generative content is, in my view, running it on your own private corpus and providing interesting ways for users to query that content. It could almost replace the search function on a number of sites in many ways.

I could write more but … this is goog enough for now.

Siddhesh Assawa // November 13th 2023

Hey AJ,

It’s 12 AM and I’m hooked! Really liked how you distilled these simmering thoughts in a succinct article. Loved the “Enshittification” bit combined with the vested Org structure.

Do you think we’ll soon have a G Search plus (a paid model minus the ads?) – it’s also in line with what Victor shared – different search engines for different people.

AJ Kohn // November 13th 2023

Thanks Siddhesh, though I’m impressed that you think it was succinct. I suppose it was given everything I covered.

A subscription based search engine model would be intriguing and was tried by Neeva recently. They didn’t make it, though they claim their demise was more about convincing people to try a different search engine versus the subscription model itself.

But for Google, I think it would be difficult for them to make as much through subscriptions as they do via the ad model. So I think the best we could see is a type of filtered view, perhaps as simple as a dropdown that would let people only see results from … blogs or social or news.

John // November 13th 2023

Another amazing post. Thanks for taking the time to write this… I’m glad I made the time to read it!

AJ Kohn // November 13th 2023

Thank you. I really appreciate that John.

Nils van der Knaap // November 14th 2023

Great article 🙂 I’ve never heard of RankEmbed as a machine learning system used in ranking.

“This isn’t just traditional systems, like the one I showed you earlier, but also the most cutting-edge machine learning systems, many of which we’ve announced externally– RankBrain, RankEmbed, and DeepRank.”