Earlier this year, Jon Henshaw gushed over federated social. I didn’t quite get it, but I managed to create a Mastodon account, which I summarily abandoned until Elon Musk purchased Twitter.

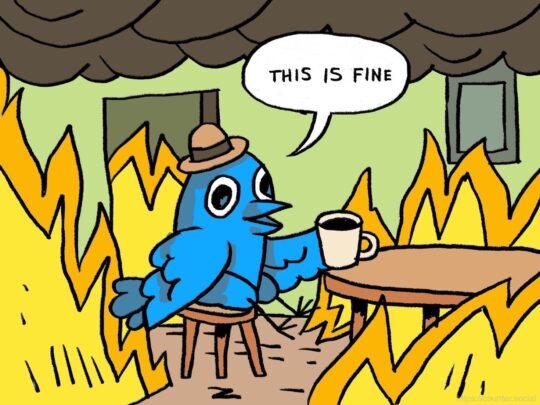

The purchase pushed me to figure out Mastodon (not as hard as you might think) and led me to realize what I’d been missing and just how corrosive current social networks had become. I’ve concluded that today’s social media is a bad batch of Soma made from Soylent Green.

Social Media

I’d usually start out with a definition to help ground the discussion. But from what I can tell, there isn’t a canonical definition of social media.

Here’s how dictionary.com defines social media:

websites and other online means of communication that are used by large groups of people to share information and to develop social and professional contacts

Then there’s Wikipedia’s definition.

interactive media technologies that facilitate the creation and sharing of information, ideas, interests, and other forms of expression through virtual communities and networks.

Mind you, they’re not that dissimilar. But the first doesn’t mention the creation of content, and the second doesn’t mention the development of contacts. In the end, I find Wikipedia’s definition more descriptive of today’s social media environment.

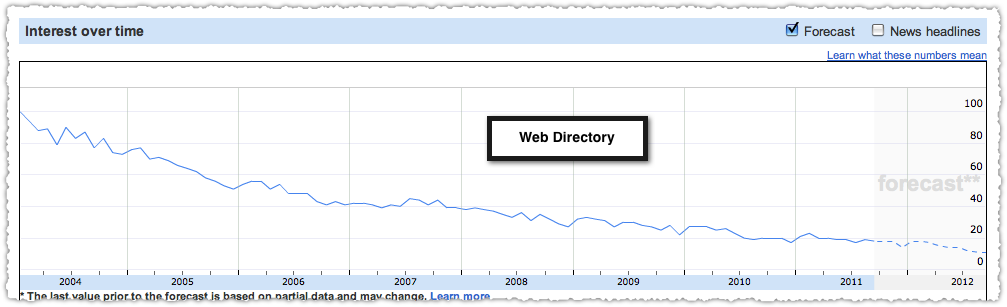

I see more and more people creating content on Twitter and LinkedIn. Twitter incentivizes this by enforcing a character limit. Yeah, yeah, you can create threads, but that’s an outlier. And all of the platforms work very hard to keep the conversation about any content on their platform.

Did we just acquiesce to the idea of digital sharecropping? Do we even think about this anymore? Bueller. Bueller. Bueller.

I also don’t see most of these platforms as helping to develop contacts. They help develop a following or a fan base. You are not developing Hank Green as a contact when you follow him on Twitter. You’re not really connecting with him, either.

Sure, LinkedIn still pushes folks to develop contacts. But I can’t be the only one who’s had a conversation go as follows:

Them: I see you’re connected to so-and-so, how do you know them?

Me: Who?

Them: so-and-so

Me: *typing name into LinkedIn search and seeing we are, indeed, connected*

Also Me: *I have no recollection of this person*

Both of these definitions make social media seem like a utopian exchange of ideas. The vaunted town square! Does this jibe with what you encounter every day? Or are you instead served up influencer content with a side helping of targeted ads based on your behavior?

Algorithms and Influencers

I still believe that Robin Dunbar had it right: the number of stable social relationships one can maintain is not that high. Technology might be able to extend the number past his estimate of 150. But it certainly doesn’t reach 1.4 million.

Follower and following numbers are meaningful only to the algorithm, allowing it to understand who might provoke a reaction and, thereby, create monetary value. This is why we have influencers. Social media isn’t really about being social anymore. It’s about people, not connections.

Sure, influencers needed content to get there, but more and more of these platforms want you to create that content on the platform, whether that’s Twitter, Instagram or TikTok. From there, the only way to profit from that system is to amass a large enough following.

Algorithms create a competitive incentive to produce content so that users can surface in topics that generate monetizable engagement. It’s a lot like going to the casino. Most people lose. A few (influencers) win. But the house (platform) always benefits.

This still wouldn’t be a problem if the content that produced engagement weren’t, for lack of a better word, toxic.

Let me say up front that Twitter was very good to me when I was building my brand. But it was a different time, and if I were to build a brand today, I think I’d lean on LinkedIn more than Twitter.

I started to use Twitter a lot less three to four years ago. Even during the pandemic, my usage stayed about the same. Part of this was because of the way I used Twitter in the first place. I used it for business because I always found Twitter to be more akin to a megaphone. It didn’t really encourage conversation. But it sure helped get your brand out to more people!

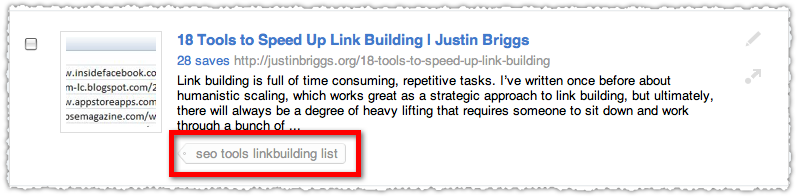

So I cultivated a presence that was about sharing the best of what I saw in my industry. I may not have made a lot of friends during that time. I frequently ignored requests to Tweet posts from colleagues. I was a content snob. But I think that made what I did share that much more valuable.

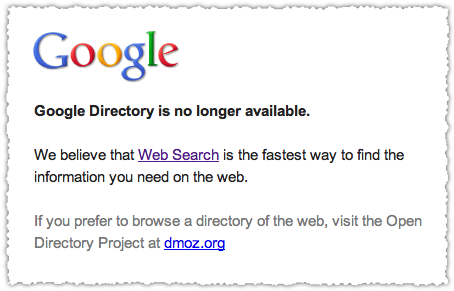

I found less and less to share over time. Granted, I had less time to read, but what I did read was, to put it nicely, not inspiring. So my Tweets dwindled to a trickle. But that doesn’t mean I wasn’t lurking.

I didn’t lurk in my industry. I find SEO squabbles to be unimaginative and dull. Instead, I’d check in on the trends, particularly during these tumultuous times. Man, is that bad for your health.

I’d see Scott Baio trending and I’d click to see what the fuss was all about. Of course he’d said something stupid. And I’d see a few zingers confirming my viewpoint. But then I’d see others defending him and, in today’s parlance, I was triggered.

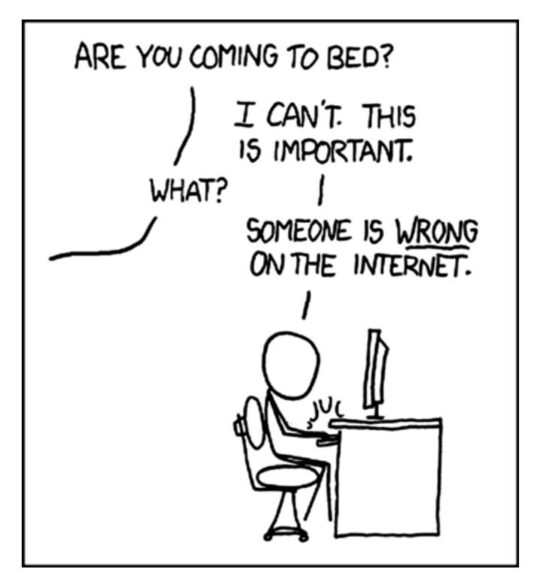

Someone is wrong on the Internet

This statement is essentially the raison d’être of Twitter. It creates a feedback loop of tribalism.

Twitter became a place to be performative. What witticism or burn could rack up the most Retweets? Twitter became social for sport.

All social media companies are piggybacking on the human desire to connect. Star Trek: Discovery explores this theme endlessly and poorly. (I mean, honestly, you’d be in the hospital with alcohol poisoning if you drank every time they mentioned ‘connection’.) But being on Mastodon, I’ve realized that I’d forgotten how satisfying it is to forge new connections.

Facebook is no angel either. You can clearly go down the same rabbit hole there as you can on Twitter. But for me, Facebook is rather tame because I use it largely to keep up with family and old classmates and to watch heartwarming videos from The Dodo. But that’s only because I’ve been aggressive about not engaging elsewhere in that ecosystem.

I may post an update about the current state of things or comment on a political thread now and then. But I liberally block people. In fact, it’s gotten to the point where I no longer engage in those posts; I simply block the people arguing in them instead.

There are other, more subtle dangers surrounding Facebook. I believe that maintaining these connections from the past often holds us back from moving forward. I am not the same person I was in high school. I’m not the same person I was in college. I’m not even the same person I was a year ago. Yet Facebook encourages us to keep interacting with the cohort of people we knew in these eras.

I’m not saying you don’t have lifelong friends that you keep up with. I’m an introvert, so I likely have fewer of them than some of you. But how many different careers have you had? I’ve been in advertising, fundraising and marketing. Should I still be trying to engage and keep up with colleagues in each of these careers?

I wonder whether Facebook has stunted people’s growth by keeping them looking backward instead of forging ahead.

Mastodon

I won’t go into the details of federated social and how Mastodon is structured. Instead, I want to talk about what it feels like and what it inspires. Using Mastodon is like going back to the early days of social.

I was a big fan of FriendFeed, a social platform that most of you probably never used or even heard of before now. It was similar to Mastodon in that it had a diverse set of people from all walks of life. And from what I recall, it didn’t really have an algorithm to present content. Instead, it was up to you to follow the people who would help bring good stuff into your feed.

I liked FriendFeed so much that I ventured out to meet the team on the Peninsula during one of their events. That was a huge step for this introvert. I felt super awkward at that event, but it was interesting to meet and chat (or witness them chatting) with Paul Buchheit and Bret Taylor.

But here’s the thing. After Facebook acquired it in 2009, FriendFeed went the way of the dodo, and it’s not lost on me that Bret Taylor wound up at Facebook and, ultimately, on Twitter’s board.

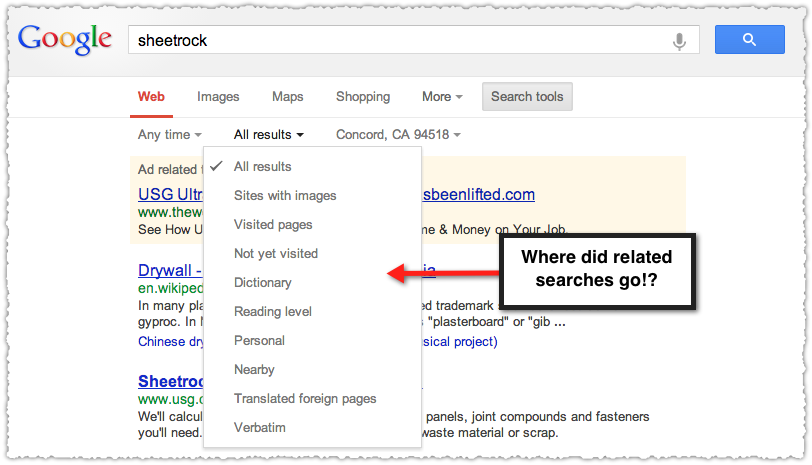

The only other social platform that felt similar was Google+. Once again, that platform worked primarily based on the quality of those you followed. I didn’t need to follow everyone in the SEO community. I just needed to follow those who would share the important posts they found.

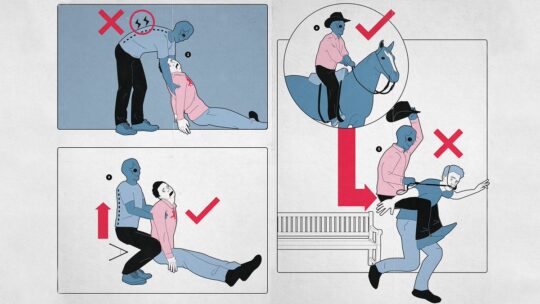

What both of those platforms had in common, in my view, was that users were in charge of configuring their own ‘algorithm’. Who you followed shaped your feed. So following became less about being nice and doling out a pellet of ego to others than about simply enhancing your own feed.

Choosing whom to follow was a very selfish endeavor. It was about me, and what I saw because of them.
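To make the idea of configuring your own ‘algorithm’ a little more concrete, here is a minimal Python sketch under a deliberately simplified, hypothetical model: a follow-based feed shows only posts from accounts you chose, newest first, while an engagement-ranked feed orders everything by reactions. The `Post` fields, the `engagement` score, the function names and the account names are all illustrative assumptions; no real platform ranks content this simply.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass
class Post:
    author: str
    created: datetime
    engagement: int  # hypothetical reaction count (likes + reposts)


def follow_feed(posts: list[Post], following: set[str]) -> list[Post]:
    """User-configured feed: only accounts you follow, newest first."""
    return sorted(
        (p for p in posts if p.author in following),
        key=lambda p: p.created,
        reverse=True,
    )


def ranked_feed(posts: list[Post]) -> list[Post]:
    """Engagement-ranked feed: every post, ordered by reactions."""
    return sorted(posts, key=lambda p: p.engagement, reverse=True)


if __name__ == "__main__":
    posts = [
        Post("octopus_painter", datetime(2022, 11, 20, 9, 0), 14),
        Post("influencer", datetime(2022, 11, 20, 10, 0), 50_000),
        Post("midway_historian", datetime(2022, 11, 20, 11, 0), 3),
    ]
    following = {"octopus_painter", "midway_historian"}
    print([p.author for p in follow_feed(posts, following)])
    # ['midway_historian', 'octopus_painter']
    print([p.author for p in ranked_feed(posts)])
    # ['influencer', 'octopus_painter', 'midway_historian']
```

Note that `follow_feed` never looks at follower counts or popularity; the only lever you have is whom you follow.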

Configuration and Ego

Two things spring from this different dynamic. First, adding configuration to any platform is going to make it more difficult to use. I’ll call it like I see it: Mastodon is more difficult. It’s easier to let an algorithm learn what you like and return relevant content based on your explicit and implicit tastes.

The second is that without an algorithm surfacing content, the number of people you follow will likely, and should, shrink. Mastodon is more about the content people share than about the people who share it.

Lately I’ve seen the idea that users are the product on social media platforms. That holds when they’re all competing to be the chosen one featured by the algorithm. But I see great content on Mastodon by following a user who has, at the time of writing, 114 followers. How many people follow them is meaningless. I follow them because they put interesting content into my feed. If they stop doing so, I’ll probably unfollow them.

Will enough people be willing to configure their feed without an ego-based algorithm fueling competition? The only platform that comes close is Reddit. In many ways, you can think of subreddits as an analog to a Mastodon server/instance, with the home feed aggregating those instances.

Reddit is still working to monetize its ecosystem. Oddly, there’s a cottage industry out there that takes Reddit threads and turns them into articles that can be monetized. It’s annoying for Reddit at the corporate level but potentially a solid signal for the community.

I say this because it means the discussions on Reddit are happening not because they produce monetizable engagement but despite the push to monetize them.

The bigger problem with Mastodon is the lack of ego. Don’t get me wrong, some of it will always exist in one form or another. We’re only human. But it is a content-first universe with no real rewards for popularity.

In fact, to get geeky, popularity could produce some problems given the way Mastodon and federated social work. A person with a large following could tax smaller servers, since the author’s server has to deliver each post to every server where a follower lives, and each of those servers then keeps its own copy of any attached media. For that reason, there’s already an admonition not to upload video.

But I digress. We’ve become inured to social media that rewards tribal content from influencers. We celebrate when we pass a milestone for number of followers or subscribers. I’m not saying I’m immune to those things. It feels good to be … validated. But I’m always on guard against producing or sharing content just to reach those metrics.

But it might be harder for others to let go of those vanity metrics. I’m not saying it’s going to be easy. I’m saying it’s going to be worth it.

Serendipity

If you can let go, I think you’ll find that Mastodon is more positive, more diverse and produces more real interaction. I won’t say it’s the best place to have a full-blown conversation yet. It might never be.

Instead, it’s like having an interesting talk with a stranger you meet on an airplane. You might not keep in touch, but you walk away feeling positive and amazed by the world we live in and how different yet alike we all are at the same time.

Instead of being force-fed content about Kanye, Kate Middleton or Kyrsten Sinema, I find information about CRISPR advances, a fascinating story about the Battle of Midway and an amazing painting of an octopus on the underside of a hotel table.

These things make me happy and also help me make disparate connections with other things in my life and work. Serendipity is a feature and not a bug.

TL;DR

Social media doesn’t have to be dictated by algorithms that reward influencers who produce divisive and monetizable content. But alternatives like Mastodon, which are more about the content people share than the people who share it, will require more work from users, both technically and personally.