I have 155 pending comments right now. The overwhelming majority of them are pingbacks from benign scrapers. Some may see this as a boon but I view these scrapers as arterial plaque that could ultimately give the Internet a heart attack.

Here’s my personal diagnosis.

The Illness

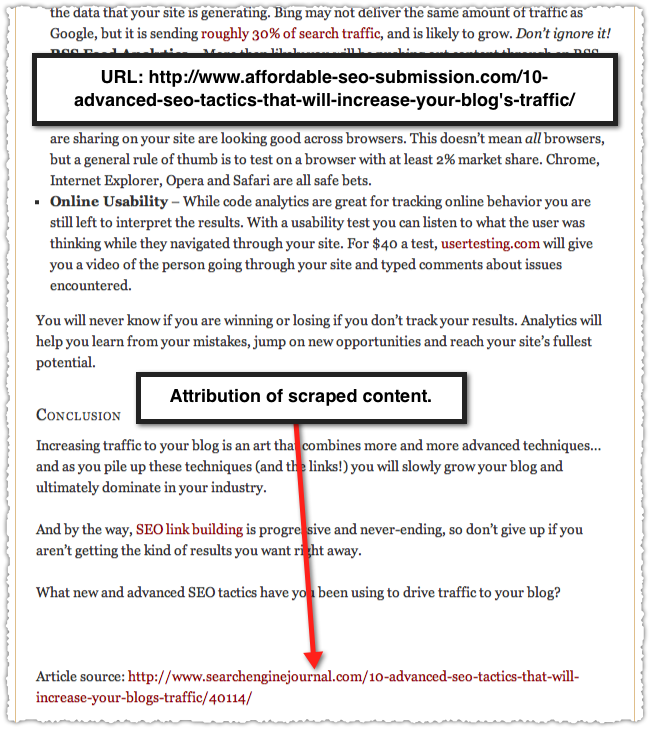

My definition of a benign scraper is a site that scrapes content but provides attribution. I’ve gotten a ton of these recently because of links I received from high-profile sites within the search community. Those sites are the target of these scrapers so my link gets carried along as part of the deal.

The attitude by most is that the practice won’t damage the scraped site and may actually provide a benefit through the additional links. Heck, Jon Cooper at Point Blank SEO even came up with a clever way to track the scrape rate of a site as a way to determine which sites might be the best candidates for guest posts.

Signs and Symptoms

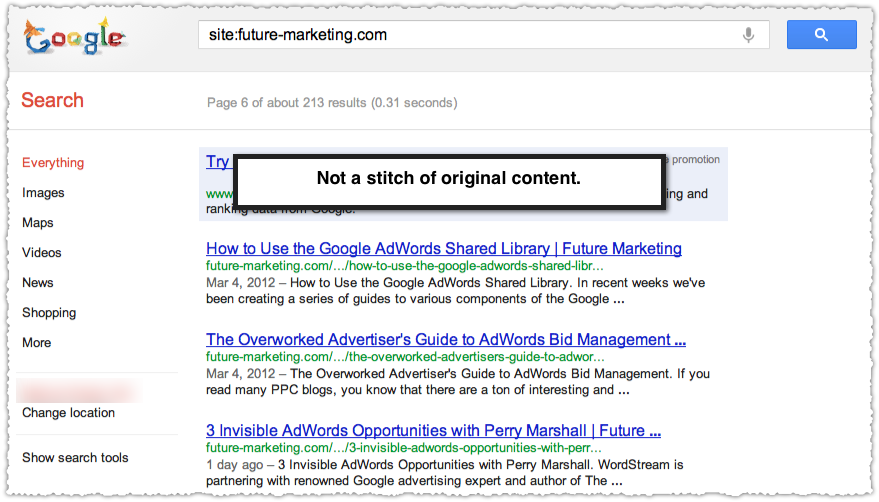

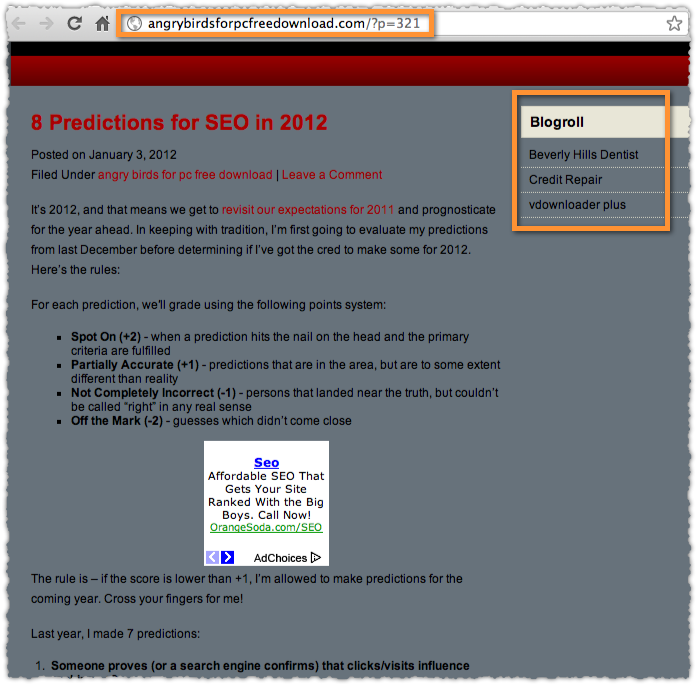

But what do these scraper sites look like? Some of these scrapers might have original content mixed in with the scraped content but in reviewing my pingbacks this seems like the exception and not the rule. Most of these benign scrapers are just pulling in content from a number of feeds and stuffing it onto the page hoping that users show up and click on ads and that the content owners don’t take exception.

“Hey, I gave you a link, so we’re cool, right bro?”

No bro, we’re not cool.

This stuff is garbage. It’s content pollution. It is the arterial plaque of the Internet.

The Doctor

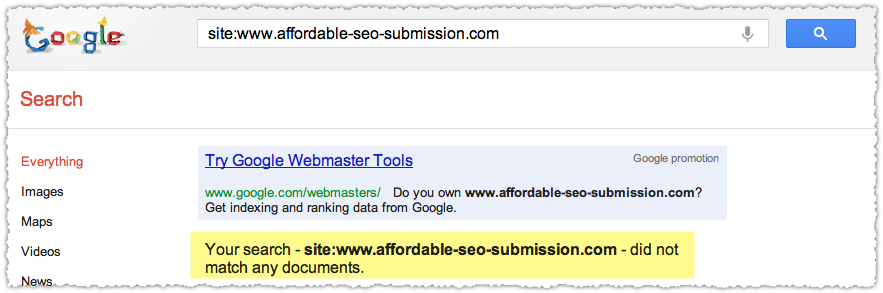

Google is trying to keep up and often removes this dreck from the index.

But for every one that Google removes there’s another that persists.

How long until the build up of this arterial plaque gives the Internet a heart attack? One day we’ll wake up and the garbage will be piled high like a horrifying episode of Hoarders.

Support Groups?

The industry attitude toward these scrapers is essentially a tacit endorsement. It brings to mind the quote attributed to Edmund Burke.

All that is necessary for the triumph of evil is that good men do nothing.

We turn a blind eye and whistle past the graveyard happily trusting that Google will sort it all out. They’ll make sure that the original content is returned instead of the scraped content. That’s a lot of faith to put in Google, particularly as they struggle to keep up with the increasing pace of digital content.

Are we really this desperate for links?

Yet, we whine about how SEO is viewed by those outside of the industry. And we’ll whine again when Google gets a search result wrong and shows a scraper above the original content. Indignant blog posts will be written.

Treatment

Even if we wanted to, we have few tools at our disposal to tell Google about these sites. The tools we do have are onerous and inefficient.

It doesn’t have to be that way.

Why not build a Chrome extension that lets me flag and report scraper sites? Or a WordPress Plugin that lets me mark and report a site as a scraper directly within the comment interface. Or how about a section in Google Webmaster Tools where I can review links?

Sure, there are reporting issues and biases but those are solvable problems. Thing is, many doctors have a God complex. Google may not think we’re able to contribute to the diagnosis. That would be a mistake.

Cure?

Maybe we don’t want to be cured. Perhaps we’re all willing to let this junk persist, willing to smile as your mom finds one of these sites when she’s looking for that article you wrote. Willing to believe that your brand is totally safe when it appears on these sites. But the rest of the world isn’t nearly as savvy as you think.

I know many of these links work, but they shouldn’t. The fact that they do worries me. Because, over time, people might not be able to tell the difference and that’s not the Internet I want.

Today these scrapers are benign but tomorrow they could turn malignant.

Comments About No Such Thing As A Good Scraper

// 19 comments so far.

Micah // March 14th 2012

Let’s flip this around with a fun question: Would you say that part (minimal perhaps) of the problem is Google always trying to show 10 results for a given long-tail phrase when there is only one or few articles that match?

Example: I’m looking for your article on “Rich Snippets Testing Tool Bookmarklet” and come to this result set: http://www.google.com/search?q=%22Rich+Snippets+Testing+Tool+Bookmarklet%22&pws=0&hl=all&num=10

Obviously not trying to say that there aren’t any posts that would be worthwhile follow-ups talking about your original topic, but if you’re trying to determine what content of a page is good vs. bad… well, if the HuffPo can rank for copying a Twitter comment…

AJ Kohn // March 15th 2012

That’s an interesting idea Micah. Could Google be letting some of this go because they feel a need to have enough content to support a full page of results?

I hope the answer is no. Because looking through all of those results there just isn’t much ‘there’ there. How many different ways do we need to get to the same content? A lot of this isn’t scraped content per se but simply shared on various different platforms. It’s a demonstration of the digital wake of social media. (I just made up that term digital wake. I kind of like it.)

Micah // March 15th 2012

Letting or Not able to?

Letting: people who aren’t Internet savvy may think a single result looks bad and switch to Bing. So you annoy the savvy ones instead, since that does less harm as long as people don’t complain too loudly.

Not able to: First past the post, as they cannot tell if the content is referencing or ‘scraping’ (to use as a negative term here). Deciding at what point a long-tail search should show only one site (e.g. HuffPo on a phrase that gets popular, “arrow to the knee”) can be technically impossible.

Both: You want to avoid a situation where you take out quality viewpoints on a similar topic–you also want to avoid a “he said, she said” layout where you make it seem as if it’s a 50/50 view (eg: science areas). Or maybe Google can’t tell since the ability to determine authority is too complex currently.

AJ Kohn // March 18th 2012

Micah,

You do have to wonder whether Google looked at the behavior on Googlewhacks and other small-set search results and found that user satisfaction was low. Many do seem to think more is better and often judge a result as ‘good’ by looking at the total number of results reported right under the search box for a query.

Can Google determine what content in the digital wake is meaningful? I’m fairly certain Google can compare text from each and make some determination based on the difference. If there’s only 3% difference in text, how much value is that replicated content providing?
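To make that "3% difference" idea concrete, here is a minimal sketch of one common way to compare two texts: break each into overlapping word sequences ("shingles") and measure how many they share (Jaccard similarity). This is an illustration only. The function names, the shingle size and the sample sentences are assumptions, and nothing here is meant to describe how Google actually measures duplication.

```python
def shingles(text, size=3):
    """Return the set of overlapping word n-grams ("shingles") in text."""
    words = text.lower().split()
    if len(words) < size:
        # Very short texts are treated as a single shingle (or none if empty).
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + size]) for i in range(len(words) - size + 1)}


def jaccard_similarity(a, b, size=3):
    """Share of shingles the two texts have in common, from 0.0 to 1.0."""
    sa, sb = shingles(a, size), shingles(b, size)
    if not sa and not sb:
        return 1.0  # two empty texts are trivially identical
    return len(sa & sb) / len(sa | sb)


# Hypothetical sample texts, made up for this example.
original = "scrapers copy content from feeds and stuff it onto a page with ads"
scraped = "scrapers copy content from feeds and stuff it onto a page with ads"
reworked = "scrapers copy content from feeds and publish it next to original commentary"

print(jaccard_similarity(original, scraped))   # verbatim copy
print(jaccard_similarity(original, reworked))  # partially rewritten
```

A verbatim copy scores 1.0, while a page that adds its own commentary scores lower; a "3% difference" would correspond to a score close to, but not exactly, 1.0.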

One of the reasons why HuffPo continues to win at this game is user generated content in the form of comments. Even if the article text is essentially identical the content corpus on a HuffPo page winds up dwarfing that of the original. Those comments then become meta content which can drive tremendous value (sometimes).

The site which has the highest engagement has a big advantage and should be returned (just below in my view) with the original.

Micah // March 18th 2012

AJ,

Why would user satisfaction be necessarily low? People find it, get a laugh, and maybe even share it. Sounds like a great user satisfaction instead to me.

Why would 3%, much less 0%, be enough to determine? That’s the whole point as to why it’s an issue with duplicate content (internal or external) for bots. If it’s first to post, then slower sites that push information to Googlebot will always lose. If you use other factors, then brand sites (generally) implicitly win. So, how do they define original? Not all sites link back to the original (whether small sites, scrapers, or major news outlets).

Re-look at Wall’s article here: http://www.seobook.com/huffington-post . The example given had 0 (zero) comments. In this case, what’s higher engagement? How do you compare engagement on a news site with engagement on a smaller brand site? Would a niche site on said topic be more appropriate or CNN? What if CNN has better engagement, but is incorrect?

Now, throw in Panda/site-wide penalties and you get scrapers or brands that can take duplicate content from these penalized domains, and as long as they are less penalized, could conceivably rank higher even at 0% difference.

I guess my roundabout point in the end is that people often over-estimate the functionality of Google’s algorithm. It’s not easy, and it’s only getting harder when you throw in site-wide penalties rather than just page-level ones.

AJ Kohn // March 19th 2012

Micah,

I have no idea whether user satisfaction is low on short-set results, but offered it as a possibility based on your last comment.

While I agree that many over-estimate Google’s ability, they have gotten much better at identifying source content post-Caffeine using the discovery date. Authorship is another attempt at establishing content ownership.

The HuffPo example using a Tweet is a red herring in my view. Twitter does an abysmal job at SEO so it’s no surprise that HuffPo could outrank Twitter, particularly at a time when that topic was returning QDF results.

The idea that a site who is in Panda Jail could be outranked by a scraper (who is not) is an interesting one. I’d like to find examples of that.

Micah // March 19th 2012

AJ,

Point taken on the UX note.

I agree that it got better post-Caffeine, but for whatever reason, got worse through Panda. I’ve seen various examples of scraped content ranking for no reason other than being an authority site (“singing” is my favorite example).

While I agree that QDF is more than appropriate as a counter, the point of doing a poor SEO job shouldn’t matter (hence not a red herring) as it is about the original content, not how well optimized the site is. If original content is going to be dictated by SEO (excluding blocking the content), then in the end, the best SEO wins regardless of whose content it is–which is exactly the current issue with scrapers who can usually optimize faster/better than smaller sites/blogs can.

AJ Kohn // March 19th 2012

Micah,

You’ll have to provide some specifics on your ‘singing’ example since I’m not sure what you’re referencing.

The level of SEO shouldn’t matter in relation to identifying original content. And usually the original content should outrank the scraped or repurposed content.

However, if there is more value produced through the comments and conversation that takes place on another site, then the order could be reversed. The question becomes whether it’s the additional content (which then makes it different on the document level than the original) that pushes it over the top or if it’s the ‘brand’.

Now the scrapers I identified have none of this. There’s no brand and they have no engagement on those items.

Micah // March 19th 2012

AJ,

Search ‘singing’ and compare Wikipedia & Facebook. You’ll notice immediately what I’m referencing.

How do you know that the scrapers have none of this? I’m not trying to be facetious, but rather, what data could you say (as a bot) to determine that’s the case? Do you know those scrapers aren’t emulating a brand (or vice versa, does the original content have enough info to be seen as a brand)? Do you know that there is no engagement on the site (ie: Do regular users–not us, know or care about the difference)?

AJ Kohn // March 20th 2012

Micah,

You’ll need to drop in a screencap since my search for ‘singing’ does not include a result from Facebook.

As to your questions on these scrapers, yes, I do know this. They are not emulating brands nor do they have any engagement. Mediadeve.com is but one easy example of this.

Micah // March 20th 2012

AJ,

http://en.wikipedia.org/wiki/Singing vs. http://www.facebook.com/pages/Singing/109468752412938

Once again, beyond the obvious of no comments (and few corporate sites get blog comments, just as they get little social interaction), how do you actually know? I don’t mean a gut check as a human, but as a bot. We have to disassociate ourselves from what we can easily see and think about how a bot should be able to find that and note it is a junk site. That’s where the issues become complicated.

Just as you mention with your parents reading these sites, there could be a great amount of time spent reading the articles by those generally naive about the Internet. And since Google’s algorithm is usually focused on a page-level, it would have to go back and apply additional values to mark it downwards or penalize it if they were to do more on a domain level (whole other set of problems)–yet at the same time recognize that new sites may start with that kind of layout or ‘brokenness’.

AJ Kohn // March 21st 2012

Micah,

Okay, I see the use of the Wikipedia content on the Facebook page. It’s certainly using that content and could be construed as scraping, but the Facebook page is actually just excerpting that page and providing attribution. I see this as different from scraping.

As a bot the scrapers we’re discussing are not wholly difficult to identify. In fact, in doing this analysis I found that Google has caught up to nearly half of the ones I looked at and that was just in the span of a few months. The first part of the equation is simply identifying that the content is the same on two different pages. There are a number of methods Google could (and does) use for this purpose. Once it identifies a page or cluster of pages with the same content you can use a combination of discovery date and link graph signals (among others) to determine the original content.
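As a rough illustration of that last step, the sketch below picks a likely original from a cluster of pages already known to share the same content. It assumes a hypothetical `Page` record with a discovery date and an inbound link count standing in for "link graph signals"; the tie-breaking rule and the example URLs are invented for the example, and the real signals and their weighting are not public.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Page:
    url: str
    first_seen: date     # hypothetical "discovery date" for the page
    inbound_links: int   # hypothetical stand-in for link graph signals


def pick_original(cluster):
    """Prefer the earliest-discovered page; break ties by more inbound links."""
    return min(cluster, key=lambda p: (p.first_seen, -p.inbound_links))


# Made-up cluster of pages carrying the same content.
cluster = [
    Page("http://scraper.example/copied-post", date(2012, 3, 16), 0),
    Page("http://original.example/post", date(2012, 3, 14), 12),
    Page("http://aggregator.example/post", date(2012, 3, 14), 3),
]

print(pick_original(cluster).url)
```

Here two pages were discovered on the same day, so the link count decides between them. That is also where this kind of rule gets shaky: a small site that is crawled late and has few links would lose under exactly the same logic.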

Here’s a relevant post regarding this type of cross-domain URL selection.

A piece of content can often be reached via several URLs, not all of which may be on the same domain. A common example we’ve talked about over the years is having the same content available on more than one URL, an issue known as duplicate content. When we discover a group of pages with duplicate content, Google uses algorithms to select one representative URL for that content. A group of pages may contain URLs from the same site or from different sites. When the representative URL is selected from a group with different sites the selection is called a cross-domain URL selection. To take a simple example, if the group of URLs contains one URL from a.com and one URL from b.com and our algorithms select the URL from b.com, the a.com URL may no longer be shown in our search results and may see a drop in search-referred traffic.

When the same domain is on the losing end of a cross-domain URL selection that’s a pretty strong signal that can be used and refined to remove scrapers from the landscape. Because you’re right, many non-savvy users wouldn’t recognize that the content is scraped or that they’ve found themselves in a rather dank part of the Internet. That’s why Google needs to do even more to quickly identify and remove these from the index.
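The "losing end" signal can be sketched as a simple tally. The code below assumes a hypothetical log of selections, each one a chosen URL plus the other URLs in its duplicate cluster, and counts how many clusters each domain lost. The data shape, the function name and the threshold are all assumptions made for illustration.

```python
from collections import Counter
from urllib.parse import urlparse


def repeat_losers(selections, min_losses=2):
    """Return domains that lost at least `min_losses` duplicate clusters.

    `selections` is a list of (chosen_url, other_urls) pairs, one per cluster.
    """
    losses = Counter()
    for chosen_url, other_urls in selections:
        winner = urlparse(chosen_url).netloc
        # Count each losing domain once per cluster, however many URLs it has there.
        losers = {urlparse(u).netloc for u in other_urls} - {winner}
        losses.update(losers)
    return [domain for domain, n in losses.most_common() if n >= min_losses]


# Made-up selection log.
selections = [
    ("http://a.example/post-1", ["http://scraper.example/p1", "http://b.example/copy"]),
    ("http://b.example/post-2", ["http://scraper.example/p2"]),
    ("http://c.example/post-3", ["http://scraper.example/p3",
                                 "http://scraper.example/p3?ref=feed"]),
]

print(repeat_losers(selections))
```

In this sample only `scraper.example` loses repeatedly; `b.example` loses once and wins once, so it stays below the threshold.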

My hope is that they allow others to help inform that process so the speed at which this plaque is removed accelerates.

Micah // March 21st 2012

Oh, I don’t disagree about a different form of scraping, but it’s these things we have to keep in mind for bots to differentiate between good and bad scraping; between sites that are legitimate but also have a bad scraping section; between a bad scraping section and some useful content.

This is where domain level penalties can be hazardous as you go for a shotgun approach: Kill sites that are bad even if good content is on them in the hopes that good sites don’t create junk to fill in the gaps and that other bad sites don’t come up in turn.

Discovery date and link graph work well if you’re first to market and getting links; the smaller you are, the harder it will be and the more those problems arise.

I’m generally negative about allowing others to help for the following reasons:

-Biased group of people (basically marketers or techies)

-Once people know you can do this, spammers join in and kill legitimate sites

-Once you’re penalized, you won’t get looked at again.

This is likely why they don’t use the block function anymore, it creates a strong incentive to nuke other people’s websites.

AJ Kohn // March 25th 2012

I agree Micah. I don’t like the fact that Panda is applied at the domain level. Soon after, I wrote about how Panda treats lousy content the same as great content. While I think search results got marginally better, the overall effect was that they became different.

You’re also right that smaller sites or blogs will encounter more problems. However, to date I haven’t seen many scrapers target these sites. Frankly, a smarter scraper would look for high-quality but small blogs and try to do this. It’s a scary idea.

I was generally negative about the use of the block functionality as a signal, in part because it was biased to the Internati. However, with identity attached Google could normalize the contributions and throw out the ones that are clearly biased or submitted with malicious intent. (Heck, if StumbleUpon can figure out that I submitted my own site too often I know Google can crack this type of problem.) Now, the caveat here is that it won’t be perfect. There will be a few false-positives. But I think the net result would be faster and more comprehensive identification of scrapers.
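Here is a minimal sketch of what normalizing those contributions might look like, assuming every report carries a reporter identity. Each reporter counts once per site no matter how often they report it, and reporters on a distrust list are ignored. How that list would be built is the hard part and is not modeled here; the names and data are hypothetical.

```python
from collections import defaultdict


def scraper_scores(reports, distrusted=frozenset()):
    """Map each reported domain to its number of distinct trusted reporters.

    `reports` is an iterable of (reporter_id, domain) pairs.
    """
    reporters_by_domain = defaultdict(set)
    for reporter, domain in reports:
        if reporter in distrusted:
            continue  # drop reports from identities judged biased or malicious
        reporters_by_domain[domain].add(reporter)  # repeat reports collapse to one
    return {domain: len(ids) for domain, ids in reporters_by_domain.items()}


# Made-up reports: "mallory" repeatedly reports a rival site.
reports = [
    ("alice", "scraper.example"),
    ("alice", "scraper.example"),
    ("bob", "scraper.example"),
    ("mallory", "rival.example"),
    ("mallory", "rival.example"),
    ("carol", "rival.example"),
]

print(scraper_scores(reports, distrusted={"mallory"}))
```

Counting distinct identities instead of raw reports is what blunts the "nuke a competitor" incentive in this toy version: one account filing many reports adds nothing.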

Micah // March 26th 2012

P1 & P2 agreed.

P3: If Google can get this to be around technorati and not /marketers/ then they might have something (hence the original functionality around links). But if identity is attached via marketers instead, then this won’t be a normalization of contributions, but a bias of SEOs (whether white or black hat). I’d say SU can figure it out b/c there’s not enough money (at scale) to really go after them (similar to Apple and virii)–but for Google it makes economic sense and means a continual battle unfortunately.

Jon Marcos // March 27th 2012

I am not entirely against junk, scraped content. Sometimes the original source host gets taken down or deleted, and we would otherwise not be able to access that information if it wasn’t scraped. I would love Google to give more value to original posters, but if the article gives attribution, I think Google should still index it.

Kaj Kandler // April 05th 2012

Isn’t Google+ Authorship verification the means to know original from copy? See ‘Is Google Authorship the end of scraper sites’ for my thoughts.

AJ Kohn // April 06th 2012

Kaj,

Yes, it’s a very strong signal and should provide additional fidelity to reducing scraped content and outright plagiarism.

Craig S. Kiessling // May 22nd 2012

Excellent topics – both in the post and in the comments. I’ve often wondered/worried about scrapers, social shares, canonical, authorship, etc. and how it would all play out.
