Are banner ads dying?

I remember people shouting about this during the Web 1.0 heyday, and plenty of folks have cried wolf on the topic since. Each time, banner ads come back from the proverbial dead, walking the Internet with zombie-like efficiency. Is this time any different?

Maybe.

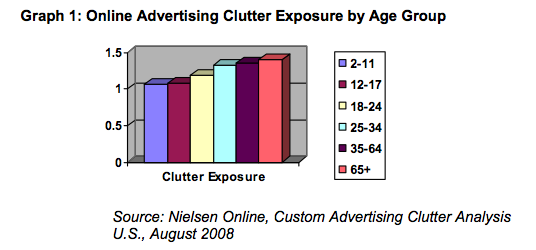

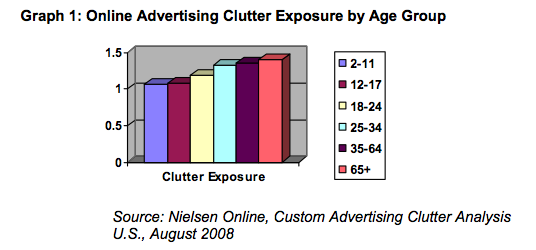

More and more research indicates that young users are not responding to banner advertising. But can we blame them? We’re entering an era in which a whole generation has grown up with the Internet. At an increasingly young age children begin to surf the Internet. Yet, the sites they visit often have far less clutter and advertising than the traditional site.

Are we creating a generation of banner-intolerant Internet users?

Those of us, myself included, who came to the Internet as young adults have been exposed to a higher degree of ad clutter from the start. We’re used to it. Not only that, our context for approaching the Internet was shaped through television. Television conditioned us to expect advertising to be part of the equation.

Yet, again, this is not the case for a new generation that has grown up with TiVo and other DVR products. These services have undermined normal TV-watching patterns and preconceptions. Every day more of us are conditioned to simply hit the 30-second skip button when presented with a commercial. (As an aside, thank you to Fringe for telling me how many seconds the break is going to be. That’s very handy!)

These habits are particularly important since new research indicates that the heaviest TV viewers are also the heaviest Internet users.

Are social networks part of the problem?

Yes.

Social networking is one of the largest activities (if not the largest) for the young. Sites like Piczo, MySpace and Facebook, among others, are like the middle school and high school of the Internet. These sites are, at their core, utilitarian in nature. They’re about communicating. They’re about making ‘friends’.

Some of them do use banner ads, but more and more evidence shows a growing banner blindness on these sites. I suspect the high number of visits (for a very specific purpose) exacerbates banner blindness on social networks.

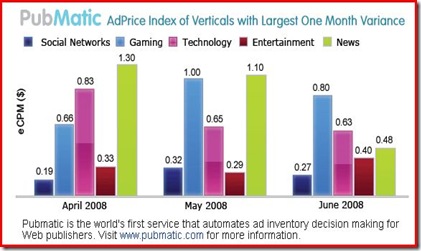

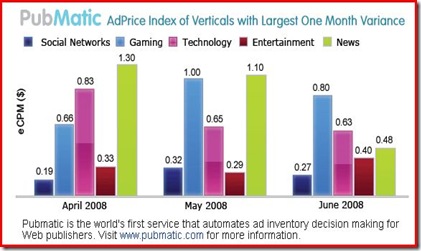

If you use CPM rates as a proxy for effectiveness, then it becomes obvious that social networks are in distress.

Can we blame Twitter?

Yes.

Twitter and even my current addiction, FriendFeed, contribute to the problem by not using banner ads. They are but one more site, one more application, one more widget that is providing a valued service for … nothing. Clearly advertising isn’t the only way to monetize these sites, but by not implementing any monetization strategy they send a signal to users that they can get something for nothing.

The ‘something for nothing’ mentality makes us less tolerant of advertising. I can surf the Internet with Firefox, supercharge my blog with WordPress plugins and use Google Analytics to track metrics for a slew of client sites all … for not one penny.

This isn’t a long-term problem. A type of Internet Darwinism will take place, in which those without a true business (aka revenue) model will either fail or try to implement some sort of real revenue strategy. In most instances, that will be advertising or subscriptions.

Will it be advertising or subscriptions?

Just before the collapse of Web 1.0, I worked at Bluelight.com, the online version of Kmart. One of its major initiatives was an ad-supported free ISP. We had millions of users! The thing is, it didn’t really work. They wound up converting it to a subscription-based service, which was ultimately acquired by United Online.

Today, we’re conditioning a generation to ignore banner and display advertising. The cat is out of the bag. The genie is out of the bottle. So even if we want to return to it as a revenue stream, it is becoming an ever weaker medium. And we only have ourselves to blame.

So perhaps subscription-based sites or networks are the wave of the future. Would you pay for Twitter?

Will banner ads die?

Of course not. Banners will never completely die, but a few things will have to happen for them to rise again to feast on the glorious eyeballs of Internet users.

They’ll need to be more engaging and use rich media in more appropriate ways. More importantly, the industry will need to measure banner ads not by CTR or traditional click-based ROI but by brand measurement metrics. A few companies provide this service, though my favorite is Vizu Ad Catalyst. (Disclosure: I worked at Vizu for a short time, but I stand by the view that they are leaders in this new field.)

The current economic climate has forced many analysts to adjust their online advertising industry forecasts, some of them twice. Yet, I’m unsure any of them are accounting for this new generation of Internet users who are blind to banners at best and intolerant of them at worst.

