Amazon Mechanical Turk is a great way to get a wide variety of tasks done, from content creation to image tagging to usability testing. Here are 15 tips to get the most out of Mechanical Turk.

Learn The Lingo

What’s a HIT? Mechanical Turk can be a bit confusing at first glance. In particular, you’ll need to understand this one important acronym.

A HIT is a Human Intelligence Task: the work you’re asking workers to perform. The term refers both to the specific task you’re asking them to do and to the actual job listing you post in the community.

Select A+ Workers

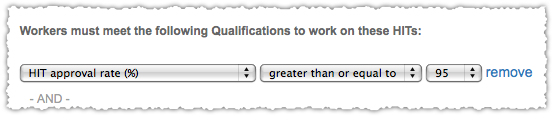

The long and the short of it is that reputation matters, and past performance is a good indicator of future performance. Limit your HITs to workers with an approval rate of at least 95%.

It may shrink your pool of workers and could increase the time to completion, but you make up for it in QA savings.
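If you post HITs through the API instead of the web interface, the 95% floor is expressed as a qualification requirement. Here is a minimal sketch, assuming the boto3 `mturk` client: the dict would go into the `QualificationRequirements` list passed to `create_hit`. The helper name `approval_rate_requirement` is hypothetical, and the dict is built without calling AWS so it can be inspected first.

```python
# System qualification type ID that MTurk uses for a worker's
# percentage of approved assignments (Worker_PercentAssignmentsApproved).
PERCENT_APPROVED_QUAL_ID = "000000000000000000L0"


def approval_rate_requirement(min_percent: int = 95) -> dict:
    """Build one entry for the QualificationRequirements list of create_hit."""
    if not 0 <= min_percent <= 100:
        raise ValueError("min_percent must be between 0 and 100")
    return {
        "QualificationTypeId": PERCENT_APPROVED_QUAL_ID,
        "Comparator": "GreaterThanOrEqualTo",
        "IntegerValues": [min_percent],
        # Workers below the threshold can still find and preview the HIT,
        # but they cannot accept it.
        "ActionsGuarded": "Accept",
    }


requirements = [approval_rate_requirement(95)]
print(requirements[0]["IntegerValues"])  # [95]
```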

Segment Your Workers

Match the right workers to the right task. In my experience, you get better results from US-based workers on anything that requires writing or transcription. Conversely, international workers often excel at tasks such as data validation and duplicate detection.
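Through the API, segmenting by location uses MTurk’s built-in locale qualification. A minimal sketch, again assuming the boto3 `mturk` client’s `QualificationRequirements` format; the helper `locale_requirement` is hypothetical, and which countries suit which task is your call, not something the API decides.

```python
from typing import Iterable

# System qualification type ID for a worker's locale (Worker_Locale).
LOCALE_QUAL_ID = "00000000000000000071"


def locale_requirement(countries: Iterable[str], include: bool = True) -> dict:
    """Restrict a HIT to (or exclude) workers in the given ISO country codes."""
    return {
        "QualificationTypeId": LOCALE_QUAL_ID,
        "Comparator": "In" if include else "NotIn",
        "LocaleValues": [{"Country": code} for code in countries],
    }


# Writing or transcription HIT: US-based workers only.
writing_reqs = [locale_requirement(["US"])]
# Data validation or duplicate detection HIT: no locale restriction at all.
validation_reqs: list = []
```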

Give Workers More Time Than They Need

The time you allot is the time a worker has to finish after accepting the HIT; once it runs out, they can no longer submit. Imagine starting a job and, when you come back to turn in your work and collect payment, finding that the shop has closed and left town. This can really frustrate workers.

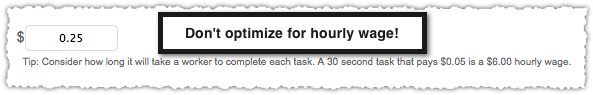

I think Amazon creates this problem with the messaging around the hourly rate calculation. My advice: don’t get too hung up on the hourly rate, and err on the side of providing more time for your HITs.
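One way to build in that slack is to start from a realistic estimate of how long the task takes and multiply it. In the API this value is `create_hit`’s `AssignmentDurationInSeconds`. The 3x buffer and the helper name below are assumptions for illustration, not an Amazon default.

```python
import math


def assignment_duration_seconds(estimated_minutes: float, buffer_factor: float = 3.0) -> int:
    """Pad a time estimate and round up to a whole minute, in seconds."""
    if estimated_minutes <= 0 or buffer_factor < 1:
        raise ValueError("need a positive estimate and a buffer of at least 1x")
    padded_minutes = math.ceil(estimated_minutes * buffer_factor)
    return padded_minutes * 60


print(assignment_duration_seconds(5))    # 900 (15 minutes)
print(assignment_duration_seconds(2.5))  # 480 (7.5 rounded up to 8 minutes)
```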

Provide Specific Directions

Remember that you are communicating work at a distance to an unknown person. There’s no back-and-forth dialog to clarify.

In addition, workers are looking to complete work quickly and to ensure they fulfill the HIT so their approval rate remains high. The latter, in particular, makes specificity very important.

Tell workers exactly what to do and what type of work output is expected.

Make It Look Easy

While the directions should be specific, you don’t want a 500-word paragraph of text to scare folks off. Make sure your HIT looks easy from a visual perspective, meaning it’s easily scanned and understood.

Take advantage of the HTML editor: build a proper font hierarchy and appropriate input fields, and use a pop of color when you really want to draw attention to something important.

Give Your HIT a Good Title

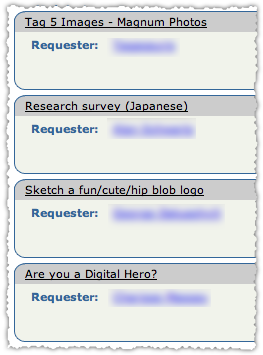

Make sure your HIT title is the appropriate length (not too short or long) and that it’s descriptive and appealing.

A good title is a mixture of SEO and marketing principles. It should be relevant and descriptive but also interesting and alluring.

Bundle The Work

If you can do it, bundle a bunch of small tasks into one HIT. For instance, have them tag 10 photos at a time.

This lets you set a higher price per HIT, which attracts a larger pool of workers, since many don’t seek out ‘penny’ HITs.
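Bundling is just chunking a list of small items and pricing each chunk. A minimal sketch: the per-photo price of $0.02 and the file names are hypothetical, and the reward is formatted as a dollar string because that is what boto3’s `create_hit` takes for `Reward`. `Decimal` avoids floating-point rounding in the money math.

```python
from decimal import Decimal
from typing import List, Sequence


def bundle(items: Sequence[str], size: int = 10) -> List[List[str]]:
    """Split a list of small tasks into HIT-sized groups."""
    if size < 1:
        raise ValueError("size must be at least 1")
    return [list(items[i:i + size]) for i in range(0, len(items), size)]


def bundle_reward(group: Sequence[str], price_per_item: Decimal) -> str:
    """Reward for one bundled HIT, as a US-dollar string such as "0.20"."""
    return str(price_per_item * len(group))


photos = [f"photo_{n:03d}.jpg" for n in range(1, 26)]  # 25 hypothetical photos
groups = bundle(photos, 10)
print([len(g) for g in groups])                             # [10, 10, 5]
print([bundle_reward(g, Decimal("0.02")) for g in groups])  # ['0.20', '0.20', '0.10']
```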

Mind Your Email

Workers will email you – frequently. Do not ignore them.

You are joining a community. Just take a peek at Turker Nation. As with any community, you get and build a reputation. Don’t make it a bad one. Respond to your email, even if the response isn’t what workers want to hear.

In addition, you learn how to tweak your HIT by listening to and interacting with the workers.

Pay Fast

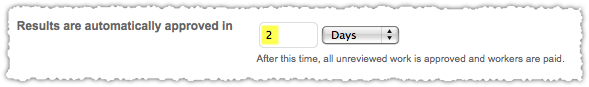

A lot of the email you receive will revolve around a familiar refrain: “When will you pay?” This gets tedious, so I generally recommend paying quickly, which reduces the amount of unproductive email and gives you a good reputation within the community.

That means setting your automatic approval period to something like 2 or 3 days.
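In the API the auto-approval period is `create_hit`’s `AutoApprovalDelayInSeconds`, so 2 or 3 days has to be converted to seconds. A tiny sketch; the helper name is hypothetical.

```python
SECONDS_PER_DAY = 24 * 60 * 60


def auto_approval_delay_seconds(days: float) -> int:
    """Convert a number of days into an AutoApprovalDelayInSeconds value."""
    if days < 0:
        raise ValueError("days must be non-negative")
    return int(days * SECONDS_PER_DAY)


hit_timing = {"AutoApprovalDelayInSeconds": auto_approval_delay_seconds(3)}
print(hit_timing)  # {'AutoApprovalDelayInSeconds': 259200}
```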

Develop a QA System

To pay fast you need a good QA system. You can either do this yourself or, alternatively, put the work out as a separate HIT. That’s right, you can use Mechanical Turk to QA your Mechanical Turk work. Insert your Inception or Yo Dawg joke here.
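If you use a second HIT for QA, you still have to turn the reviewers’ verdicts into approve or reject decisions. The sketch below uses a simple majority vote over several reviewers per original assignment. That is one possible scheme, not something Mechanical Turk does for you. The assignment IDs and the 3-review minimum are hypothetical.

```python
from typing import Dict, List


def qa_decisions(reviews: Dict[str, List[bool]], min_reviews: int = 3) -> Dict[str, str]:
    """Turn QA-HIT votes into a decision for each original assignment.

    reviews maps an original assignment ID to the verdicts (True = acceptable)
    returned by reviewers in the separate QA HIT.
    """
    decisions = {}
    for assignment_id, votes in reviews.items():
        yes = sum(votes)
        no = len(votes) - yes
        if len(votes) < min_reviews:
            decisions[assignment_id] = "pending"  # wait for more reviews
        elif yes > no:
            decisions[assignment_id] = "approve"
        elif no > yes:
            decisions[assignment_id] = "reject"
        else:
            decisions[assignment_id] = "manual"   # tie: check it yourself
    return decisions


reviews = {
    "ASSIGN_A": [True, True, False],
    "ASSIGN_B": [False, False, True],
    "ASSIGN_C": [True],
    "ASSIGN_D": [True, False, True, False],
}
print(qa_decisions(reviews))
```

Sending ties to a person rather than auto-rejecting keeps an ambiguous review from costing a good worker their approval rate.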

Bonus Good Work

Give a bonus when you find workers who have done an excellent job on a number of HITs. It doesn’t have to be a huge amount, but take the top performers and give them a bonus.

Not only is this the right thing to do, it’ll go a long way to establishing yourself in the community and developing a loyal pool of quality workers.
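Bonuses can also be paid through the API. A minimal sketch that builds keyword arguments for boto3’s `mturk` `send_bonus` call, one per top performer. Each bonus must reference an assignment the worker submitted to you. The worker and assignment IDs, the amount, and the reason text are hypothetical. A deterministic `UniqueRequestToken` means a retried call after a network error won’t pay the same bonus twice.

```python
from decimal import Decimal
from typing import Dict, List, Tuple


def bonus_requests(top_performers: List[Tuple[str, str]], amount: Decimal,
                   reason: str) -> List[Dict[str, str]]:
    """Build send_bonus kwargs from (worker_id, assignment_id) pairs."""
    requests = []
    for worker_id, assignment_id in top_performers:
        requests.append({
            "WorkerId": worker_id,
            "AssignmentId": assignment_id,
            "BonusAmount": str(amount),  # US dollars as a string
            "Reason": reason,
            # Same inputs give the same token, so retries are not paid twice.
            "UniqueRequestToken": f"bonus-{worker_id}-{assignment_id}",
        })
    return requests


bonuses = bonus_requests([("A1EXAMPLEWORKER", "3EXAMPLEASSIGNMENT")],
                         Decimal("0.50"), "Thanks for consistently excellent work!")
# With real credentials: client = boto3.client("mturk"); client.send_bonus(**bonuses[0])
```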

Build a Workforce

Once you find and bonus good workers, continue to give them HITs. You can do this by creating a list of those workers and limiting your HITs to just them.

If you do this, you probably want to keep the ‘Required for preview’ box checked so workers not on the list aren’t frustrated by previewing a HIT they have no chance of working on.
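Via the API, the “list” is a custom qualification type. You create it with `create_qualification_type` and grant it to each worker with `associate_qualification_with_worker`. The HIT then requires that the qualification exists. In the sketch below, `ActionsGuarded="PreviewAndAccept"` plays the role of ‘Required for preview’: workers without the qualification may still see the HIT in search, but can’t preview or accept it. The qualification ID is a hypothetical placeholder.

```python
def workforce_requirement(qualification_type_id: str,
                          hide_from_search: bool = False) -> dict:
    """Limit a HIT to workers holding your custom 'trusted workers' qualification."""
    return {
        "QualificationTypeId": qualification_type_id,
        "Comparator": "Exists",
        # "PreviewAndAccept": others see the HIT in search but can't open it.
        # "DiscoverPreviewAndAccept": others don't see it at all.
        "ActionsGuarded": "DiscoverPreviewAndAccept" if hide_from_search else "PreviewAndAccept",
    }


trusted_only = [workforce_requirement("3EXAMPLEQUALTYPEID")]
```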

Download the worker history (under Manage > Workers) and use Excel to find high volume and high quality workers. Then create your list (under Manage > Qualification Types) so you can use it in your HIT.
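If you’d rather script the Excel step, the same filter takes a few lines. This sketch assumes a CSV with hypothetical columns `worker_id`, `approved`, and `rejected`; the real export’s column names may differ, so adjust them to match your file. The volume and quality thresholds are also assumptions.

```python
import csv
import io
from typing import List


def select_workers(csv_text: str, min_assignments: int = 50,
                   min_approval: float = 0.98) -> List[str]:
    """Return worker IDs with enough volume and a high enough approval ratio."""
    selected = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        approved = int(row["approved"])
        rejected = int(row["rejected"])
        total = approved + rejected
        if total == 0:
            continue  # no history with you yet
        if total >= min_assignments and approved / total >= min_approval:
            selected.append(row["worker_id"])
    return selected


sample = """worker_id,approved,rejected
A1EXAMPLE,120,1
A2EXAMPLE,30,0
A3EXAMPLE,200,15
"""
print(select_workers(sample))  # ['A1EXAMPLE']
```

A2EXAMPLE is perfect but low-volume; A3EXAMPLE is high-volume but falls below the quality bar.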

Block Bad Apples

Just as you build a list of good workers, you also need to block a few bad ones. They might have dynamite approval ratings, but earned on different types of tasks. Some people are good at some things and … not so good at others.

Coaching workers is time-consuming and costly, so it’s probably better for you and the worker to simply part ways. You keep the approval rate on your HITs high, and the worker doesn’t put their approval rate in jeopardy.
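A sketch of deciding whom to block from your own QA results, producing keyword arguments for boto3’s `mturk` `create_worker_block`, which takes a `WorkerId` and a `Reason`. The minimum sample size, the 30% rejection threshold, and the worker IDs are hypothetical. Requiring a minimum number of reviewed assignments keeps one bad submission from getting someone blocked.

```python
from typing import Dict, List, Tuple


def workers_to_block(results: Dict[str, Tuple[int, int]], min_reviewed: int = 10,
                     max_reject_rate: float = 0.3) -> List[dict]:
    """results maps worker ID -> (accepted, rejected) counts from your QA."""
    blocks = []
    for worker_id, (accepted, rejected) in sorted(results.items()):
        reviewed = accepted + rejected
        if reviewed == 0 or reviewed < min_reviewed:
            continue  # not enough evidence yet
        if rejected / reviewed > max_reject_rate:
            blocks.append({
                "WorkerId": worker_id,
                "Reason": "Work on this task type did not meet our quality requirements.",
            })
    return blocks


results = {"A1EXAMPLE": (20, 1), "A2EXAMPLE": (6, 9), "A3EXAMPLE": (2, 3)}
print([b["WorkerId"] for b in workers_to_block(results)])  # ['A2EXAMPLE']
```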

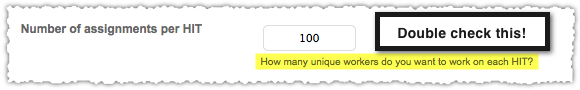

Understand Assignments

Finally, understand and use assignments wisely. Each HIT can be assigned to a certain number of workers.

So if your HIT is about getting feedback on your new homepage design, you might assign 500 workers to that HIT. That means you’ll get 500 reactions to your new homepage. It’s one general task that requires multiple responses.

But if your HIT is about validating phone numbers for 500 businesses, you’ll create one HIT per business and assign 1 worker to each. That means you’ll get one validation per phone number. Do not assign 500 workers to each, or you’ll get 500 validations per phone number. That’s wasteful and likely to irk those businesses too.
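The difference comes down to `create_hit`’s `MaxAssignments` setting times the number of HITs. A small sketch compares the two plans with the mistake to avoid. `HitSpec` and the plan functions are hypothetical helpers, and the business IDs are made up.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class HitSpec:
    title: str
    max_assignments: int  # corresponds to create_hit's MaxAssignments


def survey_plan(responses_wanted: int) -> List[HitSpec]:
    # One general task, many independent responses.
    return [HitSpec("Give feedback on our new homepage design", responses_wanted)]


def validation_plan(business_ids: List[str], workers_per_hit: int = 1) -> List[HitSpec]:
    # One HIT per business, normally a single worker each.
    return [HitSpec(f"Validate the phone number for business {b}", workers_per_hit)
            for b in business_ids]


def total_assignments(plan: List[HitSpec]) -> int:
    return sum(hit.max_assignments for hit in plan)


businesses = [f"B{n:03d}" for n in range(500)]
print(total_assignments(survey_plan(500)))                  # 500
print(total_assignments(validation_plan(businesses)))       # 500
print(total_assignments(validation_plan(businesses, 500)))  # 250000: the wasteful mistake
```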

Mechanical Turk Tips

These tips are the product of experience (my own and the talented Drew Ashlock’s), of trial and error, and of stubbing toes along the way.

I hope this helps you avoid some of those pitfalls and allows you to get the most out of a truly innovative and valuable service.