What if I told you that the future of mobile search was swiping?

I don’t mean that there will be a few carousels of content. Instead I mean that all of the content will be displayed in a horizontal swiping interface. You wouldn’t click on a search result, you’d simply swipe from one result to the next.

This might sound far-fetched, but there’s growing evidence that this could be Google’s endgame. The Tinderization of mobile search could be right around the corner.

Horizontal Interface

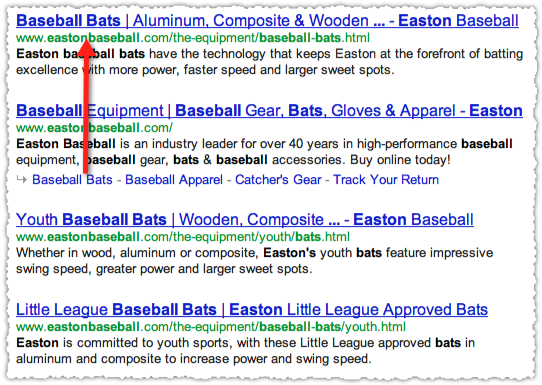

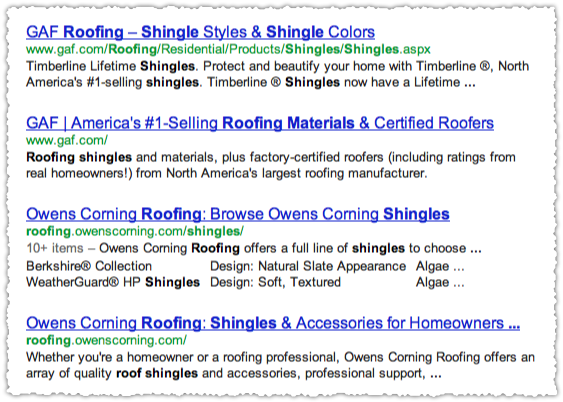

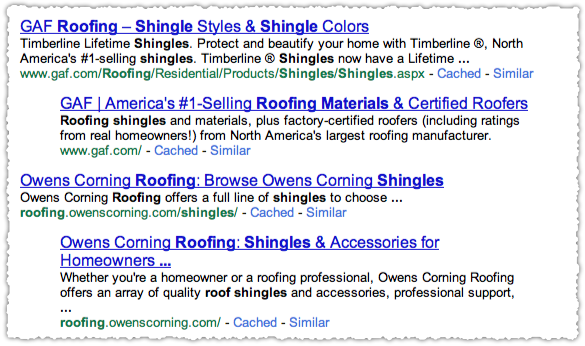

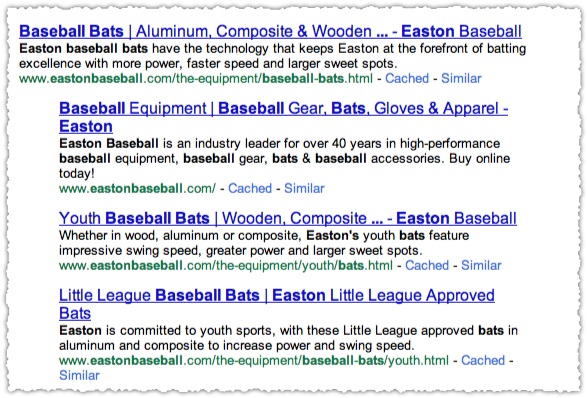

Google has been playing with horizontal interfaces on mobile search for some time now. You can find one under certain Twitter profiles.

There’s one for videos.

And another for recipes.

There are plenty of other examples. But the most important one is the one for AMP.

The reason the AMP example is so important is that AMP is no longer going to be served just in a carousel but will be available to any organic search result.

But you have to wonder how Google will deliver this type of AMP carousel interface with AMP content sprinkled throughout the results. (They already refer to the interface as the ‘AMP viewer’.)

What if you could simply swipe between AMP results? The current interface lets you do this already.

Once AMP is sprinkled all through the results, wouldn’t it be easier to swipe between AMP results once you were in that environment? They already have the dots navigation element to indicate where you are in the order of results.

I know, I know, you’re thinking about how bad this could be for non-AMP content but let me tell you a secret. Users won’t care and neither will Google.

User experience trumps publisher whining every single time.

In the end, instead of creating a carousel for the links, Google can create a carousel for the content itself.

AMP

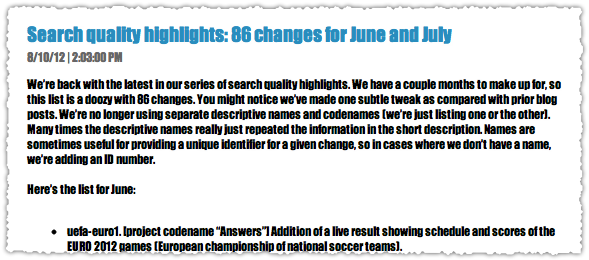

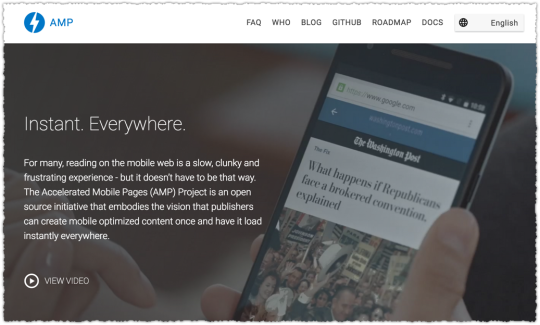

For those of you who aren’t hip to acronyms, AMP stands for Accelerated Mobile Pages. It’s an initiative by Google to create near instantaneous availability of content on mobile.

The way they accomplish this is by having publishers create very lightweight pages and then caching them on Google servers. So when you click on one of those AMP results you’re essentially getting the cached version of the page directly from Google.

The AMP initiative is all about speed. If the mobile web is faster it helps with Google’s (not so) evil plan. It also has an interesting … side effect.

Google could host the mobile Internet.

That’s both amazing and a bit terrifying. When every piece of content in a search result is an AMP page Google can essentially host that mobile result in its entirety.

At first AMP was just for news content but as of today Google is looking to create AMP content for everything including e-commerce. So the idea of an all AMP interface doesn’t seem out of the question.

Swipes Not Clicks

Why make users click if every search result is an AMP page? Seriously. Think about it.

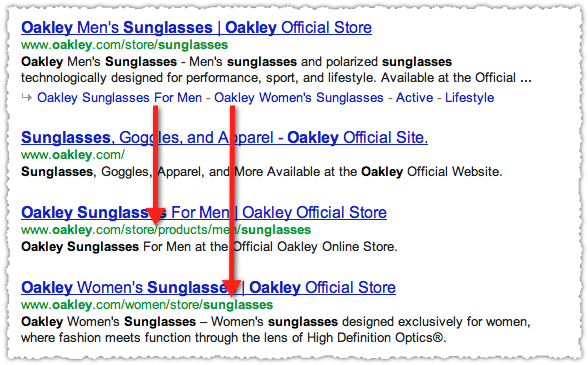

Google is obsessed with reducing the time to long click, the amount of time it takes to get users to a satisfactory result. What better way to do this than to remove the friction of clicking back and forth to each site?

No more blue links.

Why make users click when you can display that content immediately? Google has it! Then users can simply swipe to the next result, and the next, and the next and the next. They can even go back and forth in this way until they find a result they wish to delve into further.

Swiping through content would be a radical departure from the traditional search interface but it would be vastly faster and more convenient.

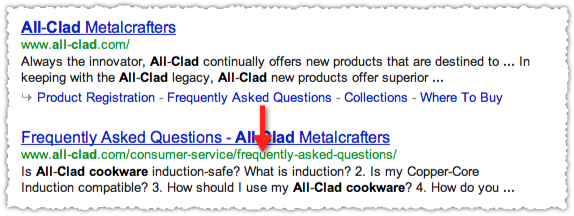

This would work with the numerous other elements that bubble information up further in the search process, such as Knowledge Panels and Oneboxes. Dr. Pete Meyers showed how some of these ‘cards’ could fit together. But the cards would work equally well in a swiping environment.

How much better would it be to search for a product and swipe through the offerings of those appearing in search results?

New Metrics of Success

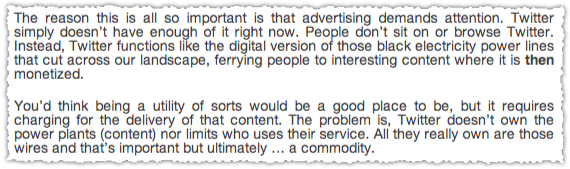

If this is where the mobile web is headed, then the game will completely change. Success won’t be tied nearly as much to rank. When you remove the friction of clicking, the number of ‘views’ each result gets will be much higher.

The normal top-heavy click distribution will disappear, replaced by a more even ‘view’ distribution across the top 3-5 results. I’m assuming most users will swipe at least three times, if not more, but that there will be a severe drop-off after that.
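To make that assumption concrete, here’s a rough back-of-the-envelope sketch in Python. Every number in it is made up for illustration: the 90% chance of swiping onward, the drop to 30% after the fourth result, and the function and parameter names are all my own hypothetical choices, not data from Google or anyone else.

```python
def view_probabilities(num_results, keep_swiping, drop_after, keep_swiping_late):
    """Chance that a searcher sees each result, in swipe order.

    keep_swiping:      hypothetical chance of swiping on from an early result
    drop_after:        position at which swiping enthusiasm falls off
    keep_swiping_late: hypothetical chance of swiping on from that position onward
    """
    probs = []
    reach = 1.0  # every searcher sees the first result
    for position in range(1, num_results + 1):
        probs.append(reach)
        # Chance of swiping from this result on to the next one.
        step = keep_swiping if position < drop_after else keep_swiping_late
        reach *= step
    return probs


# Illustrative numbers only: most people swipe three times, then bail.
views = view_probabilities(
    num_results=6, keep_swiping=0.9, drop_after=4, keep_swiping_late=0.3
)
for position, p in enumerate(views, start=1):
    print(f"result {position}: seen by {p:.0%} of searchers")
```

Under those invented inputs the first four results all get seen by a large majority of searchers, and then views fall off a cliff. Change the numbers and the shape changes, which is exactly why the swipe rate matters more than the raw rank.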

When a user swipes to your result you’ll still get credit for a visit by implementing Google Analytics or another analytics package correctly. But users aren’t really on your site at that point. It’s only when they click through on that AMP result that they wind up in your mobile web environment.

So the new metric for mobile search success might be getting users to stop on your result and, optimally, click-through to your site. That’s right, engagement could be the most important metric. Doesn’t that essentially create alignment between users, Google and publishers?

Funny thing is, Google just launched the ability to do A/B testing for AMP pages. They’re already thinking about how important it’s going to be to help publishers optimize for engagement.

Hype or Reality?

Google, as a mobile first company, is pushing hard to reduce the distance between search and information. I don’t think this is a controversial statement. The question is how far Google is willing to go to shorten that distance.

I’m putting a bunch of pieces together here, from horizontal interfaces to AMP to Google’s obsession with speed, to come up with this forward-looking vision of mobile search.

I think it’s in the realm of possibility, particularly since the growth areas for Google are in countries outside of the US where mobile is vastly more dominant and where speed can sometimes be a challenge.

TL;DR

When every search result is an AMP page there’s little reason for users to click on a result to see that content. Should Google’s AMP project succeed, the future of mobile search could very well be swiping through content and the death of the blue link.