Are click-through rates on search results a ranking signal? The idea is that if the third result on a page is clicked more often than the first, it will, over time, rise to the second or first result.

I remember this question being asked numerous times when I was just starting out in the industry. Google representatives employed a potent combination of tap dancing and hand waving when asked directly. They were so good at it that we stopped hounding them, and over the last few years I've rarely heard people talking about, let alone asking, this question.

Perhaps it’s because more and more people aren’t focused on the algorithm itself and are instead focused on developing sites, content and experiences that will be rewarded by the algorithm. That’s actually the right strategy. Yet I still believe it’s important to understand the algorithm and how it might impact your search efforts.

Following is an exploration of why I believe click-through rate is a ranking signal.

Occam’s Razor

Though the original principle wasn’t as clear cut, today’s interpretation of Occam’s Razor is that the simplest answer is usually the correct one. So what’s more plausible? That Google uses click-through rate as a signal, or that the most data-driven company in the world would ignore direct measurement from their own product?

It just seems like common sense, doesn’t it? Of course, we humans are often wired to make poor assumptions. And don’t get me started on jumping to conclusions based on correlations.

The argument against is that even Google would have a devil of a time using click-through rate as a signal across the millions of results for a wide variety of queries. Their resources are finite and perhaps it’s just too hard to harness this valuable but noisy data.

The Horse’s Mouth

It gets more difficult to make the case against Google using click-through rate as a signal when you get confirmation right from the horse’s mouth.

Google confirms watching clicks to evaluate results quality. FYI Google still won’t say if clicks used as rank signal pic.twitter.com/jzNGc5reQk

— Danny Sullivan (@dannysullivan) March 25, 2015

That seems pretty close to a smoking gun, doesn’t it?

Now, perhaps Google wants to play a game of semantics. Click-through rate isn’t a ranking signal. It’s a feedback signal. It just happens to be a feedback signal that influences rank!

Call it what you want; at the end of the day it sure sounds like click-through rate can impact rank.

[Updated 7/22/15]

Want more? I couldn’t find this quote the first time around but here’s Marissa Mayer in the FTC staff report (pdf) on antitrust allegations.

According to Marissa Mayer, Google did not use click-through rates to determine the position of the Universal Search properties because it would take too long to move up on the SERP on the basis of user click-through rate.

In other words, they ignored click data to ensure Google properties were slotted in the first position.

Then there’s former Google engineer Edmond Lau in an answer on Quora.

It’s pretty clear that any reasonable search engine would use click data on their own results to feed back into ranking to improve the quality of search results. Infrequently clicked results should drop toward the bottom because they’re less relevant, and frequently clicked results bubble toward the top. Building a feedback loop is a fairly obvious step forward in quality for both search and recommendations systems, and a smart search engine would incorporate the data.

So is Google a reasonable and smart search engine?

The Old Days

There are other indications that Google has the ability to monitor click activity on a query-by-query basis, and that they’ve had that capability for dog years.

Here’s an excerpt from a 2007 interview with Marissa Mayer, then VP of Search Products, on the implementation of the OneBox.

We hold them to a very high click through rate expectation and if they don’t meet that click through rate, the OneBox gets turned off on that particular query. We have an automated system that looks at click through rates per OneBox presentation per query. So it might be that news is performing really well on Bush today but it’s not performing very well on another term, it ultimately gets turned off due to lack of click through rates. We are authorizing it in a way that’s scalable and does a pretty good job enforcing relevance.

So way back in 2007 (eight years ago, folks!) Google had built a scalable system that used click-through rate per query to decide whether to display a OneBox.

That seems to poke holes in the idea that Google doesn’t have the horsepower to use click-through rate as a signal.
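To make the mechanism Mayer describes concrete, here is a minimal Python sketch of a per-query OneBox gate. The event format, `onebox_states`, and both thresholds are hypothetical; Google never published how its automated system worked, only that it judged click-through rate per OneBox presentation per query.

```python
from collections import defaultdict

# Hypothetical thresholds: Mayer describes "a very high click through rate
# expectation" but no published numbers.
MIN_IMPRESSIONS = 100  # don't judge a OneBox/query pair before it has been shown this often
MIN_CTR = 0.05         # below this CTR the OneBox is switched off for that query


def onebox_states(events):
    """Decide, per (onebox, query) pair, whether the OneBox stays on.

    events: iterable of (onebox, query, clicked) tuples, one per presentation.
    Returns {(onebox, query): bool}; False means "turned off for this query".
    """
    shown = defaultdict(int)
    clicked = defaultdict(int)
    for onebox, query, was_clicked in events:
        key = (onebox, query)
        shown[key] += 1
        clicked[key] += int(was_clicked)

    states = {}
    for key, impressions in shown.items():
        if impressions < MIN_IMPRESSIONS:
            states[key] = True  # not enough evidence yet, leave it on
        else:
            states[key] = clicked[key] / impressions >= MIN_CTR
    return states


# Example: News performs well on "bush" but poorly on another query.
events = [("news", "bush", i < 20) for i in range(200)]
events += [("news", "other term", i < 2) for i in range(200)]
print(onebox_states(events))
# {('news', 'bush'): True, ('news', 'other term'): False}
```

The decision is made per query, which is the part that matters here: the same OneBox can stay on for one query and be switched off for another.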

The Bing Argument

Others might argue that if Bing is using click-through rate as a signal, Google surely must be as well. Here’s what Duane Forrester, Senior Product Manager for Bing Webmaster Outreach (or something like that), said to Eric Enge in 2011.

We are looking to see if we show your result in a #1, does it get a click and does the user come back to us within a reasonable timeframe or do they come back almost instantly?

Do they come back and click on #2, and what’s their action with #2? Did they seem to be more pleased with #2 based on a number of factors or was it the same scenario as #1? Then, did they click on anything else?

We are watching the user’s behavior to understand which result we showed them seemed to be the most relevant in their opinion, and their opinion is voiced by their actions.

This and other conversations I’ve had make me confident that click-through rate is used as a ranking signal by Bing. The argument against is that Google is so far ahead of Bing that they may have tested and discarded click-through rate as a signal.

Yet as other evidence piles up, perhaps Google didn’t discard click-through rate but simply uses it more effectively.

Pogosticking and Long Clicks

Duane’s remarks also tease out a little more about how click-through rate would be used and applied. It’s not a metric used in isolation but one measured in terms of time spent on the clicked result, whether the user returned to the SERP, and whether they then refined their search or clicked on another result.

When you really think about it, if pogosticking and long clicks are real measures then click-through rate must be part of the equation. You can’t calculate pogosticking or long clicks without having the underlying click data.

And when you dig deeper, Google does talk about ‘click data’ and ‘click signals’ quite a bit. So once again perhaps it’s all a game of semantics, the equivalent of Bill Clinton clarifying the meaning of ‘is’.
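The dependency on click data is easy to see in code. This is a minimal sketch, assuming a hypothetical log where each search session is an ordered list of clicks with the time until the user returned to the SERP; `Click`, `serp_metrics` and the 60-second long-click cutoff are illustrative, not anything Bing or Google has published.

```python
from dataclasses import dataclass
from typing import List, Optional

# Hypothetical cutoff; neither Bing nor Google has published one.
LONG_CLICK_SECONDS = 60


@dataclass
class Click:
    url: str
    # Seconds until the user came back to the SERP; None = never came back.
    dwell: Optional[float]


def serp_metrics(impressions: int, sessions: List[List[Click]], url: str) -> dict:
    """CTR, long-click rate and pogostick rate for one URL on one SERP.

    impressions: times the SERP containing `url` was shown.
    sessions: the clicks in each search session, in the order they happened.
    """
    clicks = long_clicks = pogosticks = 0
    for session in sessions:
        for i, click in enumerate(session):
            if click.url != url:
                continue
            clicks += 1
            if click.dwell is None or click.dwell >= LONG_CLICK_SECONDS:
                long_clicks += 1
            elif i + 1 < len(session):
                # Came back quickly and clicked another result: pogosticking.
                pogosticks += 1
            # A quick return with no further click (e.g. a refined query) is neither.
    return {
        "ctr": clicks / impressions if impressions else 0.0,
        # Both behavioural rates are fractions of clicks, so they need click data.
        "long_click_rate": long_clicks / clicks if clicks else 0.0,
        "pogostick_rate": pogosticks / clicks if clicks else 0.0,
    }
```

Note that both behavioural rates use the click count as their denominator, so there is no way to compute them without first counting clicks.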

Seeing Is Believing

A handful of prominent SEOs have tested whether click-through rate influences rank. Rand Fishkin has been leading that charge for a number of years.

Back in May of 2014 he performed a test with some interesting results. But it was a long-tail term and other factors might have explained the behavior.

But just the other day he ran another version of the same test.

If you’re curious – results came in rather quickly. Will be interesting to see how long it lasts. pic.twitter.com/I9mJLj2GMq — Rand Fishkin (@randfish) June 21, 2015

However, critics will point out that the result in question is once again at #4, indicating that click-through rate isn’t a ranking signal.

But clearly the burst of searches and clicks had some sort of effect, even if it was temporary, right? So might Google have developed mechanisms to combat this type of ‘bombing’ of click-through rate? Or perhaps the system identifies bursts in query and clicks and reacts to meet a real time or ‘fresh’ need?

Either way, it shows that click-through behavior is monitored. Combined with the admission from Udi Manber, it seems like the click-through rate distribution has to be consistently off the baseline for a material amount of time to impact rank.

In other words, all the testing in the world by a small band of SEOs is a drop in the ocean of the total click stream. So even if we can move the needle for a small time, the data self-corrects.

But Rand isn’t the only one testing this stuff. Darren Shaw has also experimented with this within the local SEO landscape.

Darren’s results aren’t foolproof either. You could argue that Google representatives within local might not be the most knowledgeable about these things. But it certainly adds to a drumbeat of evidence that clicks matter.

But wait, there’s more. Much more.

Show Me The Patents

For quite a while I was conflicted about this topic because of one major stumbling block. It didn’t seem possible to build a click-through rate model that accounted for all the different ways a result can be displayed.

A result with a review rich snippet gets a higher click-through rate because the eye gravitates to it. Google wouldn’t want to reward that result from a click-through rate perspective just because of how it’s displayed.

Or what happens when the result has an image, an answer box, a video or any number of other elements? There seemed to be too many variations to create a workable model.

But then I got hold of two Google patents titled “Modifying search result ranking based on implicit user feedback” and “Modifying search result ranking based on implicit user feedback and a model of presentation bias.”

The second patent seems to build on the first, and Hyung-Jin Kim is an inventor on both.

Both of these are rather dense patents, and they remind me that we should all thank Bill Slawski for his tireless work reading patents and making them more accessible to the community.

I’ll be quoting from both patents (there’s a tremendous amount of overlap) but here’s the initial bit that encouraged me to put the headphones on and focus on decoding the patent syntax.

The basic rationale embodied by this approach is that, if a result is expected to have a higher click rate due to presentation bias, this result’s click evidence should be discounted; and if the result is expected to have a lower click rate due to presentation bias, this result’s click evidence should be over-counted.

Very soon after this, the patent details a number of different types of presentation bias. So Google saw the same problem but figured out how to account for presentation bias so that it could rely on ‘click evidence’.
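The discounting rule in that quote can be sketched as a ratio of observed to expected clicks. This is an illustration under stated assumptions, not the patent’s model: the `EXPECTED_CTR` table is made up, and `click_evidence` is a hypothetical helper. The point is that identical raw clicks count for less when the presentation alone predicts more of them.

```python
# Hypothetical expected CTRs for a given (position, presentation) pair. In practice
# these would be learned from logs; the numbers here are made up for illustration.
EXPECTED_CTR = {
    (1, "plain"): 0.30,
    (2, "plain"): 0.15,
    (3, "plain"): 0.10,
    (3, "review_snippet"): 0.14,  # stars draw the eye, so more clicks are expected
}


def click_evidence(clicks, impressions, position, presentation):
    """Observed clicks divided by the clicks the presentation alone predicts.

    > 1.0: more clicks than position/presentation explain (counts in its favour)
    < 1.0: fewer clicks than expected
    A result with a click-attracting presentation has a higher expectation, so
    the same raw clicks are discounted; a plainer result is effectively over-counted.
    """
    expected = impressions * EXPECTED_CTR[(position, presentation)]
    return clicks / expected if expected else 0.0


# Same raw numbers (14 clicks from 100 impressions at #3), different presentation.
print(round(click_evidence(14, 100, 3, "plain"), 2))           # 1.4
print(round(click_evidence(14, 100, 3, "review_snippet"), 2))  # 1.0
```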

Then there’s this rather nicely summarized 10,000-foot view of the issue.

In general, a wide range of information can be collected and used to modify or tune the click signal from the user to make the signal, and the future search results provided, a better fit for the user’s needs. Thus, user interactions with the rankings presented to the users of the information retrieval system can be used to improve future rankings.

Again, no one is saying that click-through rate can be used in isolation. But it clearly seems to be one way that Google has thought about re-ranking results.

But it gets better as you go further into these patents.

The information gathered for each click can include: (1) the query (Q) the user entered, (2) the document result (D) the user clicked on, (3) the time (T) on the document, (4) the interface language (L) (which can be given by the user), (5) the country (C) of the user (which can be identified by the host that they use, such as www-google-co-uk to indicate the United Kingdom), and (6) additional aspects of the user and session. The time (T) can be measured as the time between the initial click through to the document result until the time the user comes back to the main page and clicks on another document result. Moreover, an assessment can be made about the time (T) regarding whether this time indicates a longer view of the document result or a shorter view of the document result, since longer views are generally indicative of quality for the clicked through result. This assessment about the time (T) can further be made in conjunction with various weighting techniques.

Here we see clear references to how to measure long clicks and later on they even begin to use the ‘long clicks’ terminology. (In fact, there’s mention of long, medium and short clicks.)
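Here is a rough sketch of what one of those click records and the short/medium/long bucketing might look like. The fields map to Q, D, T, L and C from the quote; the 30 and 100 second cutoffs, `ClickRecord`, `click_class` and `click_profile` are hypothetical.

```python
from collections import Counter, defaultdict
from dataclasses import dataclass

# Hypothetical cutoffs in seconds. The patent talks about short, medium and long
# clicks, and notes that thresholds can vary by query, but gives no fixed values.
SHORT_MAX = 30
MEDIUM_MAX = 100


@dataclass(frozen=True)
class ClickRecord:
    query: str      # Q: the query the user entered
    doc: str        # D: the result the user clicked
    seconds: float  # T: time until the user came back and clicked another result
    language: str   # L: interface language
    country: str    # C: country of the user


def click_class(seconds):
    """Bucket the time-on-document T into short / medium / long."""
    if seconds < SHORT_MAX:
        return "short"
    if seconds < MEDIUM_MAX:
        return "medium"
    return "long"


def click_profile(records, language=None, country=None):
    """Count short/medium/long clicks per (query, doc), optionally for one locale."""
    profile = defaultdict(Counter)
    for r in records:
        if language is not None and r.language != language:
            continue
        if country is not None and r.country != country:
            continue
        profile[(r.query, r.doc)][click_class(r.seconds)] += 1
    return profile
```

Keeping language and country on each record is what lets the same query-document pair be judged separately per locale.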

But does it take into account different classes of queries? Sure does.

Traditional clustering techniques can also be used to identify the query categories. This can involve using generalized clustering algorithms to analyze historic queries based on features such as the broad nature of the query (e.g., informational or navigational), length of the query, and mean document staytime for the query. These types of features can be measured for historical queries, and the threshold(s) can be adjusted accordingly. For example, K means clustering can be performed on the average duration times for the observed queries, and the threshold(s) can be adjusted based on the resulting clusters.

This shows that Google may adjust what they view as a good click based on the type of query.
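The clustering step in that quote can be sketched with plain one-dimensional k-means over each query’s average dwell time. Using the cluster centroid as that query’s long-click threshold is an assumed rule for illustration; the patent only says the thresholds “can be adjusted based on the resulting clusters.” `kmeans_1d` and `long_click_thresholds` are hypothetical helpers.

```python
def kmeans_1d(values, k, iterations=50):
    """Plain 1-D k-means (Lloyd's algorithm) with centroids seeded across the sorted data."""
    ordered = sorted(values)
    centroids = [ordered[(i * (len(ordered) - 1)) // max(k - 1, 1)] for i in range(k)]
    for _ in range(iterations):
        groups = [[] for _ in range(k)]
        for v in values:
            nearest = min(range(k), key=lambda c: abs(v - centroids[c]))
            groups[nearest].append(v)
        # Keep the old centroid if a cluster ends up empty.
        updated = [sum(g) / len(g) if g else centroids[i] for i, g in enumerate(groups)]
        if updated == centroids:
            break
        centroids = updated
    return centroids


def long_click_thresholds(mean_dwell_by_query, k=2):
    """Map each query to the centroid of its dwell-time cluster (assumed rule)."""
    centroids = kmeans_1d(list(mean_dwell_by_query.values()), k)
    return {
        query: min(centroids, key=lambda c: abs(dwell - c))
        for query, dwell in mean_dwell_by_query.items()
    }


thresholds = long_click_thresholds({
    "train schedule": 20, "bus times": 25, "weather": 15,
    "history of the roman empire": 300, "mortgage guide": 280,
})
print(thresholds["train schedule"], thresholds["mortgage guide"])  # 20.0 290.0
```

Quick-answer queries land in a short-dwell cluster and long-read queries in a long-dwell one, so a 25-second visit can count as a good click for one class and a poor one for the other.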

But what about types of users? That’s when it all goes to hell in a handbasket, right? Nope. Google figured that out.

Moreover, the weighting can be adjusted based on the determined type of the user both in terms of how click duration is translated into good clicks versus not-so-good clicks, and in terms of how much weight to give to the good clicks from a particular user group versus another user group. Some user’s implicit feedback may be more valuable than other users due to the details of a user’s review process. For example, a user that almost always clicks on the highest ranked result can have his good clicks assigned lower weights than a user who more often clicks results lower in the ranking first (since the second user is likely more discriminating in his assessment of what constitutes a good result).

Users are not created equal and Google may weight the click data it receives accordingly.
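A minimal sketch of that idea, assuming a hypothetical linear rule: a user’s weight falls from 1.0 toward `MIN_WEIGHT` as the share of searches where they clicked the top result first rises. The patent describes the intent, not this formula, and `user_weight` and `weighted_good_clicks` are illustrative names.

```python
# Hypothetical rule: the patent says users who almost always click the top result
# can have their good clicks weighted lower, but gives no formula.
MIN_WEIGHT = 0.5  # weight for a user who always clicks result #1 first


def user_weight(first_click_positions):
    """Weight between MIN_WEIGHT and 1.0 based on how often the user clicks #1 first.

    first_click_positions: rank of the first result clicked in each past search.
    """
    if not first_click_positions:
        return 1.0
    top_share = sum(1 for p in first_click_positions if p == 1) / len(first_click_positions)
    return 1.0 - (1.0 - MIN_WEIGHT) * top_share


def weighted_good_clicks(good_clicks_by_user, history_by_user):
    """Sum each user's good clicks on a result, scaled by that user's weight."""
    return sum(
        user_weight(history_by_user.get(user, [])) * n
        for user, n in good_clicks_by_user.items()
    )
```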

But they’re missing the boat on topical expertise, right? Not so fast!

In addition, a user can be classified based on his or her query stream. Users that issue many queries on (or related to) a given topic (e.g., queries related to law) can be presumed to have a high degree of expertise with respect to the given topic, and their click data can be weighted accordingly for other queries by them on (or related to) the given topic.

Google may identify topical experts based on queries and weight their click data more heavily.
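Here is one hypothetical way to turn a query stream into topical expertise. The keyword-based `topic_of` classifier, the 20-query and 30% cutoffs, and the 1.5x boost are all assumptions for illustration; the patent only says such users’ click data “can be weighted accordingly.”

```python
from collections import Counter

# Hypothetical topic classifier: a real system would use a trained query classifier.
TOPIC_KEYWORDS = {
    "law": {"statute", "tort", "appeal", "plaintiff", "precedent"},
    "cooking": {"recipe", "braise", "sourdough"},
}
EXPERT_MIN_QUERIES = 20  # hypothetical: minimum on-topic queries
EXPERT_MIN_SHARE = 0.3   # hypothetical: minimum share of the user's query stream
EXPERT_BOOST = 1.5       # hypothetical: extra weight for an expert's clicks


def topic_of(query):
    words = set(query.lower().split())
    for topic, keywords in TOPIC_KEYWORDS.items():
        if words & keywords:
            return topic
    return None


def expert_topics(query_stream):
    """Topics a user queries often enough, and consistently enough, to count as expert."""
    topics = Counter(t for t in map(topic_of, query_stream) if t)
    total = len(query_stream)
    return {
        t for t, n in topics.items()
        if n >= EXPERT_MIN_QUERIES and n / total >= EXPERT_MIN_SHARE
    }


def click_weight(query, user_expert_topics):
    """Boost the user's click data only for queries on their expert topics."""
    return EXPERT_BOOST if topic_of(query) in user_expert_topics else 1.0
```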

Frankly, it’s pretty amazing to read this stuff and see just how far Google has teased this out. In fact, they built in safeguards for the type of tests the industry conducts.

Note that safeguards against spammers (users who generate fraudulent clicks in an attempt to boost certain search results) can be taken to help ensure that the user selection data is meaningful, even when very little data is available for a given (rare) query. These safeguards can include employing a user model that describes how a user should behave over time, and if a user doesn’t conform to this model, their click data can be disregarded. The safeguards can be designed to accomplish two main objectives: (1) ensure democracy in the votes (e.g., one single vote per cookie and/or IP for a given query-URL pair), and (2) entirely remove the information coming from cookies or IP addresses that do not look natural in their browsing behavior (e.g., abnormal distribution of click positions, click durations, clicks_per_minute/hour/day, etc.). Suspicious clicks can be removed, and the click signals for queries that appear to be spammed need not be used (e.g., queries for which the clicks feature a distribution of user agents, cookie ages, etc. that do not look normal).

As I mentioned, I’m guessing the short-lived results of our tests are indicative of Google identifying and then ‘disregarding’ that click data. Not only that, they might decide that the cohort of users who engage in this behavior won’t be used (or their impact will be weighted less) in the future.
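Two of those safeguards are simple enough to sketch: dropping cookies whose clicks-per-minute look abnormal, and counting one vote per cookie for each query-URL pair. This is a hypothetical illustration keyed on cookies only (the patent also mentions IPs, click positions, durations, user agents and cookie ages), and the 10-clicks-per-minute cutoff and `filtered_votes` are made up.

```python
from collections import defaultdict

MAX_CLICKS_PER_MINUTE = 10  # hypothetical "doesn't look natural" cutoff


def filtered_votes(clicks):
    """clicks: iterable of (cookie, query, url, timestamp_seconds).

    Returns {(query, url): votes} after (1) dropping every cookie whose clicks in
    any single minute exceed the cutoff and (2) counting one vote per cookie
    for each query-URL pair.
    """
    clicks = list(clicks)
    per_minute = defaultdict(int)
    for cookie, _, _, ts in clicks:
        per_minute[(cookie, int(ts // 60))] += 1
    suspicious = {cookie for (cookie, _), n in per_minute.items() if n > MAX_CLICKS_PER_MINUTE}

    voters = defaultdict(set)
    for cookie, query, url, _ in clicks:
        if cookie not in suspicious:
            voters[(query, url)].add(cookie)  # a set, so repeat clicks count once
    return {pair: len(cookies) for pair, cookies in voters.items()}
```

Under rules like these, a burst of test clicks from a small group either collapses to a handful of votes or is discarded outright, which fits the short-lived results described above.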

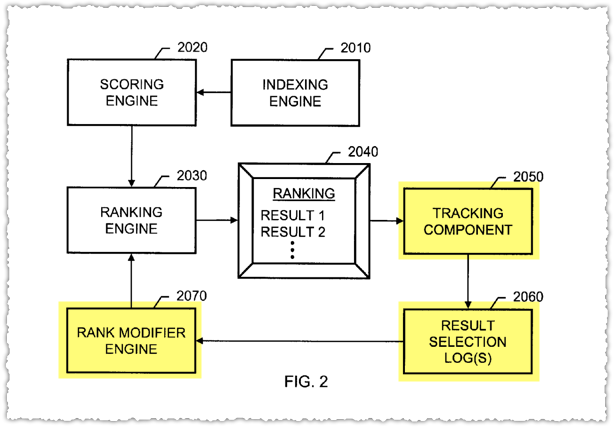

What this all leads up to is a rank modifier engine that uses implicit feedback (click data) to change search results.

Here’s a fairly clear description from the patent.

A ranking sub-system can include a rank modifier engine that uses implicit user feedback to cause re-ranking of search results in order to improve the final ranking presented to a user of an information retrieval system.

It tracks and logs … everything and uses that to build a rank modifier engine that is then fed back into the ranking engine proper.
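A toy version of that rank modifier might look like this. It assumes a hypothetical `click_evidence` ratio (observed over expected clicks), a bounded multiplier on the base score, and a minimum-impressions floor below which click data is ignored; the `Result` type, `modifier`, `rerank` and all constants are illustrative and do not come from the patent.

```python
from dataclasses import dataclass

ALPHA = 0.2            # hypothetical: how strongly click evidence can move a result
MIN_IMPRESSIONS = 500  # hypothetical: below this, leave the base ranking alone


@dataclass
class Result:
    url: str
    base_score: float      # score from the main ranking engine
    click_evidence: float  # observed/expected clicks; 1.0 = performs as expected
    impressions: int


def modifier(result):
    """Multiplier applied to the base score from implicit feedback."""
    if result.impressions < MIN_IMPRESSIONS:
        return 1.0
    # Bound the effect so click data nudges rather than replaces the base ranking.
    return max(0.5, min(1.5, 1.0 + ALPHA * (result.click_evidence - 1.0)))


def rerank(results):
    return sorted(results, key=lambda r: r.base_score * modifier(r), reverse=True)


results = [
    Result("a.com", 1.00, click_evidence=0.5, impressions=1000),
    Result("b.com", 0.95, click_evidence=1.5, impressions=1000),
    Result("c.com", 0.97, click_evidence=3.0, impressions=10),  # too little data
]
print([r.url for r in rerank(results)])  # ['b.com', 'c.com', 'a.com']
```

The small `ALPHA` and the impressions floor reflect the idea in this post that click evidence is a weak signal that only applies once there is enough data.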

But, But, But

Of course this type of system would get tougher as more of the results were personalized. Yet, the way the data is collected seems to indicate that they could overcome this problem.

Google seems to know the inherent quality and relevance of a document, in fact of every document returned on a SERP. As such, they can account for and mitigate the individual user and presentation biases inherent in personalization.

And it’s personalization where Google admits click data is used. But they still deny that it’s used as a ranking signal.

Perhaps it’s a semantics game and if we asked if some combination of ‘click data’ was used to modify results they’d say yes. Or maybe the patent work never made it into production. That’s a possibility.

But looking at it all together and applying Occam’s Razor, I tend to think click-through rate is used as a ranking signal. I don’t think it’s a strong signal, but it’s a signal nonetheless.

Why Does It Matter?

You might be asking, so freaking what? Even if you believe click-through rate is a ranking signal, I’ve demonstrated that manipulating it may be a fool’s errand.

The reason click-through rate matters is that you can influence it with changes to your title tag and meta description. Maybe it’s not enough to tip the scales, but trying is better than not trying, isn’t it?

Those ‘old school’ SEO fundamentals are still important.

Or you could go the opposite direction and build your brand equity through other channels to the point where users would seek out your brand in search results irrespective of position.

Over time, that type of behavior could lead to better search rankings.

TL;DR

The evidence suggests that Google does use click-through rate as a ranking signal. Or, more specifically, Google uses click data as an implicit form of feedback to re-rank and improve search results.

Despite their denials, common sense, Google testimony and interviews, industry testing and patents all lend credence to this conclusion.


Comments About Is Click Through Rate A Ranking Signal?


Matt Antonino // June 24th 2015

Importantly, I think what we need to remember is that Google (and the end user) treats each vertical differently as well. Not just in CTR but in other ways. We don’t call it the “Payday Loans” algo update because it affected hobby shops.

So I think CTR matters (and pretty much always has) as a feedback/ranking signal. However, what constitutes a positive/negative would vary. I imagine they already have a vertical-specific weight on factors because of time on site. You wouldn’t expect “train schedule Melbourne” to have a super-long time on site. But you would for a long, educational 3,000-word article.

Same with click-through rates. “Emergency plumber” should have a high CTR and low return-to-search rates. “cool pictures of Melbourne” should have a higher return-to-search rate despite a high CTR.

And traditionally medical info sites should have a huge return-to-search rate. If you or a family member is diagnosed with something you read EVERY search result, not just the first one. So we can’t compare apples & organic oranges in this case. Every CTR would be query-specific or at least vertical specific.

I think every result on page 1 for any “just diagnosed with X” search would be 100%. I think the CTR on emergency plumber matters a LOT more as you’d expect someone with water on their floor to click one and never return. If they do, that’s a very, very bad signal.

AJ Kohn // June 24th 2015

100% agree Matt. And there are sections in the patent(s) that explore some of these issues. It may not be as explicit as you’ve outlined, but the framework is there and it’s relatively easy to see how Google would get to this vertical endpoint.

You’ve picked great examples, particularly the healthcare vertical, where the return-to-search rate (aka pogosticking) is obviously high based on the mental model of those users.

Conversely, StackExchange is a wildly popular site but with high bounce rates and low page views per session. But that’s because people search for something specific, find what they’re looking for and then get back to coding instead of going back to the search result.

Each vertical will have different CTR benchmarks as they relate to the definition of a long-click. So it’s not just CTR, but CTR+quality that is being measured. Again, those things are detailed in the patents I reference in this piece.

Rick Bucich // June 24th 2015

It’s hard to argue CTR isn’t used…in some cases/verticals. Clear as mud, right? Perhaps I’m biased because if I was a search quality engineer it would be an area I’d closely look at for both page and site aggregate signals.

Ever notice the correlation between a brand affinity and region on Google Trends? CTR would be a direct way to mine that sentiment. I don’t think you would just limit yourself to branded queries. I will frequently type in certain unbranded queries that return the result I’m looking for knowing I will find it in the top 2 or 3 results. Don’t think I’m alone.

Mobile search seems to be training users (in some verticals) to use shorter, more head term oriented queries as well, which necessitates the ability to cater results using signals other than just keywords.

Just a few thoughts pounded out on my phone.

AJ Kohn // June 24th 2015

Yes Rick, it’s getting harder and harder to argue that CTR isn’t used. I try very hard to see where I might be making a leap in logic or a bad assumption, but there comes a point where you’d have to be some sort of contortionist to reason your way to CTR not being a factor. That doesn’t mean it couldn’t still be the case, but I find it highly improbable at this point.

Andrea // June 25th 2015

Great post AJ as always.

I also think that (as Rand’s experiment shows) click events over short time frames can be used to solve a temporary problem.

In my experience I saw this behaviour frequently when a first-position website went down during a huge traffic peak. When this happens, the slow-to-respond website is demoted very quickly and goes back to its previous position after a few hours (once it’s up again).

AJ Kohn // June 25th 2015

Andrea,

Thanks for the kind words and comment. And yes, there are quite a few interesting things that can happen to SERPs during traffic spikes. If a site goes down, too many short clicks in a short time frame do seem to cause a shuffling of positions.

It all points to a fairly adaptive system that helps ensure that users always get a positive experience and that the time to long click is optimized.

Victor Pan // June 25th 2015

The ‘so what’ has always been for me… that meta descriptions are not used for rankings, but hell if they can impact CTR then you should very damn well pay attention to them.

Semantics.

AJ Kohn // June 25th 2015

Thanks for the comment Victor.

I tend to think the title is more important and that meta description is a blur to many users. But you’ve still got a chance with both to make an impression, to gain attention over competing content.

So whether it’s mimicking the query syntax (exact match title tags), ensuring the description fires with as many bolded terms as possible, or simply writing a compelling description, you can always try to drag more clicks to your result.

A lot of things are out of our control, but this is one instance where we can put both SEO and marketing skills right there in front of users (unless Google changes them which usually tells you you’ve screwed up).

RJ Mussiett // June 25th 2015

Seems like some back-end magic with QDF and relative CTRs.

AJ Kohn // June 26th 2015

RJ,

Thanks for the comment. The tests the industry usually performs might trigger some form of QDF (query deserves freshness, for anyone not following the acronyms). And when pushed into that realm you’ve certainly got another type of algorithm in play.

Roger Montti // June 25th 2015

Great article! Except I don’t know why you came to this conclusion:

“The reason click-through rate matters is that you can influence it with changes to your title tag and meta description. Maybe it’s not enough to tip the scales but trying is better than not isn’t it?”

The reason is that keyword-optimized title tags and meta descriptions that induce SERP clicks are the definition of Presentation Bias, which, as you note in your article, is something Google (and Bing) have learned to discount from their scoring.

But other than that (and the question as to how a Ranking Signal is defined), a great article, well done. 😉

AJ Kohn // June 26th 2015

Roger,

Thanks for the kind words and the comment. As to your question, using the ‘right’ words and writing a compelling meta description won’t be interpreted as presentation bias.

In fact, Enrico Altavilla pointed me at research by Google on just this topic (PDF). The test revealed the bias of bold words in the title. But … do you see that bolding today? Nope. It also showed that bolding in the meta description didn’t cause material presentation bias. Hence, they’re still presented in SERPs.

But a well written title or meta description that causes users to select your result over one above it is going to fall more into preference bias. And that’s what you’re trying to create. Ross Hudgens did a good job showing how titles might be used to influence CTR.

Not everything can be attributed to presentation bias but to the actual content and context (i.e – a brand word) of that result.

Jon // June 26th 2015

Brilliant article. Not a smoking gun but a smoking bazooka!

“Users that issue many queries on (or related to) a given topic (e.g., queries related to law) can be presumed to have a high degree of expertise with respect to the given topic”

Or they haven’t got a clue 🙂

AJ Kohn // June 27th 2015

Thanks Jon.

And yes, I suppose it could be one way or the other. I’m guessing they must use some sort of query classification to separate novice from expert. So, ‘what is an objection’ versus, well, I’m not a lawyer so I don’t know exactly but I assume some more specific case law queries.

It’s actually an interesting line of thought on how you’d identify expertise in a field solely by queries.

Grant // June 26th 2015

If Google Adwords uses CTR (or expected vs reality) to calculate / refine Quality Score, why wouldn’t Google be able to use it for organic results?

https://support.google.com/adwords/answer/2454010?hl=en

Great arguments / conclusion AJ

Cheers

AJ Kohn // June 27th 2015

Grant,

Absolutely. I really should have added a ‘The Adwords Argument’ section to this piece. Because there is plenty of research in this area and fairly transparent usage as you reference.

Bill Slawski // June 27th 2015

Hi AJ,

Very interesting stuff. One of the patents I’ve looked at over the past year was one titled “Propagating query classifications”, in which the selection of a search result, and long clicks related to that selection might be used to determine which classification of sites might be best for a particular query. So, a search that includes the term “Lincoln” could involve the President by that name, the city in Nebraska, and the car model. This approach isn’t being used to determine the ultimate site that should rank highest for that term, but rather a category of selection that might go with that particular query term.

And unsurprisingly, one of the inventors listed on that patent is Hyung-Jin Kim. 🙂

AJ Kohn // June 27th 2015

Thanks for the comment and the interesting patent Bill.

So that patent essentially uses implicit feedback in the form of long clicks to help disambiguate the query class. I would imagine it wouldn’t always be binary either since the intent behind some queries will be fractured. This might help Google to determine the order in which those intents should appear in results.

I’ll have to check out more of Mr. Kim’s patents when I get the chance.

JebediahJuice // June 27th 2015

Google serves a market of one. You.

Your CTR affects only your (future) results.

AJ Kohn // June 27th 2015

You are free to believe that, Jebediah, and click evidence is clearly used in personalization.

But exactly what do you base the exclusionary use of CTR on and how do you address the contents of this post?

Bill Slawski // June 27th 2015

Hi AJ,

You’re welcome. Glad to supplement your stellar research with the notion that it is likely worth looking through more of Hyung-Jin Kim’s patents. They do seem to offer some interesting insights.

Mark Munroe // June 28th 2015

Great post and discussion.

To me, it is unthinkable that they wouldn’t use it. They have their own metrics for their own site (like we do for ours) and many of those metrics are based on click behavior. So, of course, they do things to try and improve those metrics (like alter results).

But there is a problem with using click behavior for individual pages and search terms. The vast majority of pages in Google’s index don’t get enough impressions and clicks to reach statistical significance.

So I think Google applies it at a domain level, (strengthening or weakening a domain), not specific to an individual term or page. True, they could use it for high volume search terms, but I tend to think they would want to implement an algorithm that is globally applicable.

Same thing for all click metrics … I think Google uses them as well, but also at the domain level for the same reason.

AJ Kohn // June 28th 2015

Thanks for the kind words and comment Mark. You do bring up an interesting dilemma on the volume of data for many queries.

The idea of a domain level click evidence metric is possible. But I wonder if it’s not a document level click evidence metric that can be stored and used independent of queries. So, for instance, if that document appears in 1,245 queries and the click evidence on that result is positive (performs at or above CTR benchmarks with appropriate long click percentages) then it might be ranked higher for long tail queries when competing against other documents in that SERP.

In addition, between auto-complete and hummingbird I tend to think that the number of queries might be declining and some of what is happening is a grouping of queries that can be answered by a selection of documents. But you’re right, if we believe Panda (a domain level algorithm) scores for thin content and poor experience then the opposite could also certainly be true.

Marvin Webster // June 28th 2015

If they do use click-thru rate for ranking I would hope it would be buffered by bounce rate. Getting click thru doesn’t really prove anything about the quality of the information on the site in my opinion.

AJ Kohn // June 28th 2015

Marvin,

Well, I should hope it’s not bounce rate, which is very isolated, but pogosticking and click length that, among other things, are used to measure productive CTR. That’s what 50% of this post explores.

Mark Munroe // June 28th 2015

AJ,

Even at the document level, data will be too sparse for most pages. I was looking at some data from a high-traffic travel site with half a million indexed pages, about 19K pages generating landings in a month and about 2 million visits a month. Of that 19K, only about 4K get more than 50 clicks in a month. About 8K get fewer than 20. Somewhere there will be a cutoff where a page can’t be judged behaviorally.

Of course, behavioral metrics could work into the algorithm in multiple ways. It is possible they use them at the query level, but only when there is enough data to make an assessment (meaning my original assumption was wrong). They could also feed into a domain-level score based on the domain’s overall metrics for its appearances in the SERP, which would then impact results for the entire domain.

Mark

AJ Kohn // June 30th 2015

Mark,

Yes, the click data might be sparse, but there’s also a survivorship bias here to some degree. For every click there’s a higher number of non-clicks, which are part of the entire data set.

But yes, clearly not all queries will rise to the level where you can use click evidence at the document level. I’d guess that it’s used for those that have enough volume, and when there isn’t enough volume it isn’t used at all, or they may fall back and apply domain-level click evidence in the rank modifier engine.

There’s also an intriguing thread to tease out about new content that enters a SERP: how it interacts with the click data, and when the click evidence rises to a high enough confidence level that it can start to be applied.

Bill Sebald // June 29th 2015

I like the argument. I’ve always believed CTR is used to proof rankings, but not as a direct signal on typical queries because of a) spammers (G is not going to go down the road of underestimating them again), b) Mark’s point, and c) other use cases that could falsely promote bad rankings. The patents you shared challenge my traditional thinking, and so they should: Google only gets more sophisticated as it builds upon itself. That was good to see.

Now, I’ve seen some friends, incredibly clever spammers, never move the needle with click bots. But that could mean Google identified them (even though they tricked AdWords), or that there’s no measurable effect. I’ve also seen and performed eye-tracking studies for a top ecommerce platform showing that a snippet following a strong CTA title not only gets read but gets more relative time in the SERPs. But that’s quite different from Google’s study. My story would suggest better (or possibly inflated) performance from snippets.

Then there’s Google last month at SMX blatantly saying “no.” But usually I find Gary to be black and white on items that we’ve collectively tested with real “it depends” answers.

So where does that leave me? This stubborn dog (me) is starting to lean the other way now. I really enjoyed the piece and have been turning it over for a few days now. Thanks for taking the time to put together a really challenging piece.

AJ Kohn // June 30th 2015

Thanks for the kind words and great comment, Bill.

I always believed that CTR, or click data in general, was used to some extent. This is Google, the most data-intensive company on the planet! How could they not? But there was always something or someone saying it wasn’t possible.

The rank modifier diagram made a lot of sense to me, though, as you and Mark have pointed out, not all SERPs would be modified. But that would make sense too: use the click evidence to modify rank only when that evidence rises to a level of confidence that makes it clear the rank modification is legit.

I’m not sure how to interpret Gary’s statements. I like Gary a lot but perhaps it’s that we’re asking the question incorrectly. If we asked ‘Is click data used to modify rank?’ we might get a different answer.

Overall, when you put all the pieces together, it’s difficult for me to believe otherwise. I’m guessing I have the specifics of how click data is used wrong but the general concept right.

Buildrr // June 30th 2015

I don’t know why this is even an argument. I didn’t make it through the whole article to know if this was mentioned, but when I read that you thought it would be hard for Google to track CTR, I just laughed. Have you checked out Google Webmaster Tools? The number of clicks is literally the first thing they show. If anything, I think they’re telling you that it’s uber important.

AJ Kohn // June 30th 2015

Buildrr,

Thank you for your comment, though I don’t agree with your logic. The fact that CTR is tracked and reported by Google within Google Webmaster Tools does not mean that it is then automatically a ranking factor.

By that measure, any metric within Google Webmaster Tools would impact rank, and, taken further to other Google products, something like bounce rate would be a ranking factor (which it is not).

What all of these things show is that Google uses click data where the CTR is the entry into that environment.

Jay // July 06th 2015

Hey AJ,

Great work as always.

In addition to messing around with titles and metas, running paid search ads could also improve organic CTR. I feel like everybody always says combining PPC and organic helps with organic CTR. There’s a zillion blog posts about it already. Here’s an old SEER one if anybody cares: http://www.seerinteractive.com/blog/part-one-adwords-paid-organic-report-results/

But improving CTR for its own sake would be missing the point. (Not that you said that, just that’s generally how most SEOs treat anything that’s a ranking signal.) I always work on improving it simply because more clicks lead to more visits, links, sign ups, sales, etc.

Kate // July 08th 2015

Though I don’t agree with some of your points, it’s still a great article. People have their own ideas and I totally understand you. I think Google has its own tools to rank a specific website. I agree with Jay that in order to improve your ranking signal, you have to invite people to click the links. With this strategy, more people will visit your website, and of course you’ll get more sales.

Collin Davis // July 09th 2015

Quality Score in AdWords involves CTR as one of its factors, and if Google uses it for PPC, I think they should definitely be using it as a ranking factor.

However, my assumption is that it won’t be as big a factor in organic search results as in paid, simply because people would then go a long way to game the system. Microworkers could be hired to click from across the globe.

I have seen quite a few case studies on this subject and even participated in some of them. The results vary. I remember one carried out by Rand Fishkin that did show an improvement in ranking. But I feel that Google will never want to let the cat out of the bag. It’s just the same as when they say they don’t use your Google Analytics data to determine your rank in search results.

They would be foolish not to use that data.

Salman Aslam // July 15th 2015

I personally believe it is a tiny part of the 200 or so ranking factors, so it does count. Google is getting more data than ever from its browser, smartphones and even Chromecasts, and it is putting that data to use, but it is still not significant.

Theodore Nwangene // July 25th 2015

What an awesome article AJ.

Seriously, I don’t believe that click-through rate is a ranking signal. The number of clicks you get on your webpage does not equate to the quality of your information, which is the most important factor.

You might be getting a poor CTR while your information is of higher quality, so for me, I don’t think it matters much.

The most important factor which we all know is how good your information is.

Thanks for sharing

AJ Kohn // August 13th 2015

Theodore,

I’m confused. Is this post awesome or do you disagree with everything in it?

And actually, it doesn’t matter how ‘good your information is’ if no one can find it or if it’s unreadable. You seem to be arguing that it doesn’t matter how people react to content as long as you subjectively believe it is of ‘higher quality’.

Mat // August 25th 2015

I’m in the “not a ranking signal” camp too.

Not to say this isn’t an important subject, but not for RANKING.

I’ll spare you a long argument and keep mine simple: CTR is important because it helps you get more traffic!

So should you focus on it as an SEO? Yes… because as an SEO you’re trying to drive more traffic, not higher rankings 🙂

AJ Kohn // December 21st 2015

Okay Mat, so I suppose ranking second for a term rather than third wouldn’t give you more traffic?

rakhi // September 28th 2015

@AJ KOHN

The pleasure is all mine. Glad to supplement your stellar analysis with the thought that it is likely worth looking through more of Hyung-Jin Kim’s patents. They appear to offer some fascinating insights.

regards

rakhi

Ross Barefoot // September 29th 2015

Belated thanks for this post AJ. Very good exposition on the topic.

I tend to agree with you that Google would not ignore user feedback in ranking results.

I remember a vid that I’ve watched many times featuring Hal Varian, chief economist with Google, explaining how Quality Score works. Watch this clip and tell me that they would not use CTR in evaluating result quality: https://youtu.be/qwuUe5kq_O8?t=2m45s

AJ Kohn // December 21st 2015

Ross,

Thanks for your comment and apologies for my belated reply. It’s funny, I keep telling myself that I’m going to update this to include a ‘they use it for paid search’ section. If I do get around to it I’ll probably use that video you reference. Just more signs pointing to the use of click data to inform rankings.

Bill Scully // August 24th 2016

Hi AJ,

I just started reading your blog and can’t get over the effort you put in and the value you provide. Thanks!

I think we can all agree that in 2016 CTR does provide signals to Google. I can’t find any information on click-through rate being a direct part of Google’s RankBrain, but given their experience using Quality Score to rank paid ads, it would make sense to use CTR in organic as well. Besides, what other quality signals could they possibly get from the results page to determine whether it’s a good result?

Regards,

Bill

AJ Kohn // August 29th 2016

Thanks for the kind words, Bill.

I’m relatively certain that click signals (a combination of CTR and long clicks) are used to better rank results. Is that a part of RankBrain? I don’t know. It’s hard to tell.

But this excerpt from a recent article on machine learning certainly piqued my interest.

“As measured by whether the user clicks on it” seems to be a pretty clear form of using click signals.
