Google has a Heisenberg problem. In fact, all search engine algorithms likely have this problem.

The Heisenberg Uncertainty Principle

What is the Heisenberg Uncertainty Principle? The scientific version goes something like this.

Measuring one property of a particle precisely, such as its position, causes its paired property, its momentum (mass times velocity), to blur. This is not due to imprecise measurements or inadequate technology. The blurring is a fundamental property of nature.
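
For reference, the standard textbook statement of the principle concerns one specific pair of properties, position and momentum. The symbols below are the usual physics notation, not anything specific to this post: σ_x is the uncertainty in position, σ_p is the uncertainty in momentum, and ħ is the reduced Planck constant.

$$
\sigma_x \, \sigma_p \;\ge\; \frac{\hbar}{2}
$$

The product of the two uncertainties can never fall below a fixed floor, so squeezing one of them forces the other to grow.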

The quantum mechanics behind the Heisenberg Uncertainty Principle are hard to follow. That’s probably an understatement. It is fascinating to read about the verbal jousting between Heisenberg, Schrödinger and Einstein on the topic. (I’m envisioning what the discussion might look like as a series of Tweets.)

Yet, some of the mainstream interpretations you get from the Heisenberg Uncertainty Principle are “the very act of measuring something changes it” and, by proxy, that “the observer becomes part of the observed system”.

These two interpretations can be applied to Google’s observation of the Internet.

Google Changes the System

Not only does Google observe and measure the Internet, they communicate about what they’re measuring. Perhaps quantum theorists (and those looking at comments on YouTube) would disagree with me, but the difference is also that the Internet is made up of real sentience. Because of this, the reaction to observation and measurement may be magnified and cause more ‘blur’.

In ancient SEO times, meta keywords were used in the algorithm. But the measurement of that signal caused a fundamental change in the use of meta keywords. This is a bit different from the true version of the Heisenberg Uncertainty Principle, where measuring one quantity causes another to blur. In this case, it’s the measurement of the signal that causes the signal itself, and the system, to blur.

Heisenberg and the Link Graph

Today the algorithm relies heavily on the link graph. Trust and authority are assigned based on the quality, quantity, location and diversity of links between sites. Google has been observing and measuring this for some time. The result? The act of linking has blurred.

The very act of measuring the link graph has changed the link graph.

In an unmeasured system, links might still be created organically and for the sole purpose of attribution or navigation. But the measured system has reacted, and many links are no longer created organically. The purpose of a link may be, in fact, to ensure measurement by the observer.

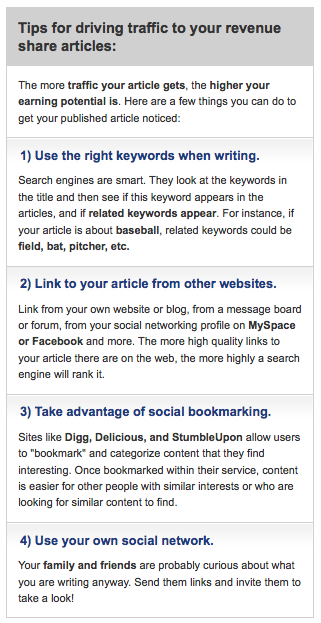

Nowhere is this clearer than in Demand Media’s tips for driving traffic.

Clearly, the observer has become part of the observed system.

Social Search Won’t Help

There are those who believe that moving from document based measurement to people based measurement will solve algorithmic problems. I disagree.

In fact, the people web (aka social search) might be more prone to blur than the document web. Documents can’t, in and of themselves, alter their behavior. The raft of content produced won’t simply change on its own; people have to do that. And that’s precisely the problem with a people based algorithm.

Think links, social mentions and other Internet gestures are perverted by measurement now? Just think how they’d change if the measurement really were on people. The innate behavior of people on the web would change, as would their relation to documents. Do I have to mention Dr. House’s favorite mantra?

No, social search won’t help. Is it another signal? Sure. But it’s a signal that seems destined to produce a higher amount of blur.

Does Google Measure Blur?

Does Google have an attribute (meta data) attached to a signal that determines the rate or velocity of blur? I have no idea. But those folks at Google are pretty dang smart. And clearly something like that is going on since signals have gone in and out of favor over time.

At SMX Advanced 2010, Matt Cutts made it clear that the May Day algorithm change was made, primarily, to combat content farms. These content farms are a form of blur. The question is: what type of signals did they trace the blur back to? Were they content based signals (e.g., quality of content based on advanced natural language processing) or link based signals?

Does Google realize they are, inadvertently, part of the problem? Aaron Wall believes it’s more intentional than inadvertent in his excellent How To Fix The Broken Link Graph post.

Should Google Shut Up?

Google has always been somewhat opaque in how it discusses the algorithm and the signals that comprise it. However, they have been trying to be better communicators over the last few years. Numerous blogs, videos and Webmaster Central tools all show a desire to give people better guidance.

You’ll still run into stonewalls during panel Q&A, but more information seems to be flowing out of the Googleplex. On the one hand, I very much appreciate that, but a part of me wonders if it’s a good thing.

In this case, the observer is clearly acting on the system.

Should Google tell us meta keywords are really dead? Or that they don’t process Title attributes? Or that domain diversity is really important? Sure, we’ll still have those who postulate about the ranking factors (thank you SEOMoz), but there would be a lot less consensus. It might produce less homogeneity around SEO practices, or in other words, the blur of other signals might lessen if the confidence in signal influence weren’t as clear.

It’s not that the algorithm would change, but perhaps the velocity at which a signal blurs beyond usefulness would slow.

The Solution

Well, there really isn’t a solution. A search engine must observe and measure the Internet ecosystem. Acknowledging that they’re part of the system and working on ways to minimize their disturbance of the system would be a good start. Hey, perhaps Google already does this.

The number of signals (more than 200) may be a reaction to the blur produced by their measurement. More signals mean a distribution of blur? But I somehow doubt it’s as easy as a checks and balances system.

Google could maintain very different algorithms – 2 or even 3 – and randomly present them via their numerous data centers. However, they’d all have to provide a good user experience and it’s been difficult for Google to maintain just the one thus far.
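
To make the idea of rotating algorithms concrete, here is a minimal sketch in Python. Everything in it is hypothetical and invented for illustration: the documents, the two signals (`links` and `content`), the three ranking functions, and the data center names. It says nothing about how Google actually works; it only shows what randomly assigning one of several rankers to each data center could look like.

```python
import random

# Hypothetical documents with two made-up signals (assumptions, not real data).
DOCS = [
    {"url": "a.example", "links": 90, "content": 0.2},
    {"url": "b.example", "links": 10, "content": 0.9},
    {"url": "c.example", "links": 50, "content": 0.6},
]


def rank_by_links(docs):
    """Order documents by inbound link count, highest first."""
    ordered = sorted(docs, key=lambda d: d["links"], reverse=True)
    return [d["url"] for d in ordered]


def rank_by_content(docs):
    """Order documents by a made-up content quality score, highest first."""
    ordered = sorted(docs, key=lambda d: d["content"], reverse=True)
    return [d["url"] for d in ordered]


def rank_blended(docs):
    """Order documents by an equal blend of normalized links and content."""
    max_links = max(d["links"] for d in docs)

    def score(d):
        return 0.5 * d["links"] / max_links + 0.5 * d["content"]

    ordered = sorted(docs, key=score, reverse=True)
    return [d["url"] for d in ordered]


ALGORITHMS = [rank_by_links, rank_by_content, rank_blended]


def assign_algorithms(data_centers, rng):
    """Randomly pick one ranking algorithm for each data center."""
    return {dc: rng.choice(ALGORITHMS) for dc in data_centers}


# A seeded generator keeps the demo repeatable; the seed value is arbitrary.
assignment = assign_algorithms(["dc-1", "dc-2", "dc-3", "dc-4"], random.Random(2010))
for dc, algo in assignment.items():
    print(dc, algo.__name__, algo(DOCS))
```

Anyone trying to reverse engineer the ranking from outside would see different orderings depending on which data center answered, which is the point: the observer becomes harder to read. The catch from the paragraph above still applies, since every one of those rankers would have to produce results users are happy with.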

I don’t have the answer, but I know that the rate at which the system blurs as a result of Google’s observation is increasing. I believe Google must account for this as they refine the algorithm.

What do you think?

Comments About Google’s Heisenberg Problem

// 5 comments so far.

Micah // June 14th 2010

I would agree that Google’s ranking methods prompt us to make changes to try and optimize our rank based on those methods. However, one positive out of all of this is that Google’s methods get progressively harder to game and they generally encourage better content. The tactics of writing good content that is accessible and linked to from other content produce a far more satisfying web experience than meta tag keyword stuffing and doorway pages. I think that is why Google has become more open about how they calculate rank – producing content to appeal to Google generally leads to content that is more appealing for humans too.

aj // June 14th 2010

Thanks for the response Micah. I’d agree that producing content to appeal to Google generally leads to content that is more appealing to humans. Too often I hear that writing for the search engines is bad, but really it’s about writing more clear and concise content – and that helps both the search engine and human reader.

I’m not sure whether the methods are getting harder to game. I think the clear knowledge of signal weights may be making the playing field uneven. The link graph is broken and I don’t think Google has figured out a good way to fix it or find an alternate way to determine real trust and authority. They’re clearly concerned about finding a proxy for user experience so they don’t have to rely so heavily on links, which are simply not generated the same way they were when Google wasn’t observing and measuring them so closely.

Duncan // June 28th 2010

I think that is one of the best metaphors about SEO that I have read.

Absolutely spot on and I don’t see how it changes, unless different players enter the space (such as social media, mobile marketing, etc.).

But as long as there is money at stake for search terms – and Google is the primary portal for accessing those businesses, products, etc. the players with the deepest pockets will end up winning. Which is partially what I think you are alluding to when you say the link graph is broken.

Sure we may be more motivated to write quality content – your post is an example of that – but most folks who have gone that far down the seo rabbit hole know why they are trying to write quality content and are likely already optimizing the other elements of the web page.

aj // June 29th 2010

Thank you for your comment and kind words.

And yes, the link graph is broken because those with deep pockets have found ways to create links with faux trust and authority. They have the resources to create a process and network that can manufacture links. Content farms aren’t dangerous because of the content per se, but because they are attached to link factories. Without the links, the content would have to compete on a level playing field, where they’d probably lose.

steveplunkett // January 03rd 2013

200? more like 20000

you almost had it…

fyi.. meta keywords are still used… not the way they used to be used, but the way they are now.

(nope, not explaining it just like the google minister of propaganda won’t either.. )

links are no longer the big signal.. it IS the documents. some of us pre-date google.

There are roughly about 30 patents (maybe more these are the one i can count quickly), these patents are simply classification of objects.. and the patterns they create in daily internet activity.

schema works.. better than links…

here is what happened.. enough idiots tried to game the system outside of Google Webmaster Guidelines and those idiots created patterns.. let’s pretend one of those signals was the nofollow tag on links…

(note: i built my first website for the university of Texas in 1995 i have yet to actually code a nofollow tag and i do code daily)

classification of my web footprint does NOT contain the nofollow attribute.

my footprint includes google webmaster tools and google analytics accounts.

These can all be under different email address for clients.. but at some point my personal document contains this information. none of these clients were penalized and their spammy links were cleared up, any error in sitemaps or robots.txt were fixed…

So my “document” has a pretty clean, credible footprint..

part of my job is to report spam on branded terms for fortune 5, 50. 500 etc..

i do this according to google guidelines.. for a long time.. i have a spam report extension on chrome, created by matt cutts at my request.. i use daily.. pretty sure Google knows who i am and what my footprint is..

Being aware of this allows me to “tamper” with my “document”. Most searchers are not aware and what they do online actually matches their preferences quite well.. which is why despite Bing having a huge share being verizon search provider.. people still like Google.. it works for them.. because of their “document”.

Good article.. thanks for making me think today =)
