The recent algorithm change by Google, dubbed the Farmer Update by Danny Sullivan, was “designed to reduce rankings for low-quality sites …”

The emphasis is mine. I think that emphasis is important because the Farmer Update was not a content-level algorithm change. Google did not suddenly get better at analyzing the actual quality of content. Instead, they changed the algorithm so that the sites they felt were “low-value add for users” or “sites that are just not very useful” were demoted.

Manual Versus Algorithmic

I’ve been asked a number of times whether this was done manually. The simple answer is no. If it were done manually it would have been a lot more precise. Google did not, for example, take a list of the top 1000 low-quality sites and demote them. That would have been akin to a simple penalty. If they had done this you wouldn’t find sites like Digital Inspiration getting caught in the crossfire.

The complex answer is sort of. Algorithms are built by people. The results generated reflect the types of data included, the weight given to each type of data and how that data is processed. People at Google decide what signals make up the algorithm, what weight each signal should carry and how those signals are processed. Changing any of these elements is a type of human or manual intervention.

Until the Google algorithm becomes self-aware, it remains the opinion of those who make up the search quality team.

Dialing For Domains

I absolutely believe Google when they say they didn’t manually target specific sites. Yet, they clearly had an idea of the sites they didn’t want to see at the top of the rankings.

The way to do this would be to analyze those sites and see what signals they had in common. You build a profile from those sites and try to reverse-engineer why they’re ranking so well. Then you look to see what combination of signals could be turned up or down to impact those sites.

Of course, the problem is that by turning the dials to demote these sites you also impact a whole lot of other sites in the process. The sites Google sought to demote do not have a unique fingerprint within the algorithm.

The result is a fair amount of collateral damage.
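To make the dial-turning concrete, here is a toy sketch in Python. It assumes, purely for illustration, that a site’s score is a weighted sum of three made-up signals (`links`, `page_volume`, `brand`). The sites, values and weights are all invented and say nothing about Google’s actual signals. Flipping the `page_volume` weight from a reward to a penalty is aimed at the two farm sites, but the forum shares their profile and goes down with them.

```python
# A toy model of "turning the dials": every site gets a score that is a
# weighted sum of the same signals. Signal names, values, and weights are
# all hypothetical; this is not Google's algorithm.

SITES = {
    # Sites the search quality team wants to demote.
    "farm-a.example": {"links": 0.9, "page_volume": 0.9, "brand": 0.2},
    "farm-b.example": {"links": 0.8, "page_volume": 0.9, "brand": 0.0},
    # A legitimate forum that happens to share the farms' profile.
    "forum.example": {"links": 0.7, "page_volume": 0.9, "brand": 0.3},
    # A thin, one-off site with very few pages.
    "thin.example": {"links": 0.6, "page_volume": 0.1, "brand": 0.1},
}


def score(signals: dict[str, float], weights: dict[str, float]) -> float:
    """Weighted sum of a site's signals."""
    return sum(weights[name] * value for name, value in signals.items())


def ranking(weights: dict[str, float]) -> list[str]:
    """Site names ordered from highest to lowest score."""
    return sorted(SITES, key=lambda site: score(SITES[site], weights), reverse=True)


before = {"links": 1.0, "page_volume": 0.5, "brand": 0.5}
# Turn the page_volume dial from a reward into a penalty to hit the farms.
after = {"links": 1.0, "page_volume": -0.5, "brand": 0.5}

if __name__ == "__main__":
    for site in SITES:
        old, new = score(SITES[site], before), score(SITES[site], after)
        print(f"{site:16} {old:.2f} -> {new:.2f}")
    print("before:", ranking(before))
    print("after: ", ranking(after))
```

With these invented numbers the forum, which nobody targeted, slips from second to third and loses a larger share of its score (about 69%) than farm-a.example does (about 62%), while the thin site with almost no pages jumps to first.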

Addition By Subtraction

The other thing to note about the technique Google used is the assumption that search quality would improve if these sites were demoted. Again, it’s not that other content has been deemed better. This was not an update that sought to identify and promote better content. Instead, Google seems to believe that search quality would improve simply by demoting sites with these qualities.

But did it really?

You can take a peek at what the results looked like before by visiting a handful of specific IP addresses instead of using the Google domain to perform your search. So, what about a mom who is looking for information on school bullying?

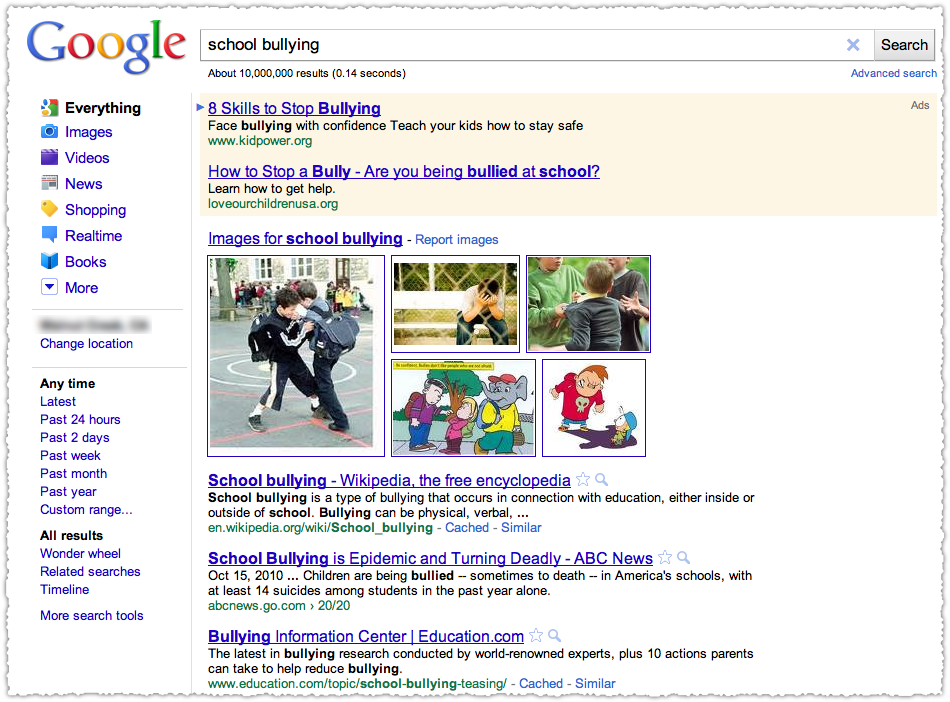

Here’s what she’ll see now.

Wikipedia is the top result for school bullying. Really? That’s the best we have on the subject? Next up is a 20/20 piece from ABC. It’s not a bad piece, but if I’m a mom researching this serious subject, do I appreciate, among other things, the three links to Charlie Sheen articles?

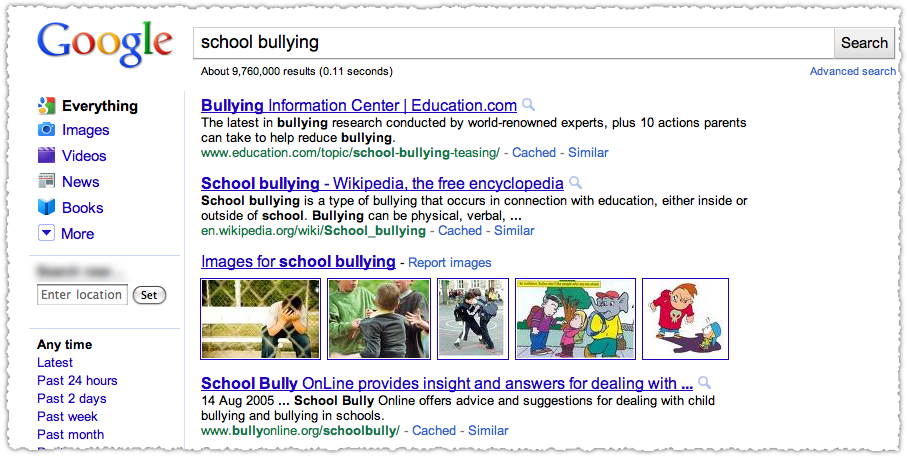

What was the top result before the Google Farmer Update?

The Bullying Information Center from Education.com was the top result. That’s right, Education.com was one of the larger sites caught up in this change according to the expanded Sistrix data. Is the user (that mom) better served?

This is the tip of the iceberg. In fact, it was the first query I tried, found simply by using SEMRush to get a list of top terms for Education.com.

Throwing The Baby Out With The Bath Water

The last problem with the site-level technique is its inability to identify the diamonds in the rough. A content site composed of thousands of independent authors may vary widely in content quality. A site-wide demotion applies the same standard to all content regardless of individual quality.

The Google Farmer Update treats great content the same way as lousy content.
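The page-versus-site distinction can be sketched the same way. This assumes, hypothetically, that a page’s final score is its own quality estimate multiplied by a site-level factor; `SITE_FACTOR`, `page_quality` and the URLs are invented for illustration. Because the factor is applied to the whole site, the great guide and the thin stub on the demoted site take exactly the same proportional hit.

```python
from dataclasses import dataclass


@dataclass
class Page:
    url: str
    site: str
    page_quality: float  # hypothetical per-page quality estimate, 0..1


# Hypothetical site-level multipliers; 1.0 means "not demoted".
SITE_FACTOR = {"ugc-site.example": 0.4, "other-site.example": 1.0}


def final_score(page: Page) -> float:
    """Page quality scaled by the factor of the site it lives on."""
    return page.page_quality * SITE_FACTOR.get(page.site, 1.0)


pages = [
    Page("ugc-site.example/great-guide", "ugc-site.example", 0.95),
    Page("ugc-site.example/thin-stub", "ugc-site.example", 0.20),
    Page("other-site.example/average-article", "other-site.example", 0.50),
]

# Highest final score first.
ranked = sorted(pages, key=final_score, reverse=True)

if __name__ == "__main__":
    for page in ranked:
        print(f"{final_score(page):.2f}  {page.url}")
```

Here the great guide (0.95 × 0.4 = 0.38) ends up below an average article from an undemoted site (0.50), which is the diamond-in-the-rough problem in miniature.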

Shouldn’t we want the best content, regardless of source?

The Google Opinion

I’ve been vocal about how I think it’s presumptuous to believe that Google understands our collective definition of quality. Even in the announcement I find their definition of high-quality interesting.

… sites with original content and information such as research, in-depth reports, thoughtful analysis and so on.

First, is this really what took the place of those ‘low-quality’ sites? As I’ve shown, that’s not always the case.

But more to the point, do we all want research and in-depth reports? In the age of 140 characters and TL;DR, where time is of the essence and attention is scarce, is the everyman looking for these things?

And what makes an analysis thoughtful, or thoughtful enough? Is my analysis here thoughtful? I hope so, but I’m sure some people won’t agree.

Don’t Hate The Player, Hate The Game

Let me tell you a secret: I personally agree with what Google is trying to accomplish. There are sites I absolutely detest that, if I were king of the Internet, would be removed from all search results. I wish people wouldn’t fall over themselves for the cliché but effective ‘list’ blog post. I want people to truly read, word for word, instead of scanning, and I want to believe that people won’t see a 1,000-word blog post (like this one) as a chore.

I wish more people took the time (particularly in this industry) to download a research paper on information retrieval and actually read it. But guess what? That’s not how it works. Maybe that’s how I, and Google, want it to work. But that’s not reality. You know it and I know it.

So while I personally get where Google is coming from, I can’t endorse or support it.

From Library To Bookstore

The reason why I have been such a fan of Google is that they were close to neutral. The data would lead them to the right result. They were the library, allowing users to query and pick out what they wanted from a collection of relevant material. Google was the virtual catalog, an online Dewey Decimal System.

Today they seem more like an independent bookstore, putting David Mitchell, Philip Roth and Margaret Atwood out front while burying Stuart Woods and Danielle Steel in the stacks. Would you like an expensive ad latte with that?

Different Is Not Better

Did search quality get better? I tend to think not. It’s certainly different, but let’s not confuse those two concepts.

By subjectively targeting a class of sites Google inadvertently demoted good sites along with bad sites, good content along with bad content.

We know 6 minus 3 doesn’t equal 10.


Comments About Farmer Update About Sites Not Content


Dani Horowitz // February 28th 2011

My site, DaniWeb, fared VERY poorly in the Google Farmer Update. We literally lost 70% of our US traffic overnight, and are now in a desperate situation. However, we’re an online discussion community catering to IT professionals. I am not sure how we got caught in the crossfire, or what we can do to crawl our way out of it. At first I thought it was because we get a lot of long-tail traffic. Then I thought maybe it was because there are a lot of misspellings (as is frequently the case with a site that’s almost entirely user-generated content). However, a lot of things we used to rank #1 for have been replaced with threads from other forums that don’t necessarily offer any better information. What did we do wrong that they did right?

aj // February 28th 2011

Dani, I’m very sorry to hear that your site got dinged in the Google Farmer update. I understand and really do empathize with your situation.

The fact is you may have done nothing wrong. As I’ve shown, the change only sought to demote a certain class of sites Google thought to be ‘low-quality’. Yet they wanted to do it algorithmically instead of manually. That means that if you shared enough traits (based on how Google’s individual signals are weighted) you might wind up getting demoted.

My analysis indicates that exact match keyword domains now carry more weight, as do prime TLDs such as .gov and .org. In addition, brands got some sort of boost. Whether that’s through a trust and authority mechanism (similar to the Vince Change) or more reliance on brand mentions is unclear.

A few other theories that I can’t confirm but am seeing anecdotally are that social signals may have gained in importance, sites with fewer links per page may be in vogue and the geographic mix of your backlink profile may be important.

Would you be open to posting a sample of terms for which you once ranked #1? I’d be interested in looking at the sites who now outrank you.

Once again, I’m truly sorry.

Jeremy Post // February 28th 2011

Hey Google, can you please turn my client’s site back up to 11?

In all seriousness, the QC on this algo update was lacking — and that’s being generous. Google’s impetus behind the change feels a lot like a reactionary PR stunt, thinly veiled as user benefit.

In analyzing the change, we’ve looked at before/after SERPs for hundreds of queries, and — personal interest aside — we’ve seen just as many high-quality/value sites demoted as the content farms Google was trying to target. Baby with the bath water, indeed.

All of this brings up a bigger issue: The total lack of transparency in an organization which controls 65-75% of all search traffic in the US. From Google’s Quality Score algo in PPC, to their organic SERP ranking algo — businesses and users are at the mercy of a single entity which controls the flow of information and owns the rules of the game. Should online search be considered a utility, thereby opening the door to regulation and greater transparency?

aj // February 28th 2011

Thanks for weighing in Jeremy.

There was mention that the Google Farmer Update was tested before being launched. I have to believe they meant they tested it internally and not externally since even a small test would have raised alarm bells with many sites. They seemed happy enough to compare their dataset to the Personal Blocklist Chrome extension. Never mind that Chrome users are a biased sample to begin with or that the number of users they collected data from was still small relative to the total user base.

The transparency issue is a thorny one. I’d like to avoid making search a utility and going down the road of regulation. A business should be able to operate and build its product on its own terms. Google’s search results are their opinion of the most relevant search results. They are entitled to that opinion. I’m also entitled to say I think they’re wrong.

But one of the problems is that many people don’t even understand that there’s a real alternative. If this were car insurance and they raised your rates, you would try out a new carrier and see how it worked out. Yet many search users only know that they search through their toolbar and it goes to Google. The Internet is a mystery to a lot of people, and that means there’s a lot of inertia.

Ellen // February 28th 2011

I was just talking to yet another client today who was hit super hard by this latest algo update. I really don’t understand it. One site I know of that was moving to the top of search results was http://www.ZippyCart.com. They offer thousands of pages of unique, relevant, and topical information for the world of ecommerce. They also provide completely unbiased reviews of ecommerce software. All of the other major sites in the niche take payment to rank solutions and let bribes mask their opinions when writing reviews. Yet they all got a bump in SERPs while ZippyCart has been jumping around from page 1 to page 5 out of nowhere. It’s sad. The sites now ranking for the head terms that Zippy once owned are either content farms (yup, now content farms are ranking) or sites that haven’t been updated in years. It’s a huge disservice to the ecommerce community and sad to see for a company that works as hard as Zippy. And they are just one of many clients that took similar hits.

aj // February 28th 2011

Thanks for your comment and insight Ellen.

I share your dismay regarding the demotion of sites that provide unbiased reviews. I too have noticed a lot of ‘made-for-Amazon’ sites, or clearly biased reviews ranking above sites that could have provided more balanced information. Is the user better served today?

I still believe Google felt they were making things better, but they seem to have broken as many things as they’ve fixed in doing so.

Master Dayton // February 28th 2011

It’s definitely been interesting watching this one unfold. What’s stunned me is that with other announced changes I could see some searches that seemed cleaned up, but this time the exact opposite has happened, in my opinion. Many terms I’ve searched for or looked up bring back four stores (Sears, Amazon, Best Buy, JCPenney, etc.), Wikipedia, or some really terrible websites that don’t offer any value. If I wanted to buy something, I’d go straight to Amazon. I don’t need the top of half my Google searches to be stores I can drive down the street to. I’m hoping the customary tweaking that often follows a big change happens here, too; otherwise I’ll be at a frustrated loss when it comes to finding good information.

aj // February 28th 2011

Master Dayton, thank you very much for your comment.

You hit the nail on the head with the idea that the same handful of retailers (and the ubiquitous Wikipedia) are being returned for many, many transactional terms. Google clearly thinks that users want to go directly to the retailers they trust. Whether it was intentional or accidental, Google removed a lot of review and shopping aggregators.

Your comment shows a different version of how search is used. Today you can simply open a new tab and go to those established brands, or perform a navigational search and get there. Either way, you’re looking to Google to find a better mix of results, the stuff you might miss. That’s a really interesting use case that I haven’t really explored but instantly feels authentic and valid.

I too hope that there’s some amount of tweaking, but with such a huge corpus of queries being impacted I’m not sure how easy it will be to make a course correction.

Ed // March 01st 2011

This was an excellent analysis. I’d like to add my thoughts on how Google is making the determination, and why this approach is flawed.

After looking closely at about 20 sites affected by this change, I believe Google is determining the “quality” of a site by comparing the total number of pages on a site to the aggregate link profile of that site. They may be considering both the overall strength of the link profile as well as things like how many sub-pages have direct, quality links, etc.

If a site has too many pages of content that don’t have enough links, the entire site gets a penalty. This is done under the theory that a “good” site’s pages should all be highly relevant and link worthy.

This explains quite easily why small sites (sites with a small number of pages) experienced only positive outcomes from this algorithm change, while some larger sites completely collapsed. The larger a site is, the more high quality links it needs coming in in order to keep it from sinking.

Of course, some large sites survived just fine, e.g. Wikipedia and eHow. These sites survived because they actually have an overwhelmingly high number of links coming into not only the core domains but also individual pages. Even Google was probably surprised at just how hard it is to sink eHow algorithmically. It turns out that Demand Media knows what they are doing. And, with the boost Google just gave them (+20%), now they’ve got plenty of time to begin working on that critical backlink structure they need to stay ahead of the next wave.

On the surface, this approach appears plausible since Google is going after content farms. While it is easy to spin 4000 new pages of content every day, it is far harder to get 4000 “meaningful” links into those articles. Hence, by demoting sites with lots of content but few links, it seems like results will be improved.
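The hypothesis above can be written down as a simple rule, sketched here in Python. The 20% threshold, the 0.5 demotion factor and the notion of a page with a “quality” inbound link are all assumptions made for illustration; nothing here is confirmed by Google.

```python
from dataclasses import dataclass

# Hypothetical parameters: neither value comes from Google.
LINKED_SHARE_THRESHOLD = 0.2  # minimum share of pages with quality inbound links
DEMOTION_FACTOR = 0.5         # multiplier applied to every page on a failing site


@dataclass
class Site:
    name: str
    total_pages: int
    pages_with_quality_links: int  # pages with at least one "quality" inbound link


def linked_share(site: Site) -> float:
    """Fraction of a site's pages that attract quality inbound links."""
    if site.total_pages == 0:
        return 0.0
    return site.pages_with_quality_links / site.total_pages


def site_factor(site: Site) -> float:
    """Site-wide multiplier: demote everything if too few pages earn links."""
    return DEMOTION_FACTOR if linked_share(site) < LINKED_SHARE_THRESHOLD else 1.0


if __name__ == "__main__":
    examples = [
        Site("small-site.example", total_pages=40, pages_with_quality_links=15),
        Site("small-site.example (after adding 100 unlinked pages)", 140, 15),
        Site("big-ugc-site.example", total_pages=200_000, pages_with_quality_links=12_000),
        Site("heavily-linked-giant.example", total_pages=1_000_000, pages_with_quality_links=400_000),
    ]
    for site in examples:
        print(f"{site.name}: share={linked_share(site):.3f}, factor={site_factor(site)}")
```

Under these assumptions, a 40-page site with 15 linked pages clears the bar (37.5%), but adding 100 pages that attract no links drops it to about 10.7% and demotes the whole site, which is exactly the chilling effect on new content that this theory predicts.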

Google used to be of the mindset:

“Upload 1,000,000 pages and we’ll find the good ones and promote them.”

Now Google is saying:

“If you upload too many pages that don’t get links, we’ll assume you are low quality and demote your entire site, regardless of the quality of certain individual pages.”

If Google were to think long and hard about this approach, they would easily see the flaws:

1) This discourages sites from creating new and useful content for readers unless they believe they can get sufficient meaningful links into that content. As a site owner, you’ll be much more scared to write new articles on your site if you fear that failing to get links to those articles will tank the rest of your site. Essentially this algorithm DISCOURAGES content creation unless the webmaster can be sure the content will garner links.

2) Some niches are much harder to gain links in than others. For example, “How-to” home improvement articles published by an independent web site are not very likely to get links from other sites that write about home improvement. All the major sites that write about home improvement with strong link profiles (e.g. DIYNetwork, HGTV) are branded sites that generally do not link-out to independent content.

They attract links from news sites because they are popular and on TV, and their content is actually usually very good. It is virtually impossible for a credible independent author to build link equity in that domain without an enormous amount of self promotion. So, if that author produces 1000 pages of interesting and helpful content, but only gets a handful of links into some of their “best” material, even those great pages will never rank in the new algorithm because Google punishes them for writing so much other content.

3) This algorithm change hurts User-Generated Content (UGC) sites the most, because those sites are the most likely to have a mix of good and poor quality articles and are extremely difficult to police.

4) This algorithm change encourages the development of small one-off search-oriented sites (e.g. http://www.basementinsulation.net), which provide little value to the reader but do not suffer from the “big site” penalty imposed by the latest algorithm.

There is a lot of chatter out on the net about various ways Google is determining what sites to rank higher and lower. While G’s algorithm is indeed very complex and takes into account thousands of factors, I for one believe that this particular “Farmer” update wasn’t complex at all… It may be possible that other single-page factors are being evaluated besides link strength to come up with a site quality profile, but in my investigation, links and site size are the only universally-consistent metric that explains why certain sites tanked and others didn’t.

aj // March 03rd 2011

Ed,

Thank you very much for the great analysis and insight. I found myself nodding along to your narrative. Your theory definitely makes sense and the implications are, indeed, scary.

If Google did do something so … simple, it would be disturbing. I’ve said a number of times that the problem with content farms isn’t the content, it’s the links. Demand Media knows this. They tell their writers to link to their own articles.

I truly hope (and in the end believe) that there was more to the change than this. Google knows the link graph is in disrepair and, more than anyone, knows the size and scope of link abuse. To use that hobbled signal as the primary way to effect this change and jeopardize future content creation would be … obtuse. Say what you will about Google, I don’t think they’re that.

Perhaps you’re right, but I’m hoping otherwise.

PJP // March 03rd 2011

Completely agree AJ, though I’m biased since I run the online marketing for education.com.

We’d definitely fall into the large-number-of-indexed-pages category, but that’s mostly a function of covering such a wide area. Ed’s explanation of a shift based on the number of indexed pages versus linked-to pages makes a lot of sense. It would be hard for an algorithm to determine the quality of an article simply by going through its text. Maybe it’s a combination of that along with discounting the link equity passed through internal links.

I saw the Sistrix list of 100 domains and we weren’t on it. Did you see an even more expanded list? I’ll also be at SMX West next week. If you’ll be around, drop me a line.

aj // March 03rd 2011

Thanks for your contribution PJP. I’m not sure Ed’s explanation is the sole signal in the Farmer Update but it’s an interesting data point and certainly could have been one of the signals used.

There is simply no doubt at this point that this was a domain level change and not a page level change. Google did not suddenly get better at determining the specific quality of an article.

You can actually request an expanded set of data from Sistrix, which includes 331 domains. I’ll email the list to you and hope to meet you at SMX West.

LyricsExpress // March 04th 2011

OH dear Gawd – THANK YOU for this post.

I’ve been at Google Webmasters – where it’s supposed to be webmasters helping other webmasters understand what is going on – instead it’s a slam fest by the “BEAUTIFUL PEOPLE” that have taken up residence there.

When our site disappeared 2 weeks ago (completely taken out of the search engine) I knew something was wrong. Thought originally it was a competitor asking for our site to be removed as spam… At the time it was keyword loaded/stuffed/whatever.

But it had been ranking in the top 3 spots for almost 2 months Jan / Feb.

And it’s a flash site.

So – I try to figure out what is wrong. I’m pretty good at SEO – not the top top top – but pretty good at content writing – better than the average joe… so – when we tanked completely when we were included again – it was a shock.

I asked why – of course after being dissed – and only one person *Pelagic* taking the time to help point out bad issues – which I fixed – we fell EVEN FURTHER behind.

I was like – what in the world? 60 thousand pages back for what we were in the top for when I was stuffing the site?

I don’t think so.

So – I still don’t know why….

But – I noted that all the companies that do what our company does – and they pay for adwords – (in the millions of dollars – we’re electrical contractors) they are now top 10 – 20… and so on…

So – that gives me pause… Instead of being the public library – I think you’re dead on – they are now the Barnes and Noble – and maybe like Borders they too will start to fall.

It’s a sad thing for me – because I worked hard on the site.

And can you believe that the people who are the “dahlings” of webmaster central who treat every person asking a question like a moron… (must be a special community there… treating every question as if the person were a criminal – maybe folks just don’t know…. )

Can you believe…. that they had the audacity to say Google doesn’t read flash?

Hellllllllllllllllllll0000000000000000ooooooo people….

Did they miss the part where I said our site (which was the same flash site) was ranked in the top and now is missing from the top 10000000?

Did they miss the part that Google had us listed for months?

Did they miss the part that we were completely wiped out?

So obviously Google reads flash enough – unless they turned into Steve Jobs and decided it was just too much work and they couldn’t be bothered.

I’m irked – I’m really confused – and while I don’t think my site is the best site in the world – I certainly know it was good enough.

Grrrrrr.

You’re dead on.

I think Google is in the process of selling out. Or they already did.

*like I said – we’re electrical contractors – so when the service companies who have hundreds of electrical contractors and the same template for every one of them – all using the same keywords – etc. etc. ad infinitum – are ranking in the top – because well – because I believe they pay millions of dollars (one of those companies admitted they did exactly that to me… because they try to get us to buy advertising space on them, etc.) to adwords…. well.. That makes me hmmmm and hmmmm even more.

And well – I could be exactly wrong – but the Google Wolves seem to think it’s something else…

Maybe…

But… I — personally – think you’re right.

And what I find even more amusing is that we’re ranking just fine in other search engines.

I’m wondering – are we at the demise of the Google Roman Empire?

Grrrrrr. OK – will you take my soapbox away… I think I’m starting to froth at the mouth…. *grin*

Peter // March 04th 2011

“If Google did do something so … simple, it would be disturbing. I’ve said a number of times that the problem with content farms isn’t the content, it’s the links. Demand Media knows this. They tell their writers to link to their own articles.”

I don’t agree with you and ED.

Associated Content also gives their writers financial motivation to build links to the articles they’ve written (by paying them based on # page impressions), and yet, AC got hit hard by this update. So it’s definitely not a matter of # of pages vs. # of links.

Daniel // March 10th 2011

One website of many: http://www.cannes-tour.com/ got hit and lost all its traffic from Google. The problem is that about €7,000 to €9,000 was invested in creating unique, quality content, using professional editors and hundreds of hours of research. The content was written in Romanian and then translated by native professional translators. What is wrong with Google search is this: if you use the local google.ro, you get a very incomplete Romanian Wikipedia article about Cannes (with maybe less than 1% of the useful information about the city that this online guide has), another Wikipedia article about the Cannes festival, another Wikipedia article in English (which doesn’t concern me; I want to read in Romanian), and another from wiki.travel in English (again, it doesn’t concern me; I want to read in Romanian). I compared the information on cannes-tour.com with TripAdvisor: when TripAdvisor covers what to do and visit, most attractions get only one simple paragraph, and some have no text at all. So it is clear that Google is prioritizing big names in the search results and giving them weight regardless of the relevance, quality and quantity of their information.

If I want something more detailed from Wikipedia, in some cases I can just search for wikipedia “keyword” myself. I don’t need Google to give me, out of the top 10 results, 4 links to Wikipedia (2 in English, which is not my mother tongue) and others from TripAdvisor, again in English, so that 80% of the 10 results I get are in English. Everything just got worse…

Daniel // March 10th 2011

By the way, I’m really sorry for DaniWeb. I know the website: I remember the interface, the colors, the brand, and the fact that I once searched Google for something about coding problems in JavaScript or PHP (I don’t remember what) and found what I was looking for there, on DaniWeb. So for me, as a user, it is very clear that what Google is doing now is wrong, very wrong.

Matthew Pollock // March 11th 2011

Thanks! This is the first post by an SEO that bears out my ‘first shot theory’. Our site http://www.globalpropertyguide.com suffered a 50% decline in U.S. Google traffic. Before this, I’d found mostly fatuous commentary, an egregious example being Vanessa Fox of Search Engine Land’s hymn of praise to Google as the defender of good content. I reproduce here, with your permission, my howl of pain in response to her irritating twaddle.

“The idea that ‘great content is the answer’ is, frankly, laughable, in our experience. Our site is 100% original, with very deeply researched content, recognized within our industry as a leader, and is frequently updated. Central Banks, Multilateral Institutions, and academic researchers write to us asking for data (yesterday I had the Chief Economist of the Czech Central Bank write to ask for our data). Our site looks clean and modern. Yet our US traffic is 50% down, across all keywords, new articles and old, more or less entirely undifferentiated.

Quote from an SEO I know here:

tim buenasuerte (name changed): some websites I handle don’t have original content

tim buenasuerte: in fact, we copied most of them.

tim buenasuerte: and we weren’t affected

So, I am sorry [Vanessa], but your article seems like complete nonsense to me.

My take on the Farmer Update:

Previously, there was a rule:

“Google does not rank web sites, they rank pages”

Not true any more – Matt Cutts has now said, somewhere – you want to remove low-quality pages, because they impact the whole site.

So Google is saying: “If you LOOK like a content farm, we WILL TREAT YOU as a content farm”.

We will rate the site as a whole.

Sites with diverse old content = content farm = BAD

Sites with diverse new content = news site = GOOD

Sites with Above Fold small ads = content farm = BAD

Sites with targeted keyword SEO = commercial site = OK

Sites with messy untargeted keyword SEO + old content = potential content farm = BAD

Why we got hit

1. Our classical SEO isn’t the greatest, so Google sees an enormous diversity of keywords when it looks at our site. Previously, we thought this was quite a good thing. We relied on the increasingly strong consensus – just write good content, don’t link exchange, don’t cheat, have good internal links, and make your site content visible to the bots.

2. We have a strong diversity of content within the one research category, because our research covers our subject from many angles.

3. We update frequently, but not enough to be considered a news site.

These factors, we believe, caused us to be hit by the Farmer Update: diversity of content, yet updates not frequent enough to be considered a news site. Plus we have some above-the-fold AdSense-type advertising, which we are removing.

That’s why we were hit. Not because of content or design.

What to do about it, is less clear.”

End of Quote

Prior to this update, we had 180,000 visits/month and a PageRank of 6. We have never done any link trades. People write to me to ask for link exchanges; I ignore them. I have always ignored them. So we are unlikely to have received links from ‘bad neighborhoods’.

One problem may be that our work gets copied. Everyone in the international property industry copies us, putting our information up for free, and then offering properties alongside. At first we sent ‘cease and desist’ letters, but as an international site we have no legal remedy. The copy-cats realize this very well. So they carry on copying. We stopped doing anything about it, relying on the Google promise that ‘you will never be penalized because others have copied your site’.

WebVantage Technologies // June 15th 2011

It’s really a very illustrative post. It explains almost everything about the different strategies used by Google.
