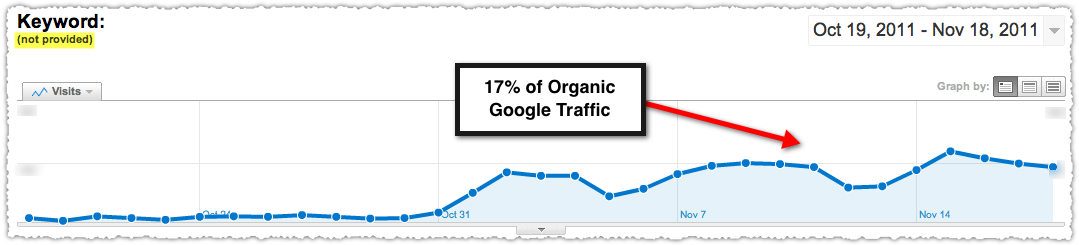

Have you noticed that direct traffic year over year is through the roof? Maybe you scratched your head, wrinkled your brow and chalked it up to better brand recognition. In reality, no such thing happened. What’s actually happening is that search traffic from iOS is being attributed to direct traffic instead.

Your organic search numbers are being mugged.

[Update] Frank Zimper notes that this problem also exists for those running Android 4.0 and higher. I’ve confirmed this via the same process you’ll read below. The only saving grace is that Android is usually a smaller traffic driver and the version migration is far more gradual. Yet, it’ll clearly continue to syphon search traffic off over time unless Google addresses this problem.

iOS 6 Search Theft

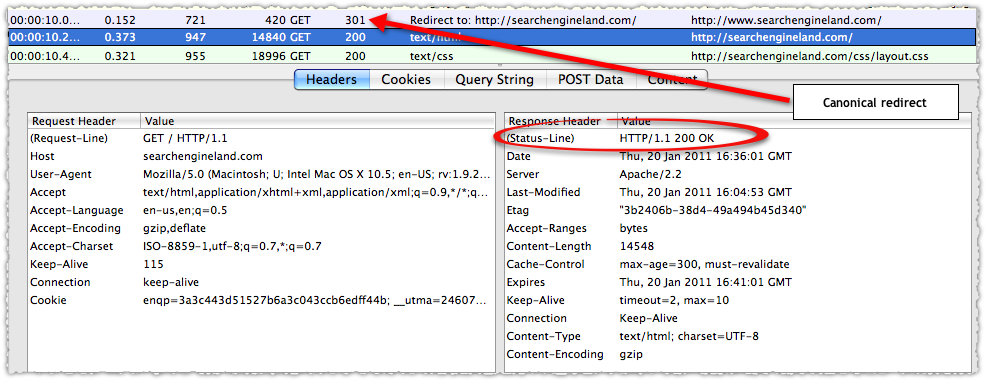

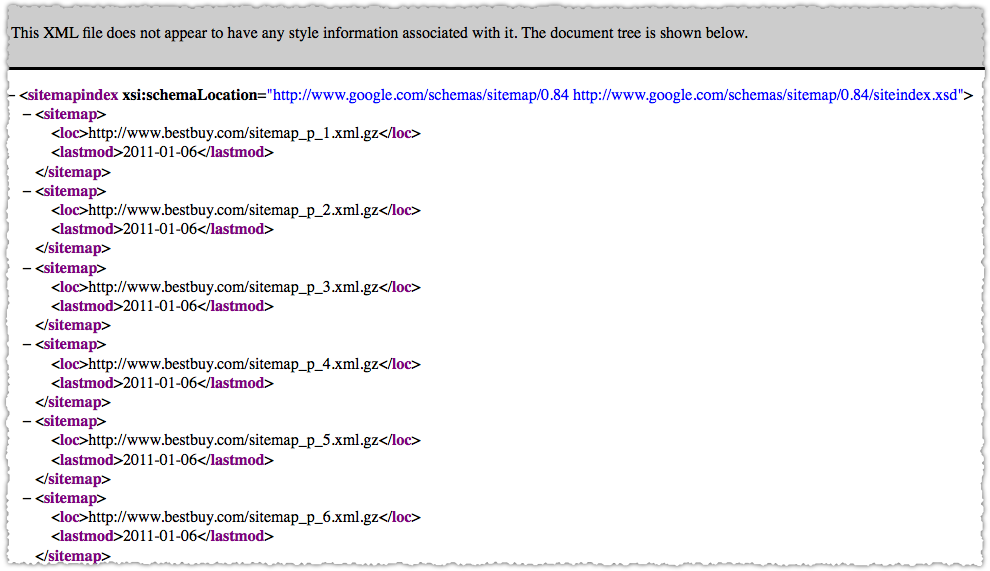

The reason these visits are being misattributed is a decision by Apple to move Safari search to secure search (SSL) in iOS 6. The result of this decision is that the referrer isn’t passed. In the absence of a referrer, Google Analytics defaults those visits to (none), which shows up in direct traffic.

> The web browser on iOS 6 switched to use SSL by default and our web servers don’t yet take that fact into account. Searching still works fine, but in some situations the HTTP referer header isn’t passed on to the destination page. We’re investigating different options to address this issue.

As Google investigates different options to address this we’re left dealing with a serious data problem. Personally, I think Google Analytics should have a message within the interface that warns people of this issue until it’s fixed.
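
To make the mechanism concrete, here’s a minimal sketch of how an analytics tool might bucket a visit by its referrer. This is not Google Analytics’ actual logic. The `classify_visit` function and the `SEARCH_ENGINE_HINTS` list are hypothetical names I’ve made up purely for illustration.

```python
from urllib.parse import urlparse

# Hypothetical, heavily simplified list of search engine host fragments.
SEARCH_ENGINE_HINTS = ("google.", "bing.", "yahoo.")


def classify_visit(referrer):
    """Return a (source, medium) pair for a visit based on its referrer."""
    if not referrer:
        # No referrer at all: there is nothing to attribute the visit to,
        # so it lands in direct traffic as (direct) / (none).
        return ("(direct)", "(none)")

    host = urlparse(referrer).hostname or ""
    if any(hint in host for hint in SEARCH_ENGINE_HINTS):
        return (host, "organic")
    return (host, "referral")


# A search click that keeps its referrer is counted as organic...
print(classify_visit("https://www.google.com/search?q=running+shoes"))
# ...while the same click with the referrer stripped looks like direct traffic.
print(classify_visit(""))
```

The point is simply that a search visit with no referrer is indistinguishable from someone typing in your URL.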

RKG did a nice job of tracking this and showing how to estimate the hidden search traffic. But for some reason this issue doesn’t seem to be getting as much traction as it should, so I wanted to demonstrate the problem and show exactly how you can fight back. Because it’s tough enough being an SEO.

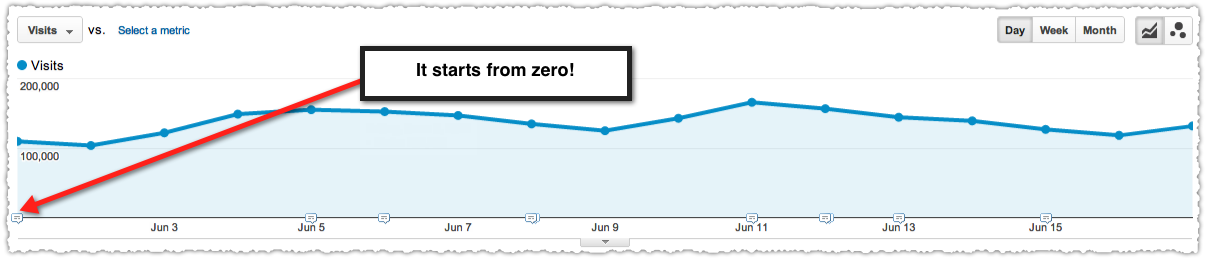

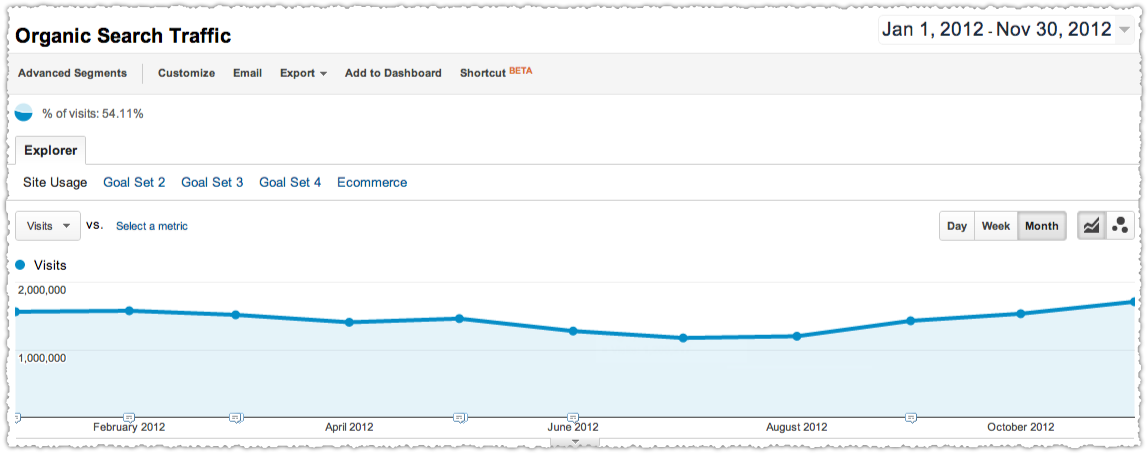

At a glance it looks like this has been a decent year for this client. But it’s actually better than it looks in October and November. Follow along to see just how much better.

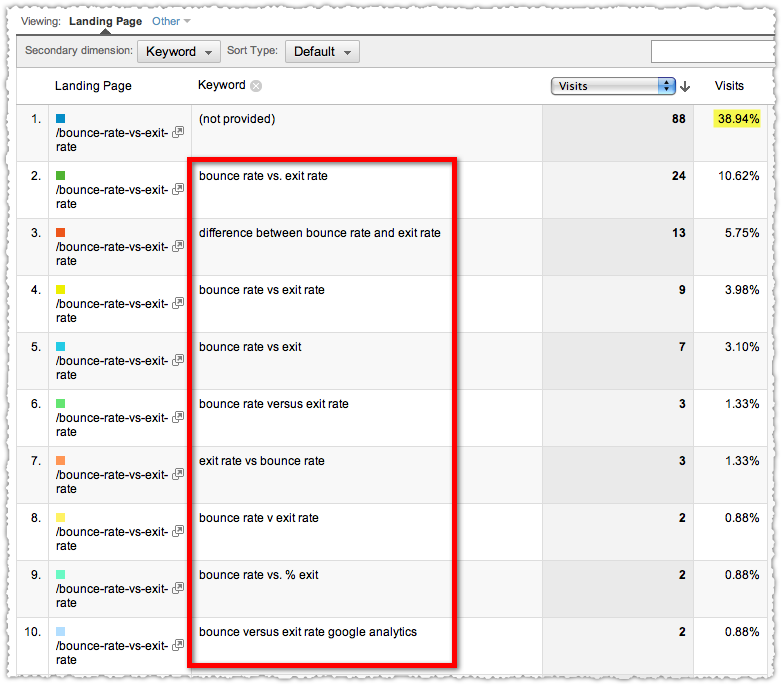

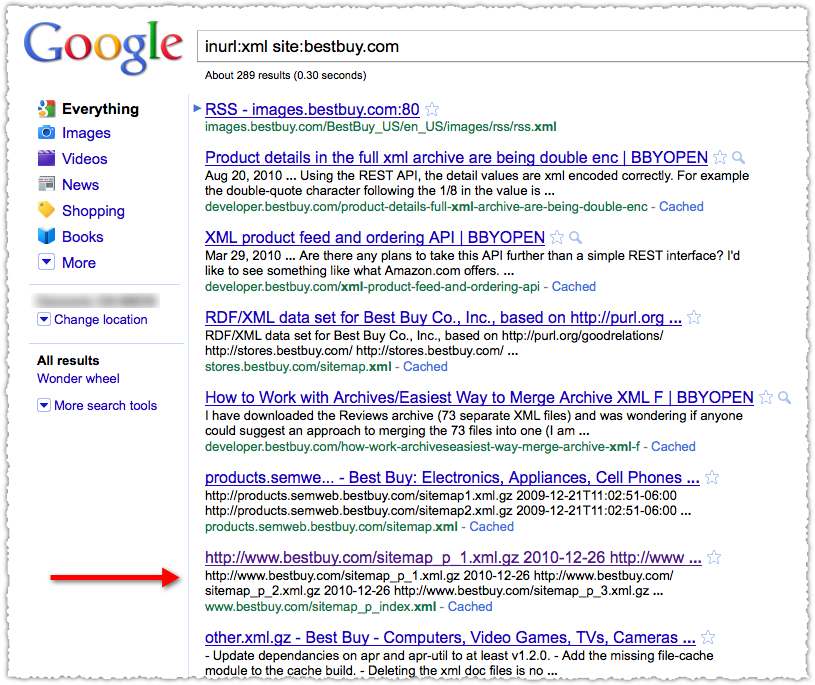

Create iOS Advanced Segments

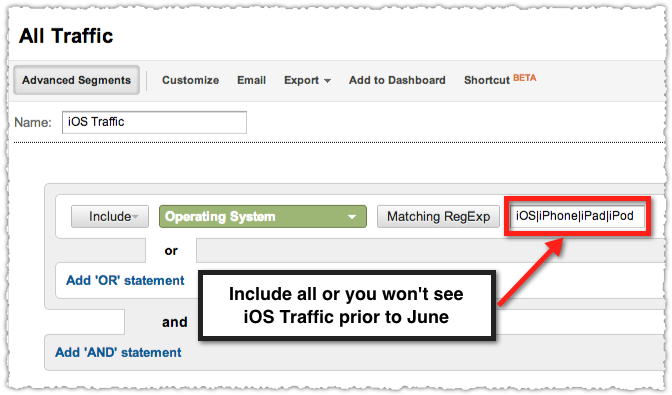

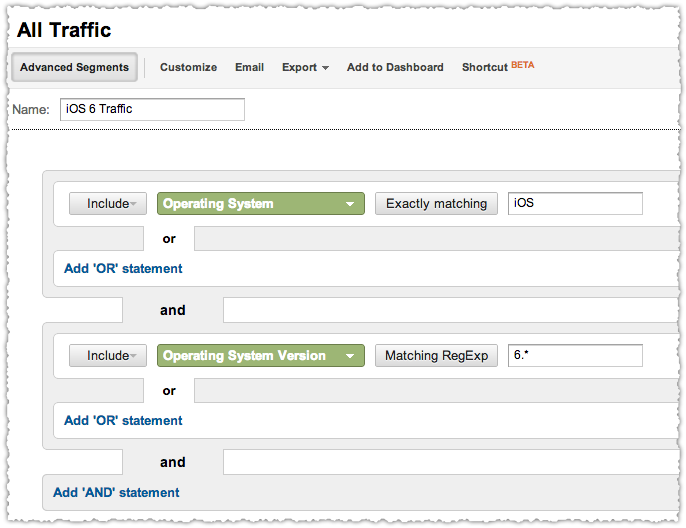

The first step is to create two Advanced Segments, one for iOS and one for iOS 6.

In May the labeling of Apple operating systems changed from specific devices to iOS. Include all four labels (the device-specific ones plus iOS) so you can see your iOS traffic for the entire year.

The iOS 6 segment is straightforward and will only be used to demonstrate and prove the problem. Also, if you want to perform this analysis on multiple analytics properties be sure to save these segments to any profile.
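
If it helps to think of the two segments as filters, here’s a rough sketch of the matching logic. I’m assuming the four operating system labels are iOS, iPhone, iPad and iPod, so check them against what your own reports show. The function names are hypothetical and this is not how Google Analytics evaluates segments internally.

```python
import re

# Assumed labels: the older device-specific names plus the newer "iOS" label.
IOS_LABELS = re.compile(r"^(iOS|iPhone|iPad|iPod)$")


def in_ios_segment(os_name):
    """True if the operating system label belongs to the all-iOS segment."""
    return bool(IOS_LABELS.match(os_name))


def in_ios6_segment(os_name, os_version):
    """True if the visit came from iOS with a major version of 6."""
    return os_name == "iOS" and os_version.split(".")[0] == "6"


print(in_ios_segment("iPad"))            # matches the all-iOS segment
print(in_ios6_segment("iOS", "6.0.1"))   # matches the iOS 6 segment
print(in_ios6_segment("iOS", "5.1.1"))   # iOS, but not iOS 6
```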

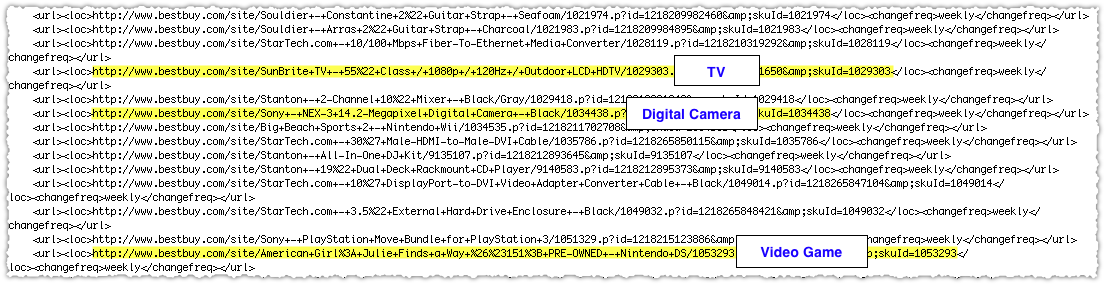

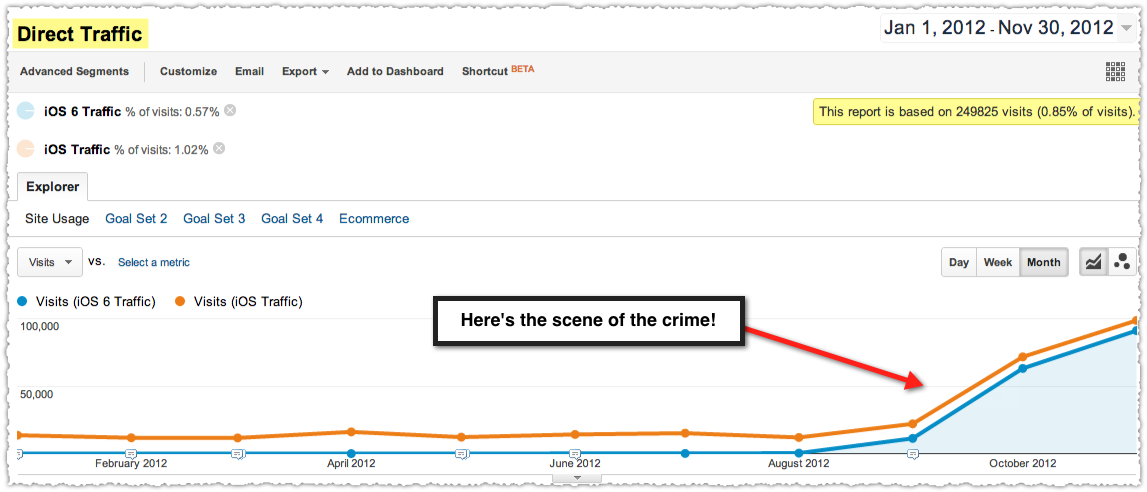

The Scene Of The Crime

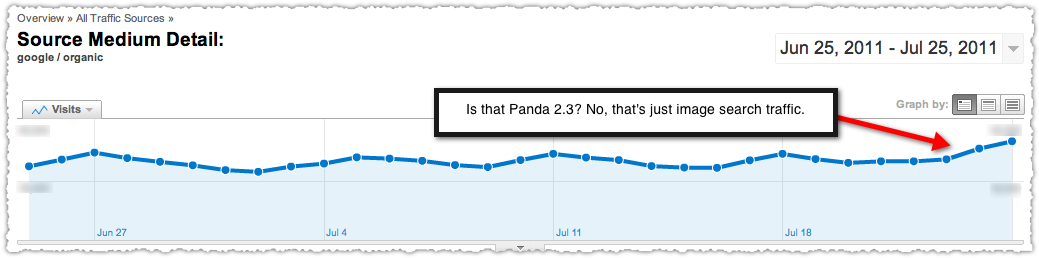

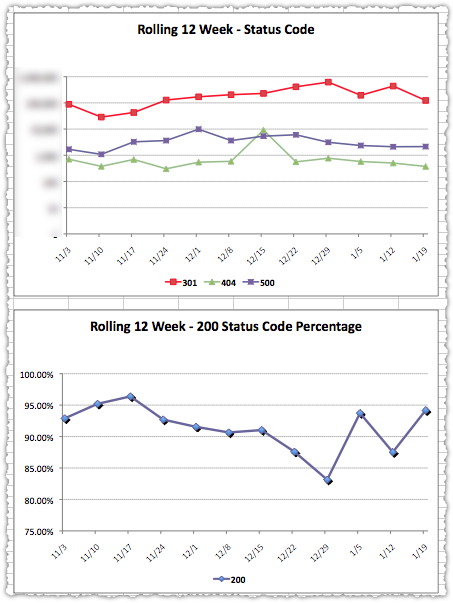

Once you have your advanced segments you want to apply them as you look at direct traffic by month.

This plainly shows that direct traffic suddenly jumped from traditional levels upon the release of iOS 6 in late September.

Reclaiming Stolen Search Traffic

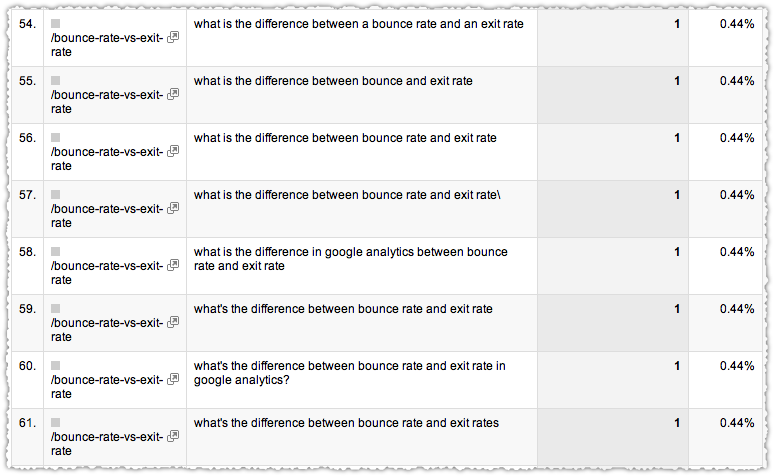

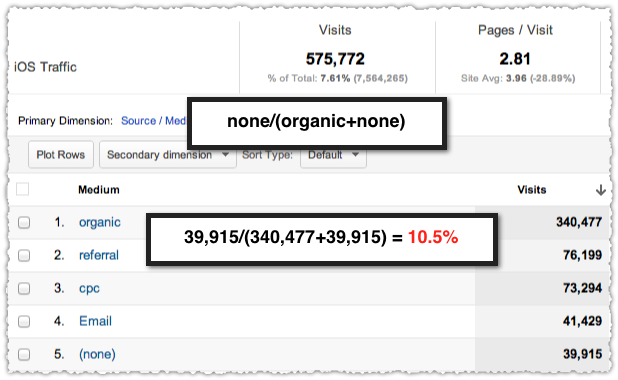

Every SEO should be reclaiming this stolen traffic to ensure they (and their clients) are seeing the real picture. Here’s my simple method of figuring out how much you should take back.

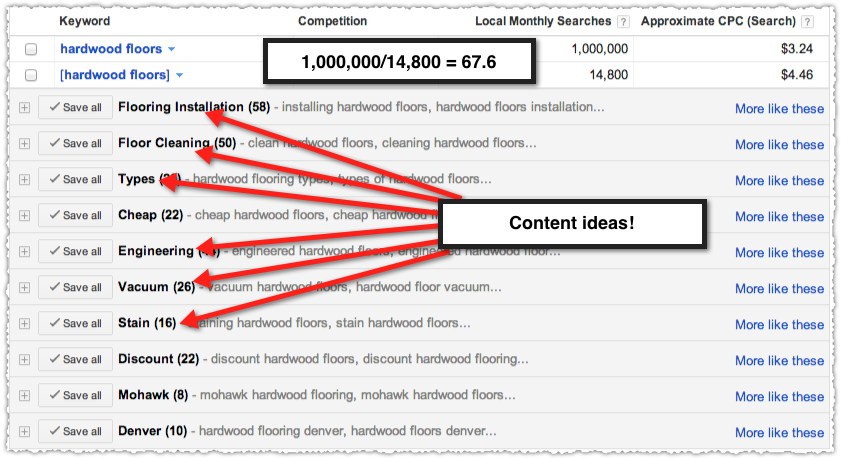

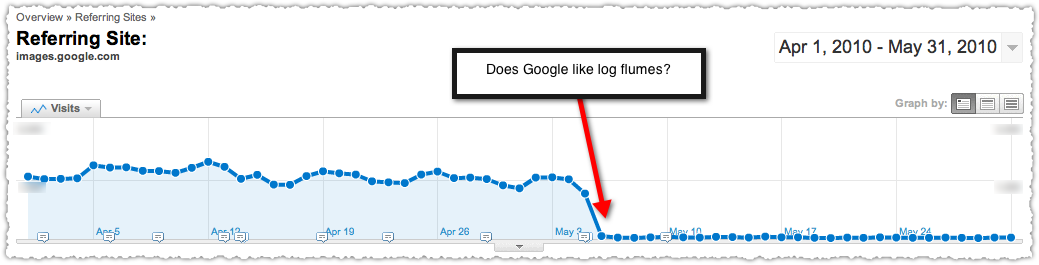

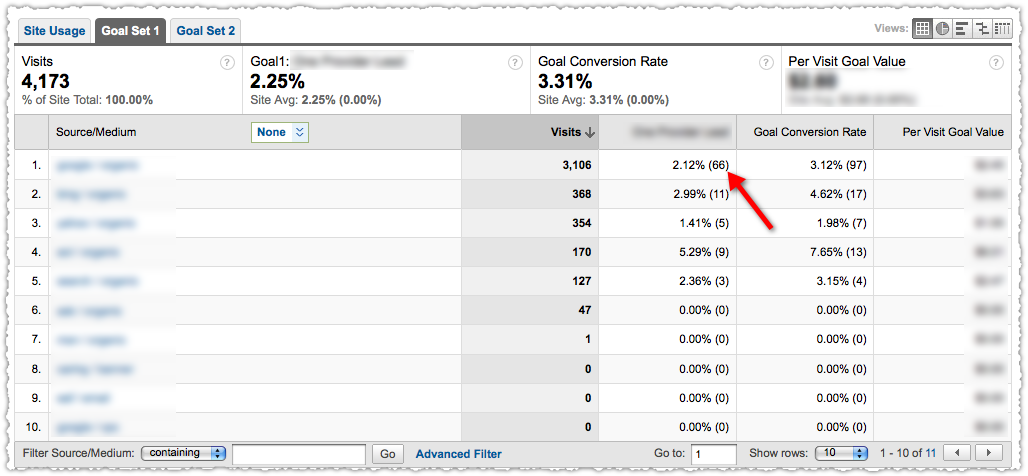

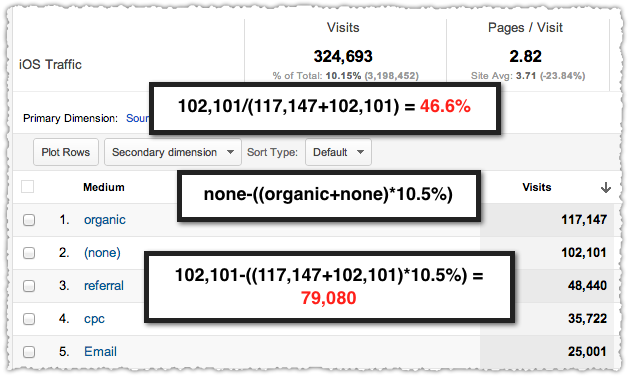

I’ve taken a three month slice of iOS traffic composed of April, May and June. From there I’m looking to see direct traffic as a percentage of the sum of direct and organic. The reason I’m not doing direct as a percentage of the total is to reduce any noise from referral spikes, paid search campaigns or other channel-specific fluctuations.

In this instance direct comprises 10.5%. If you want to go the extra mile and quell the OCD demons in your head (or is that just me) you can do this for every month to ensure you’ve got the right percentage. I did and am confident that the percentage for this site is 10.5%.

Be aware, it will be different for each site.

Next I look at November and perform the same calculation just to confirm that it’s out of whack. At 46.6% it’s clearly departed from the established baseline.
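
Here’s a small sketch of that baseline check, if you’d rather script it than eyeball it. The visit counts below are hypothetical round numbers chosen for illustration, not this client’s data, and `direct_share` is just a name I picked.

```python
def direct_share(direct, organic):
    """Direct visits as a fraction of direct + organic visits."""
    total = direct + organic
    if total == 0:
        raise ValueError("no direct or organic visits in this period")
    return direct / total


# Hypothetical iOS-segment visit counts per month.
monthly = {
    "April": {"direct": 1050, "organic": 8950},
    "May": {"direct": 1100, "organic": 9400},
    "June": {"direct": 1000, "organic": 8500},
    "November": {"direct": 4660, "organic": 5340},
}

# Check every month individually to see how stable the share is.
for month, visits in monthly.items():
    share = direct_share(visits["direct"], visits["organic"])
    print(f"{month}: {share:.1%}")

# Baseline across the three pre-iOS 6 months combined.
baseline_months = ("April", "May", "June")
baseline = direct_share(
    sum(monthly[m]["direct"] for m in baseline_months),
    sum(monthly[m]["organic"] for m in baseline_months),
)
print(f"Baseline: {baseline:.1%}")
```

A month whose share sits far above the baseline is your sign that search visits are hiding in direct.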

I simply apply the proper direct traffic percentage (10.5% in this case) to the sum of direct and organic traffic. That’s the real amount of direct traffic. I then subtract that from the reported direct traffic to find the lost search traffic number.

The equation is none - ((organic + none) * percentage), where none is the reported direct traffic and percentage is the baseline direct share. In this case I just reclaimed 79,080 search visits!
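
The same equation as a short sketch, using hypothetical visit counts rather than this client’s numbers. The function name and the choice to floor the result at zero are my own assumptions.

```python
def reclaimed_search_visits(direct, organic, baseline_share):
    """Estimate search visits hiding in direct traffic.

    Implements: none - ((organic + none) * percentage)
    """
    expected_direct = (direct + organic) * baseline_share
    # If reported direct is at or below the baseline there is nothing to reclaim.
    return max(0, round(direct - expected_direct))


# Hypothetical month: 46,600 reported direct visits, 53,400 reported organic.
direct, organic = 46_600, 53_400
reclaimed = reclaimed_search_visits(direct, organic, baseline_share=0.105)

print("Reclaimed search visits:", reclaimed)
print("Adjusted organic:", organic + reclaimed)
print("Adjusted direct:", direct - reclaimed)
```

Remember to swap in your own baseline percentage, since it will differ from site to site.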

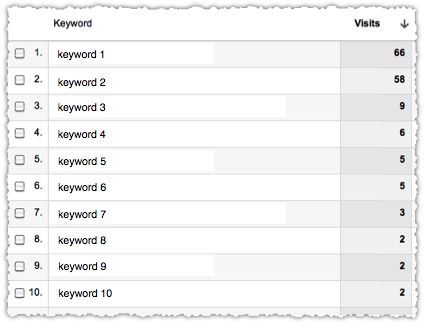

Better SEO Results

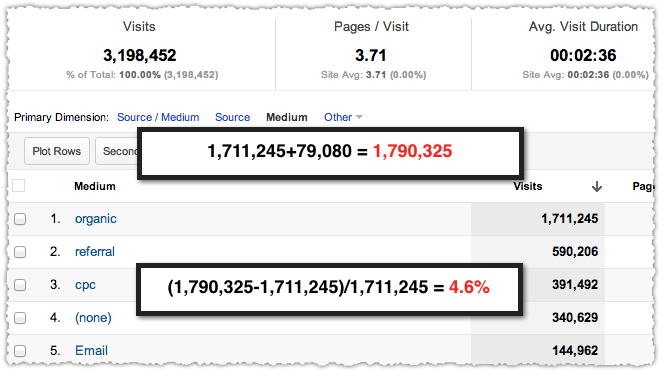

Get the credit you deserve and apply those stolen search visits to organic traffic.

A very quick calculation shows that reclaiming iOS search traffic produced a 4.6% bump in organic traffic for this client. That’s the best 32 minutes I’ve spent in a long time. Now it’s your turn.
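
That quick calculation is just the reclaimed visits divided by the organic visits you had reported. A minimal sketch, again with hypothetical numbers and a made-up function name:

```python
def organic_bump(reported_organic, reclaimed):
    """Relative increase in organic traffic after adding reclaimed visits."""
    if reported_organic <= 0:
        raise ValueError("reported organic visits must be positive")
    return reclaimed / reported_organic


# Hypothetical totals for the reporting period.
reported_organic = 1_000_000
reclaimed = 46_000

print(f"Organic bump: {organic_bump(reported_organic, reclaimed):.1%}")
```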

TL;DR

Changes in how Safari searches are passed to Google Analytics are causing organic searches to be listed under direct traffic. Give clients the real picture and get the credit you deserve by properly attributing iOS traffic.